Projects – Spring 2026


Sponsor Background

Aspida is a tech-driven, agile insurance carrier based in Research Triangle Park. We offer fast, simple, and secure retirement services and annuity products for effective retirement and wealth management. More than that, we’re in the business of protecting dreams; those of our partners, our producers, and especially our clients.

Background and Problem Statement

When developers submit pull requests, it is often unclear which platforms, features, or business areas can be impacted by the changes. This lack of visibility slows down QA planning, increases regression risk, and makes it difficult to prioritize testing effectively. Manual tagging and tribal knowledge are not scalable solutions. A tool that leverages machine learning to analyze code changes and provide actionable insights into impacted areas would significantly improve release confidence and streamline the testing process.

Project Description

Aspida proposes building a tool that automatically analyzes PRs, clusters files by functional areas, and predicts impacted features or components. The impact analysis should consider both structural and semantic relationships within the codebase to provide accurate predictions. The system should:

- Ingest PR Data

- Cluster the Repository

- Map Changes in the PR to Clusters

- Generate an Impact Report

- Stretch Goals:

  - Develop a dashboard to visualize clusters and track PR impacts.

  - Integrate with test management tools to auto-trigger relevant regression tests.
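The "Map Changes in the PR to Clusters" and "Generate an Impact Report" steps could be sketched roughly as follows. This is only an illustration under strong assumptions: the clusters are taken as already known and keyed by path prefix, and the cluster names, prefixes, and function names are all hypothetical. A real solution would derive clusters from structural and semantic analysis, and the sponsor prefers Node.js with TypeScript; Python is used here for brevity.

```python
from collections import defaultdict

# Hypothetical cluster table: path prefix -> functional area.
CLUSTERS = {
    "src/billing/": "Billing",
    "src/auth/": "Authentication",
    "src/ui/": "Web UI",
}

def map_to_clusters(changed_files, clusters=CLUSTERS):
    """Group changed file paths by the cluster whose prefix matches."""
    impacted = defaultdict(list)
    for path in changed_files:
        # Prefer the longest matching prefix so nested areas win.
        matches = [prefix for prefix in clusters if path.startswith(prefix)]
        area = clusters[max(matches, key=len)] if matches else "Unclustered"
        impacted[area].append(path)
    return dict(impacted)

def impact_report(changed_files):
    """Return (area, touched file count) pairs, most impacted first."""
    grouped = map_to_clusters(changed_files)
    return sorted(
        ((area, len(files)) for area, files in grouped.items()),
        key=lambda item: (-item[1], item[0]),
    )
```

Files that match no cluster are reported as "Unclustered" rather than dropped, so reviewers can see where the clustering has gaps.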

Technologies and Other Constraints

- LLM – Claude via AWS Bedrock (preferred)

- Cloud Environment – AWS (preferred, but local execution possible)

- UI (if needed) – React

- Backend – Node.js with TypeScript

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Background

Bandwidth is a software company focused on communications. Bandwidth’s platform is behind many of the communications you interact with every day. Calling mom on the way into work? Hopping on a conference call with your team from the beach? Booking a hair appointment via text? Our APIs, built on top of our nationwide network, make it easy for our innovative customers to serve up the technology that powers your life.

Background and Problem Statement

Any organization working with personal or confidential data requires tools that can remove sensitive information safely and accurately. Manual redaction processes are difficult to scale and can lead to errors. Bandwidth has an opportunity to provide automated, privacy-first tooling that aligns with our trust and compliance commitments.

Project Description

The AI-Redaction Service is a tool designed to automatically detect and remove sensitive information, such as phone numbers, emails, dates (e.g., DOB), credit card numbers, and other Personally Identifiable Information (PII), from call transcripts or audio. It enhances privacy and compliance for customers using Bandwidth’s call recording and transcription features. Students will build a text-based redaction MVP, with optional audio enhancements as stretch goals.

Objectives

- Automatically detect and redact common PII in transcripts.

- Provide structured output summarizing detected entities.

- Build a simple, intuitive interface or API for redaction workflows.

- Deliver a working demonstration suitable for internal and customer use cases.

Core Features (MVP)

- Upload or paste transcript → receive redacted version.

- Detect PII categories including: phone numbers, emails, credit card numbers, account numbers, timestamps, and other structured entities.

- Replace sensitive elements with standardized tokens (e.g., [REDACTED_PHONE]).

- JSON summary of detected items.

- No customer data required; supports synthetic transcripts.
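A minimal sketch of the core features above, assuming a purely regex-based first pass. The three patterns and the function names are illustrative assumptions, not Bandwidth's detection logic, and they cover only a few simple formats; the sample data in any test should be synthetic.

```python
import json
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
# Order matters: card numbers are replaced before the shorter phone pattern runs.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "CREDIT_CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    "PHONE": re.compile(r"\b\d{3}[ .-]?\d{3}[ .-]?\d{4}\b"),
}

def redact(text):
    """Replace matches with standardized tokens and record what was found."""
    entities = []
    for label, pattern in PATTERNS.items():
        def replace(match, label=label):
            # Store the type and length only, so the summary holds no raw PII.
            entities.append({"type": label, "length": len(match.group())})
            return f"[REDACTED_{label}]"
        text = pattern.sub(replace, text)
    return text, entities

def summarize(entities):
    """Build a JSON summary with a count per detected category."""
    counts = {}
    for entity in entities:
        counts[entity["type"]] = counts.get(entity["type"], 0) + 1
    return json.dumps({"total": len(entities), "by_type": counts}, sort_keys=True)
```

Keeping each pattern behind a label makes it straightforward to add categories later or to swap one detector for an NER model without changing the token format.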

Success Criteria

- High accuracy for common structured PII.

- Maintains readability of redacted transcripts.

- Low false positives and minimal missed detections.

- Demonstrates value for Bandwidth customers and internal teams.

- Clear path for productization or integration into existing tools.

Stretch Features

- Named Entity Recognition (NER) model for names, addresses, and contextual PII.

- Audio redaction (mute or bleep sensitive portions).

- Integration with Bandwidth Recording or Transcription API.

- Real-time or streaming redaction.

- Customizable redaction rules.

Technologies and Other Constraints

Technical Approach

- Backend API for detection, redaction, and output formatting.

- Two-layer pipeline:

  - Regex + rule-based detection for structured PII.

  - ML/NER model for contextual PII (optional).

- Simple web UI for upload and visualization (React, Streamlit, or HTML/JS).

- Synthetic test data for evaluation.

- Clear explainability and auditing of detected entities.

Expected Deliverables

- Functional text-based redaction service (API or UI).

- Documentation of detection logic and patterns.

- Sample transcripts and synthetic test suite.

- Demo of redaction workflow.

- GitHub repository with setup and usage instructions.

- Optional: audio redaction and/or enhanced NER layer.

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Information & Background

The Computer Science department at NC State teaches software development using a “real tools” approach. Students use real-world industry tools, such as GitHub and Jenkins, to learn about software development concepts like version control and continuous integration. By using real tools, students are better prepared for careers in computing fields that use these tools.

Problem

Google Docs maintains a history of all revisions made to a given document. Several browser plugins exist that allow a user to replay the changes made to a document. These browser plugins allow users to jump backward, forward, or ‘replay’ an animation of the revision history at custom speeds. The plugins also provide analytics, such as displaying a calendar view that identifies which days revisions were made.

GitHub provides an experience similar to Google Docs in that a revision history is maintained to track changes to a software codebase. However, it’s often difficult to understand how a code file has changed over time, especially on team assignments where there may be hundreds of commits. GitHub provides an interface to manually inspect commit histories, but no interface exists to view a ‘live replay’ of the history of a given file.

Many core computer science courses are considered ‘large’ courses, often with over 200 students enrolled each semester. A tool that allows replaying a full history of changes to a file in a repository over time could help students better understand their own coding approaches (such as “what have I tried already?” or “what work has my team member contributed since our last meeting”). The tool could also help teaching team members understand how to better help students debug logic errors since they could quickly replay a history of edits to a file to know what a student has already attempted.

Solution

The user should indicate which GitHub repository and branch they wish to inspect. The user should also be able to select a specific file for which to replay commit history. The tool should allow users to perform actions such as ‘rewind’, ‘fast forward’, ‘pause’ or ‘play’ while presenting a visual replay of all changes made to the given file. Each change should be clearly highlighted and annotated with information such as the name of the person who committed the code. A visual timeline should also be presented to show a history of commits. Within this timeline, the tool should clearly indicate how many lines of code were added/removed from one commit to the next. Additional features may be identified during the course of the project.
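The per-commit "lines added/removed" figures for the timeline could be computed along these lines. This is a sketch using Python's standard `difflib`, assuming the file's contents at each commit have already been fetched; the `versions` shape and function names are hypothetical, and the project's backend would be written in JavaScript/TypeScript or Java.

```python
import difflib

def line_changes(old_text, new_text):
    """Count lines added and removed between two versions of a file."""
    added = removed = 0
    for line in difflib.ndiff(old_text.splitlines(), new_text.splitlines()):
        if line.startswith("+ "):
            added += 1
        elif line.startswith("- "):
            removed += 1
    return added, removed

def timeline(versions):
    """Build timeline entries from (author, file_text) pairs in commit order.

    The input shape is an assumption for illustration only.
    """
    entries = []
    previous = ""
    for author, text in versions:
        added, removed = line_changes(previous, text)
        entries.append({"author": author, "added": added, "removed": removed})
        previous = text
    return entries
```

A modified line counts as one removal plus one addition here, which matches how most diff tools report changes.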

Technologies

The commit history replay tool should be designed and implemented as a web application. The backend should be implemented in either JavaScript/TypeScript or Java as a REST API. The frontend will require the use of visualization libraries, such as D3.js and D3-derived libraries. Using a frontend framework such as React is acceptable. To simplify maintenance of the application, dependencies should be limited to those that are really needed.

Sponsor Background

Dr. Tian is a research scientist who works with children on designing learning environments to support artificial intelligence learning in K-12 classrooms.

Dr. Tiffany Barnes is a distinguished professor of computer science at NC State University. Dr. Barnes conducts computer science education research and uses AI and data-driven insights to develop tools that assist learners’ skill and knowledge acquisition.

Background and Problem Statement

Elementary students rarely have opportunities to learn computational thinking, data literacy, and AI concepts through creative, meaningful activities. Traditional instruction often separates storytelling from STEM learning, even though upper-elementary students naturally reason about cause and effect, choices, and narrative structure. Schools and libraries need developmentally appropriate tools that let children learn these skills through playful exploration—not through direct instruction alone.

This project addresses this gap by using AI-supported computational storytelling. The challenge for the student team is to design and develop components of a web-based platform where children (grades 3–5) can create branching stories, test the logic behind their narratives, analyze reader data, and receive age-appropriate AI scaffolding. The motivation is to broaden access to computational thinking and AI literacy skills by embedding them into an engaging creative medium that teachers and librarians can use in classrooms, camps, and community programs.

Project Description

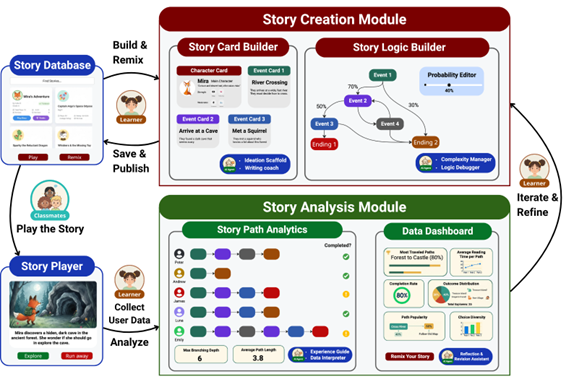

We envision a modular, child-friendly web platform (see Figure 1 for user flow overview) that guides students through story creation, story logic design, and data-driven story analysis, with optional AI assistants providing hints and reflective prompts.

Figure 1 Storytelling Platform Overview

We envision the platform containing the following components:

1. Story Creation Tools

A visual editor where students design characters, events, and branching choices. Students can assign probabilities to different story paths and visualize their narrative as a decision tree.

2. Story Player

An interactive reader mode that lets peers “play” through the story. Each choice generates data about which paths are selected and how often various endings occur.

3. Story Database & Interaction Data Logging

A shared gallery where students can publish stories, explore others’ work, and remix stories as new starting points. All user interaction data with the platform will be logged for research data analysis later.

4. Story Analysis & Data Dashboard

Child-friendly visualizations (bar charts, heatmaps, path diagrams) showing which choices were popular, which branches failed or succeeded, and how changing probabilities might affect outcomes.

5. AI Assistants

LLM-based helpers that can suggest improvements (e.g., “Do you want to clarify this description?”), flag logical inconsistencies, or help students interpret analytics data.

Because of the scope of senior design projects, we encourage the student teams to choose one to three modules from 1-4 as a semester project.

Use Case Example

Lina, a fourth grader, logs into the AI-in-the-Loop platform for the first time to create a story. She wants to write about a clever fox named Mira. Lina clicks on the character card to create Mira and types in Mira’s strengths: smart and brave (see Figure 1 illustrating some of these choices). The AI prompts her to consider weaknesses: “What challenges might Mira face? Adding a weakness can make your story more interesting.” Lina adds: “Mira can’t resist exploring dangerous places.” With that, Mira feels like a real character, and Lina is eager to see what adventures she might have.

Lina starts creating an event card: “Mira finds a dark cave in the forest.” Below the card, the platform prompts her to add choices. Lina clicks the “+” button two times and types: 1) explore the cave or 2) run away. Each choice automatically generates a new blank Event Card, which Lina clicks to add descriptions and small illustrations. The system prompts Lina to assign probabilities to each path, and she initially sets them equal: explore (50%) and run away (50%). The AI gently suggests: “Since Mira is brave and clever, should the chance of exploring the cave be higher?” Lina thinks for a moment and decides to raise it to 70%.

Once Lina has filled in all next-event cards, she clicks “Play Your Story”. She clicks through each choice, watching the story unfold dynamically. Some outcomes succeed, others fail, and the AI gently prompts reflection: “Why do you think your hero lost in this path? Could a different attribute have helped?” Lina adjusts a choice, testing how Mira’s bravery changes the outcome.

Once Lina finishes the story, she publishes her story to the story database on the platform. Over the next week, her peers play her story and some even choose to remix it to extend the story beyond her original narrative, and the platform collects reader data: which paths were chosen most, which failed, and which led to surprise endings. Lina later opens her Data Dashboard to examine the story outcomes, sees that most readers avoided running away, and notices that her “explore” choice led to many successful outcomes.
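To make the link between branch probabilities and expected outcomes concrete, here is a small sketch of how a dashboard might compute the chance of reaching each ending. The story representation, node names, and second-level choices are hypothetical; only the 70%/30% split comes from the use case above. It assumes the story graph has no loops.

```python
# Hypothetical story shape: node -> list of (choice, probability, next node).
# Nodes with no choices are endings.
STORY = {
    "cave": [("explore", 0.7, "inside"), ("run away", 0.3, "home")],
    "inside": [("open chest", 0.5, "treasure"), ("sneak out", 0.5, "home")],
    "treasure": [],
    "home": [],
}

def ending_probabilities(story, start, prob=1.0, totals=None):
    """Multiply probabilities along each path and sum them per ending.

    Assumes the story has no cycles; a looping story would recurse forever.
    """
    if totals is None:
        totals = {}
    choices = story[start]
    if not choices:
        totals[start] = totals.get(start, 0.0) + prob
        return totals
    for _choice, choice_prob, next_node in choices:
        ending_probabilities(story, next_node, prob * choice_prob, totals)
    return totals
```

Comparing these predicted values with the logged reader choices is one way students could see how probabilities they assign differ from what readers actually do.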

Benefit to End Users

Children explore computational logic and data literacy through creative storytelling. Educators gain a standards-aligned tool for integrating STEM skills into authentic narrative tasks.

Technologies and Other Constraints

Students can begin by exploring Twine (twinery.org) to understand branching story representation, node-based narrative structure, and basic functionality. This platform will not use Twine directly, but can take some inspiration from Twine’s editor and player workflow.

Suggested Technologies:

- Front-end framework: React, Vue, Svelte, etc.

- Backend: Node.js, Python/FastAPI, etc.

- Database: PostgreSQL, MongoDB, or similar to store stories, branches, analytics data.

- Visualization library: D3.js, Chart.js, Plotly for the Data Dashboard.

- LLM integration: Llama, local APIs

Preferred Paradigm:

- Web-based solution; mobile-responsive is helpful but not required.

- For AI components, API-based design is preferred to allow modular integration.

Constraints & Considerations:

- Use synthetic data for testing.

- If using external LLM APIs, teams must follow usage policies and rate limits.

- All code must be original (no copyrighted code beyond permissible open-source licenses).

- No special hardware required.

Sponsor Background

Nathalie Lavoine is an Associate Professor in Renewable (Nano)materials Science and Engineering. As part of her research and teaching initiatives, she focuses on developing sustainable packaging from plant-derived resources to replace petroleum-derived products, lower the environmental footprint of packaging and increase the shelf-life and safety of food products.

By training, Dr. Lavoine is a Packaging Engineer. As an instructor at NC State, she shares her passion through the offering of one annual undergraduate level course on fiber-based packaging and regular guest lectures on this topic.

A common misperception reduces packaging to ‘just a box’. The reality is that sustainable containers are the product of a highly complex, multidisciplinary orchestration. Their development requires the integration of materials science, environmental ethics, mechanical engineering, automated logistics, and beyond. This project is driven by the need to dismantle this reductive view, engaging students, faculty and the public to recognize the technical depth, rigorous labor, and ethical considerations embedded in the materials we use every day.

Background and Problem Statement

The field of Sustainable Packaging is a multidisciplinary science involving complex material properties, historical context, and intricate lifecycle data. Traditional educational methods, primarily static lectures and summative paper-based assessments, often struggle to engage students with the highly technical and data-driven nature of the subject. There is a "visualization gap": students can memorize facts about recycling rates or polymer barriers, but they lack an interactive way to see how these elements combine to create a "complete" sustainable solution.

The motivation for this project is to bridge this gap through gamification. By transforming a dense database of packaging science into a competitive, "Kahoot-style" trivia experience, we can increase knowledge retention and provide students with a sense of progress. The project addresses the need for a modern, interactive tool that can be used both for individual study and as a real-time classroom engagement platform.

Project Description

The proposed solution is "The Sustainable Box," a full-stack web application. The core mechanic is a trivia game where players answer questions across five color-coded categories (such as History, Technical, 3 Pillars, Ethics, and End-of-Life).

Some examples of key features for this game:

- Instead of a traditional trivia pie, the app will feature a 3D-rendered box. As a student masters a category, the corresponding face of the box fills with color. This provides a tangible, visual representation of building sustainable knowledge.

- The game should offer multiple gameplay modes: (1) a "Teacher Mode" allowing an instructor to host a live game, where students join via a room code on their own devices to compete in real time; (2) a self-paced, single-player mode for individual review; and (3) a multi-player mode for social activity.

- Because the primary use of this game will be for teachers, a teacher’s dashboard would be important. Instructors should gain access to an analytics suite that tracks class performance. This would allow them to identify knowledge silos, meaning specific categories where the whole class (or a large number of students) might be struggling (e.g., if the "Blue/Technical" face remains empty for most students).

- We could also think of an administrative backend where an LLM can be used to generate/verify question sets based on specific source materials to ensure the database remains fresh and accurate. This is to be discussed, since we would need to confirm that the generated sources and questions are correct.
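The box-face fill and the "knowledge silo" check for the teacher's dashboard might be sketched as follows. The data shape, the thresholds, and the function names are assumptions made for illustration; the category names are the examples given above.

```python
CATEGORIES = ["History", "Technical", "3 Pillars", "Ethics", "End-of-Life"]

def face_fill(correct, total):
    """Fraction of a box face to fill for one category, from 0.0 to 1.0."""
    return 0.0 if total == 0 else min(correct / total, 1.0)

def knowledge_silos(class_results, threshold=0.5, share=0.5):
    """Return categories where more than `share` of students fall below `threshold`.

    class_results: {student: {category: (correct, total)}} (hypothetical shape).
    A category a student has not attempted counts as an empty face.
    """
    silos = []
    for category in CATEGORIES:
        fills = [
            face_fill(*results.get(category, (0, 0)))
            for results in class_results.values()
        ]
        struggling = sum(1 for fill in fills if fill < threshold)
        if fills and struggling / len(fills) > share:
            silos.append(category)
    return silos
```

Both thresholds are parameters because what counts as "struggling" is a teaching decision the sponsor would need to make.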

Other features can be developed and included. This list is not exhaustive, and I would appreciate student input as they will be the primary audience. We could also think of different levels of difficulties.

Another aspect of the project will be the generation and collection of questions & answers. I may not have enough time and bandwidth to find all the different questions per category (I believe a classic Trivia game relies on 30-50 questions per category). Hence, the student team would be expected to do some additional literature research and to dive into this topic.

Examples of categories include: 1- history & evolution (ancient materials, industrial revolution, mid-century rise of plastics), 2- technical aspects/engineering (polymers, barrier properties, manufacturing process, structural integrity), 3- sustainability (environmental impact, social responsibility, economic viability – global vision), 4- consumerism & ethics (marketing psychology, regulations, labeling, user experience), and 5- end-of-life and data (recycling rates, biodegradation vs composting, LCA, waste management systems).

Technologies and Other Constraints

- A Web-based application is required to ensure accessibility across different devices (laptops, tablets, and smartphones) without requiring an app store download.

- Students should be able to access the game easily, but at this stage the game should not be open access. Initially, access could be restricted to students with a specific class code or an ncsu.edu address to manage server load and data privacy.

- A real-time communication framework may be necessary for the classroom mode.

- A database will be needed to manage a library of 150–250 questions and student leaderboards.

- Potential constraints:

  - The system must be able to handle at least 20–30 (at first) concurrent users in a single "Live Session" without significant latency.

  - Students must implement a way to flag or verify LLM-generated questions to ensure scientific accuracy.

  - The final product should be developed with an Open Source mindset, though the specific packaging science database may remain proprietary to NC State – to be confirmed depending on the nature of the sources.

Sponsor Background

LexisNexis Legal & Professional, an information and analytics company, states its mission as: to advance the rule of law around the world. This involves combining information, analytics, and technology to empower customers to achieve better outcomes, make more informed decisions, and gain crucial insights. They strive to foster a more just world through their people, technology, and expertise for both their customers and the broader communities they serve.

Background and Problem Statement

In its continuing mission to support the Rule of Law, LexisNexis has around 3,000 people working on over 200 projects per year developing software for its products.

In a rapidly changing environment, as opportunities arise and priorities shift, the question often asked (and rarely answered with confidence) is “What is the consequence of moving someone from one project to another?”

LexisNexis needs an intuitive tool to manage and track the association of people with projects, to provide the insight and data necessary to support business priority decisions.

Project Description

LexisNexis is looking for a simple, intuitive application that will allow the management of resource allocation to teams and projects.

The tool will be used by Software Development Leaders to group their people into teams and to associate them with projects for a given duration.

It should help Leaders readily determine issues and opportunities with their teams and projects, and take actions accordingly.

The data collected will support decisions about project resourcing when competing priorities must be weighed.

It should ultimately also support financial tracking and planning.
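One possible way to model the association of people with projects for a given duration, and to preview the consequence of moving someone, is sketched below. The `Allocation` fields, the full-time-equivalent fraction, and the function names are assumptions for illustration, not the existing application's schema; the sponsor's preferred stack is C#/.NET.

```python
from dataclasses import dataclass, replace
from datetime import date

@dataclass(frozen=True)
class Allocation:
    person: str
    project: str
    start: date
    end: date
    fraction: float = 1.0  # share of the person's time on this project

def staffing(allocations, on):
    """Total allocated effort per project (in full-time equivalents) on a date."""
    totals = {}
    for a in allocations:
        if a.start <= on <= a.end:
            totals[a.project] = totals.get(a.project, 0.0) + a.fraction
    return totals

def move(allocations, person, source, target):
    """Return a new list with the person's source-project allocations moved to target."""
    return [
        replace(a, project=target)
        if a.person == person and a.project == source
        else a
        for a in allocations
    ]
```

Because `move` returns a new list instead of editing in place, a leader could compare `staffing` before and after a proposed change without committing to it.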

Technologies and Other Constraints

This project extends a foundation laid by a previous NCSU Senior Design team.

The team is at liberty to determine which elements of the previous team’s work they wish to retain and which they feel would benefit from rework/reimplementation.

The preferred solution would be an application accessible through Microsoft Teams, LexisNexis’ collaboration tool of choice.

LexisNexis is best placed to support development in C#, .NET, Angular and SQL Server, although the team may consider other technologies if appropriate.

The initial source of data will be CSV/Excel spreadsheets.

Organizational data will ultimately be sourced through Active Directory, available through Microsoft Graph API.

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Background

ShareFile is a leading provider of secure file sharing, storage, and collaboration solutions for businesses of all sizes. Founded in 2005 and acquired by Progress Software in 2024, ShareFile has grown to become a trusted name in the realm of enterprise data management. The platform is designed to streamline workflows, enhance productivity, and ensure the security of sensitive information, catering to a diverse range of industries, including finance, healthcare, legal, and manufacturing.

Background and Problem Statement

In today’s business environment, every organization—whether a startup, small business, or enterprise launching a new product—must establish a strong and recognizable brand identity. A brand identity includes visual elements such as logos, color palettes, and typography, as well as messaging components like taglines and brand voice. These elements shape how customers perceive a business and significantly influence trust, credibility, and market differentiation.

Historically, creating a cohesive brand identity required hiring branding agencies or freelance designers. These services often cost thousands to tens of thousands of dollars and can take weeks or months to complete. For many small businesses and entrepreneurs, this level of investment is unrealistic. As a result, they frequently launch with inconsistent, unprofessional, or generic branding that limits their competitiveness.

DIY design tools have attempted to democratize branding, but they still require substantial creative skill and strategic understanding. Users must know color theory, design principles, typography, and marketing psychology to produce effective brand assets. Even when tools provide templates or basic logo generators, the results often lack originality and fail to communicate a unique brand story.

Another major challenge is brand cohesion. Ensuring that a logo works with a color palette, that a tagline aligns with the visual identity, and that all elements communicate the right message requires expertise most users do not possess. Existing tools treat each asset separately, leaving users to manually piece together a brand system.

The current landscape has reduced the barrier to creating assets, but not to creating high-quality, cohesive, professional brand identities. We want to further reduce the time, cost, and expertise required by applying generative AI technology. By leveraging AI, a brand identity platform could generate complete, harmonized brand systems based on simple user prompts and intelligent design principles.

Project Description

The goal of this project is to create an AI-powered Brand Identity Studio that enables users to generate comprehensive, cohesive brand assets—including logos, color palettes, and taglines—through natural language prompts. The system will combine multimodal generative AI (text-to-image, text-to-text, and algorithmic color generation) with a backend platform for managing brand projects, asset versions, and analytics.

There are two primary user personas:

Brand Creators

These users initiate brand projects by providing prompts such as “Create a modern, minimalist brand identity for a sustainable skincare company.” They can iterate on AI-generated assets, mix and match components, and export production-ready files.

Brand Reviewers / Stakeholders

These users view generated assets, provide feedback, and help evaluate which brand directions best fit the business goals.

The platform will ensure that all generated assets work harmoniously—colors are accessible and complementary, logos scale effectively, and taglines align with the intended brand voice and target market.

Requirements

1. Brand Asset Generation

AI-Generated Brand Systems

Use generative AI models to produce:

- Logos (text-to-image)

- Taglines and brand messaging (text-to-text)

- Color palettes (algorithmic color theory engine)

Prompt-Based Generation

Users describe their business, audience, and style preferences. The AI generates:

- Multiple brand directions

- Cohesive asset sets

- Variations for refinement

Asset Types

- Logos (primary, secondary)

- Color palettes (primary, secondary, accessible variants)

- Taglines and messaging snippets

- Templates & Collateral (Presentation templates, business cards)

- Typography recommendations (stretch)

Customization Tools (Stretch)

- Edit color palettes

- Regenerate logos with constraints

- Adjust tagline tone or style

Preview Mode (Stretch)

Show assets applied to mockups, such as:

- Business cards

- Social media banners

- Product packaging

2. User Interface (Frontend)

Brand Workspace

An interface where users:

- View generated assets

- Compare variations

- Save or discard versions

Asset Renderer

Display logos, palettes, and taglines in a cohesive layout.

Export Tools

Allow users to download:

- PNG, SVG, JPG logo files

- Color palette files (ASE, JSON)

- Tagline text files

3. Backend

Flexible Schema

Support multiple asset types and versions per project.

CRUD APIs

- Create: New brand projects and asset generations

- Read: Retrieve brand assets, versions, and analytics

- Update: Modify or regenerate assets

- Delete: Remove projects or versions

Asset Validation

Ensure generated assets meet accessibility and quality standards (e.g., color contrast).
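As one example of such a check, the color-contrast test could follow the WCAG 2.x contrast ratio definition. The sketch below assumes colors arrive as six-digit hex strings; the function names are hypothetical, and the 4.5:1 threshold is the WCAG AA requirement for normal-size text.

```python
def _linear(channel):
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4

def relative_luminance(hex_color):
    """Relative luminance of a color given as '#RRGGBB' or 'RRGGBB'."""
    hex_color = hex_color.lstrip("#")
    r, g, b = (int(hex_color[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linear(r) + 0.7152 * _linear(g) + 0.0722 * _linear(b)

def contrast_ratio(foreground, background):
    """Contrast ratio between two colors, from 1.0 (same) to 21.0 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)

def passes_aa(foreground, background):
    """True if the pair meets WCAG AA for normal-size text (at least 4.5:1)."""
    return contrast_ratio(foreground, background) >= 4.5
```

A generated palette could be validated by running `passes_aa` on each intended text and background pairing and regenerating any pair that fails.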

4. Integration with AI Services

AI Model Integration

Use external or custom-trained models for:

- Logo generation (image models)

- Tagline generation (LLMs)

- Color palette generation (algorithmic + AI refinement)

- RAG

Natural Language Input

Users describe their brand vision in plain language.

5. Data Management

Storage

Store:

- Brand metadata

- Asset files

- Version history

- User feedback

Data Export (Stretch)

Allow export of brand project data for external analysis.

6. Analytics and Insights (Stretch)

Brand Performance Dashboard

Track:

- Which generated assets users prefer

- Which styles perform best across industries

- Engagement metrics (views, downloads)

Industry Benchmarking

Compare generated brand styles to common patterns in similar markets.

Technologies and Other Constraints

Frontend: React is suggested as a front-end framework.

Backend: Python is suggested for any inference or semantic RAG back-end.

Cloud Providers: If cloud providers are used, AWS is preferred.

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Background

The Undergraduate Curriculum Committee (UGCC) reviews courses (both new and modified), curriculum, and curricular policy for the Department of Computer Science.

Background and Problem Statement

North Carolina State University policies require specific content for course syllabi to help ensure consistent, clear communication of course information to students. However, creating or revising a course syllabus to meet updated university policies can be tedious, and instructors may often miss small updates to mandatory texts the university may require in a course syllabus. There is additional tediousness in updating a course’s schedule each semester. In addition, the UGCC must review and approve course syllabi as part of the process for course actions and reviewing newly proposed special topics courses. Providing feedback or resources to guide syllabus updates can be time-consuming and repetitive, especially when multiple syllabi require the same feedback and updates to meet university policies.

Project Description

The UGCC would like a web application to facilitate the creation, revision, and feedback process for course syllabi for computer science courses at NCSU. An existing web application enables access to syllabi for users from different roles, including UGCC members, UGCC Chair, and course instructors (where UGCC members can also be instructors of courses). The UGCC members are able to add/update/reorder/remove required sections for a course syllabus, based on the university checklist for undergraduate course syllabi. Instructors are able to use the application to create a new course syllabus, or revise/create a new version of an existing course syllabus each semester. The tool provides the functionality for review comments and resolution, important dates (e.g., holidays and wellness days), and the inclusion of schedule information.

We are building on an existing system. The focus this semester will support template item editing and propagation, syllabus duplication with schedule updates, and front-end refactoring and automated testing.

New features include:

- Propagate template edits to syllabi based on the current template version. The propagation should be automatic for any non-editable blocks. There will need to be a process for conflict management that could be handled programmatically or with user intervention.

- Full implementation of syllabus duplication that will copy a syllabus for the next semester. This should include updating the course’s schedule to the new semester’s dates and avoiding important semester dates where classes are not held. A robust solution (as a stretch goal) would handle a class that is moving days (e.g., M/W to T/H or 2 days per week to 1 day per week).
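The schedule update in the duplication feature can be sketched as follows. This is a minimal illustration in Python, not the existing codebase's Java implementation; the function names, the weekday encoding, and the dates in the usage note are all assumptions made for the example.

```python
from datetime import date, timedelta


def meeting_dates(first_day, last_day, weekdays, no_class_days):
    """Return every class meeting date in [first_day, last_day].

    weekdays uses date.weekday() numbering (Monday=0 ... Sunday=6).
    no_class_days is a set of dates on which classes are not held.
    """
    meetings = []
    day = first_day
    while day <= last_day:
        if day.weekday() in weekdays and day not in no_class_days:
            meetings.append(day)
        day += timedelta(days=1)
    return meetings


def remap_schedule(topics, new_dates):
    """Pair the old syllabus's ordered topics with the new meeting dates.

    Topics that no longer fit are returned separately so an instructor can
    resolve them by hand instead of having them silently dropped.
    """
    scheduled = list(zip(new_dates, topics))
    overflow = topics[len(new_dates):]
    return scheduled, overflow
```

For example, `meeting_dates(date(2026, 1, 12), date(2026, 1, 23), {0, 2}, {date(2026, 1, 19)})` yields the Monday/Wednesday meetings in that range except the excluded Monday. Because the weekdays are a parameter, a course that moves from M/W to T/H only needs a different set (`{1, 3}`); a change in the number of meetings per week shows up as a non-empty `overflow` list that needs user intervention.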

Process improvements include:

- Refactoring front-end and adding automated front-end testing

- GitHub Actions that will run tests and report statement coverage

- Scripts to support VM deployment

Technologies and Other Constraints

- Technologies are based on extending the existing codebase, which uses:

- Docker

- Java (for the backend)

- PostgreSQL

- JavaScript (for the frontend)

- The software must be accessible and usable from a web browser

Sponsor Background

Blue Cross and Blue Shield of North Carolina (Blue Cross NC) is the largest health insurer in the state, serving ~5 million members with ~5,000 employees across Durham and Winston‑Salem. Since 1933, Blue Cross NC has focused on making healthcare better, simpler, and more affordable, and on tackling the state’s most pressing health challenges to drive better outcomes for North Carolinians.

Background and Problem Statement

Employer HR/Benefits administrators need a straightforward way to perform member maintenance for group insurance: adding subscribers and dependents, terminating members, updating demographics and contact information, and handling effective dating (including retroactive changes). Current processes are often fragmented across multiple tools and require manual interpretation of complex policy/business rules.

Project Description

Students will build an end‑to‑end system—front to back—that demonstrates a clean user experience on the front end with server-side rendering and Micro UIs. They will include a lightweight AI policy assistant that provides real-time, non-binding guidance during HR workflows. Users can chat to describe their task, and the assistant surfaces relevant policy impacts and prompts for confirmation on downstream effects.

Technologies and Other Constraints

The project uses Vue with Nuxt 3 (SSR) for the frontend, adopting a micro‑frontend architecture via Vite Module Federation. The backend runs on Java with Quarkus, exposes GraphQL APIs, and supports any relational database (PostgreSQL recommended). Constraints include synthetic/mock data only and deployment preferably on OpenShift/Kubernetes. Stretch goals are observability (OpenTelemetry + Tempo), TLS encryption, and SSO with Ping OAuth.

Sponsor Background

The Campus Writing & Speaking Program (CWSP), within the Office for Faculty Excellence (OFE), supports faculty in embedding oral, written, and digital communication across the curriculum. Co-directed by Dr. Kirsti Cole and Dr. Roy Schwartzman, with Senior Strategic Advisor Dr. Chris Anson, CWSP leads the Writing & Speaking Enriched Curriculum (WSEC) and ACI initiatives. Its work has helped position NC State as a top US university for writing-in-the-disciplines.

Background and Problem Statement

Phase I delivers the CWSP ACI Certificate Companion, a unified system for faculty to track progress, store artifacts, and submit capstones.

Phase II expands this foundation by creating a Student Partner Program that connects undergraduates with ACI faculty projects, while also layering in advanced innovation features.

Key possibilities include:

- Student Engagement: Students join faculty certificate projects, contribute artifacts, and log reflections for recognition (badges, micro-credentials).

- Enhanced Feedback: Faculty, mentors, and students interact through threaded feedback, rubrics, and collaborative comments.

- Knowledge Commons: The resource repository expands into a faculty-student knowledge base of assignments, prompts, rubrics, and sample projects.

- Digital Communication Innovation: The platform pilots a home-grown bot to guide faculty and students in using LLMs effectively and ethically. This bot could operate in multiple modes:

- “Digital Hand” – a step-by-step scaffolder for drafting and revision.

- Game-based tutorial mode – scenario-driven experiments with AI outputs.

- Reflective companion – prompting users to interrogate ethics, accessibility, and rhetorical appropriateness.

Phase II is visionary but realistic: it leverages the Phase I system, introduces a student role, and layers in future-facing features that distinguish NC State as a leader in communication innovation.

Project Description

Vision. Extend the platform into a multi-role ecosystem where faculty and students collaborate, supported by integrated resources and innovative digital tools.

MVP Features

- Student Partner role and opportunity board.

Faculty can post structured opportunities (e.g., syllabus pilot support, oral workshop assistance, digital portfolio testing). Students browse, sign up, and get matched to projects.

- Matching and approval workflow.

Mentors/admins oversee a lightweight matching process to ensure projects are appropriate and workloads are balanced. Students see a clear status update (applied, approved, active).

- Student reflection and artifact logging.

Students log contributions and upload artifacts alongside guided reflection prompts (mirroring Phase I’s faculty reflection prompts), reinforcing the habit of metacognition in communication practice.

- Faculty dashboards with student integration.

Dashboards show both faculty progress and student contributions, enabling faculty to see how their partners are engaging with their project.

- Resource repository expansion.

The repository becomes a shared knowledge commons, where both faculty and students can upload, tag, and reuse assignments, rubrics, and digital tools. Resources are filterable by role and certificate track.

Stretch Goals

- Student achievement badges and certificates.

Undergraduate partners earn digital micro-credentials (badges or certificates) for completing engagements, aligning their contributions with NC State’s broader recognition of experiential learning.

- Peer-to-peer feedback with threaded discussions.

Students and faculty can comment on each other’s artifacts in discussion-style threads, introducing collaborative critique that mirrors writing center and studio practices.

- Integrated showcase gallery.

Builds on the Phase I micro-showcase by combining faculty capstones and student projects into a browsable gallery, with search and tagging by certificate track, communication mode, or skill.

- Interactive digital communication bot (pilot).

A home-grown bot scaffolds ethical and effective LLM use in digital communication. Possible modes include:

- Digital Hand: guided, step-by-step support for drafting and revising.

- Game-based Tutorial: scenario-driven activities where users test prompting strategies.

- Reflective Companion: prompts that nudge users to evaluate tone, ethics, accessibility, and rhetorical choices.

- Analytics dashboards.

Visual dashboards summarize program outcomes: faculty completion rates, student engagement hours, resource usage, and patterns of feedback. These dashboards help CWSP assess impact and demonstrate institutional value.

Fit for Senior Design. The project requires scalability, permissions logic, and user-experience innovation, and it provides opportunities for backend, frontend, AI integration, and UX design work.

Timeline and scope for the Spring 2026 semester to be determined with sponsors.

Technologies and Constraints

- React/Next.js; Node/Express or FastAPI; PostgreSQL.

- Must ensure backward compatibility with Phase I features.

- AI/bot prototype can be sandboxed using open APIs, but should remain lightweight, ethical, and accessible.

- Scalable design for future expansion (graduate mentors, cross-college programs).

Sponsor Background

Jenn Woodhull-Smith is a lecturer in the Poole College of Management and has developed open source textbooks for several entrepreneurship courses on campus. The creation of open source micro-simulations based on the course content will not only enhance student learning in and outside of the classroom, but also provide a cost effective learning tool for faculty and students.

Background and Problem Statement

Currently, there is a significant lack of freely accessible simulations that effectively boost student engagement and enrich learning outcomes within educational settings. Many existing simulations are typically bundled with expensive textbooks or necessitate additional purchases. An absence of interactive simulations in an Entrepreneurship course diminishes student engagement, limits practical skill development, and provides a more passive learning experience focused on theory rather than real-world application. This can reduce motivation and readiness for entrepreneurial challenges post-graduation.

Our primary goal is to develop an open source simulation platform that initially supports the MIE 310 Introduction to Entrepreneurship course, but could be later made accessible to all faculty members at NC State and eventually across diverse educational institutions.

Project Description

The envisioned software tool is a versatile open source tool designed to create visual novel-like mini-simulations with content and questions related to a certain course objective. The intent is to empower educators to be able to create their own simulations on a variety of different topics. Faculty will be able to develop interactive learning modules tailored to their teaching needs. This tool needs to be able to export grades, data, and other relevant information based on the following requirements:

- Introduce the scenario in a welcome screen, including text and images

- Have an end-of-simulation screen that displays the user’s grade

- Allow for multiple simulations to be run back to back

- Export grades and user data to a database to be used by Moodle to grade the student

- Update system design

- Create a website for students to access the simulation by logging in with their NCSU credentials

Technologies and Other Constraints

Suggestions for the Spring 2026 team are the following:

- Performance and Technical Debt:

- Sequential data fetches are blocking; allow them to run concurrently

- Project structure should be refactored to clear out legacy and unused code

- Prepare for a production environment:

- Ensure all error handling is up to par

- Set up monitoring

- Test for accessibility

- Completely Open-Source the Project for other Universities to use

- Connect LTI with Canvas
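The suggestion about blocking sequential fetches can be illustrated with a small sketch. It is written in Python with `asyncio` purely for illustration; the `fetch` function and the three resource names are hypothetical stand-ins, and in the project's TypeScript stack `Promise.all` plays the role that `asyncio.gather` plays here.

```python
import asyncio
import time


async def fetch(name, delay):
    """Stand-in for one data fetch; asyncio.sleep simulates network latency."""
    await asyncio.sleep(delay)
    return f"{name}-data"


async def load_sequential():
    # Each await must finish before the next fetch even starts.
    user = await fetch("user", 0.1)
    sim = await fetch("simulation", 0.1)
    grades = await fetch("grades", 0.1)
    return [user, sim, grades]


async def load_concurrent():
    # gather starts all three fetches at once and returns results in call order.
    return await asyncio.gather(
        fetch("user", 0.1),
        fetch("simulation", 0.1),
        fetch("grades", 0.1),
    )


def timed(coro_fn):
    """Run a coroutine function to completion and report elapsed seconds."""
    start = time.perf_counter()
    result = asyncio.run(coro_fn())
    return result, time.perf_counter() - start
```

Both loaders return the same data, but the concurrent version takes roughly as long as the slowest fetch rather than the sum of all three. This only helps when the fetches are independent; a fetch that needs the result of another still has to wait for it.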

Technologies used in prior semesters include:

- Frontend: React, Tailwind CSS, Docker, TypeScript

- Backend: NextAuth.js, TypeScript, Docker, Google Cloud, Moodle, Google Classroom, MongoDB

Sponsor Background

Decidio uses Cognitive Science and AI to help people make better decisions and to feel better about the decisions they make. We plan to strategically launch Decidio into a small network fanbase, then grow it deliberately, methodically and through analytics into a strong and accelerating network.

Background and Problem Statement

Consumer platforms live or die by their ability to solve the Cold Start Problem. We require tooling to simulate, interrogate, and forecast network formation under different strategic assumptions so that (1) execution aligns tightly with model predictions, (2) investor communication is concrete and falsifiable, and (3) once launched, live telemetry can be visualized against projections for adaptive steering.

Project Description

The solution includes the implementation of a Domain Specific Language embedded in a Common Lisp REPL and a visualization engine provided through a webapp. The DSL will be provided and is designed to closely mirror the thinking process of membership network creation experts and strategists. Lisp will be the supporting language with its highly interactive Read-Eval-Print-Loop and IDE ecosystem. All of Lisp's capabilities will be directly exposed to the user so they can create anything from simple imperative simulations (scripts) to applicative algorithms to functional schemes, etc. The webapp will be responsible for presenting the visualizations in extremely sophisticated and polished ways. (This is not a behind-the-scenes utility.)

Technologies and Other Constraints

Steel Bank Common Lisp (SBCL) will be used exclusively, along with items like QuickLisp for package management and preferably Emacs + Slime for the IDE. Technologies under consideration for bridging the REPL to server and/or webapp include drakma, hunchensocket, woo + woo-websock, clws and websocket-driver. Strong preferences for the bridge will be taken into consideration; otherwise, Decidio will provide initial guidance. The visualization engine should run purely on the server and/or webapp client. The stack will be standard full-stack TypeScript/JavaScript, CSS, HTML and Node.js. The visualization itself will be a Temporal Force-Directed Graph (similar to https://observablehq.com/@d3/temporal-force-directed-graph) with full playback capability. For this we recommend using D3.js.
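To make "full playback capability" concrete, the sketch below shows one way a playback frame could be computed from time-stamped graph data. It is an illustration in Python, not part of the proposed Lisp/TypeScript stack, and the record layout (`id`, `source`, `target`, `start`, `end`) is an assumption; the source does not specify the format the DSL will emit.

```python
def frame_at(nodes, links, t):
    """Return the nodes and links that exist at playback time t.

    Each record carries a half-open lifetime [start, end); a link is shown
    only when both of its endpoints are also alive at t.
    """
    alive = {n["id"] for n in nodes if n["start"] <= t < n["end"]}
    visible_links = [
        link for link in links
        if link["start"] <= t < link["end"]
        and link["source"] in alive
        and link["target"] in alive
    ]
    return sorted(alive), visible_links


# Hypothetical simulation output: three members joining over time.
NODES = [
    {"id": "a", "start": 0, "end": 10},
    {"id": "b", "start": 2, "end": 10},
    {"id": "c", "start": 5, "end": 8},
]
LINKS = [
    {"source": "a", "target": "b", "start": 3, "end": 10},
    {"source": "b", "target": "c", "start": 5, "end": 8},
]
```

Stepping `t` forward and re-rendering the result of `frame_at(NODES, LINKS, t)` gives playback; scrubbing backward works the same way because each frame is computed from the full history rather than from the previous frame.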

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Background

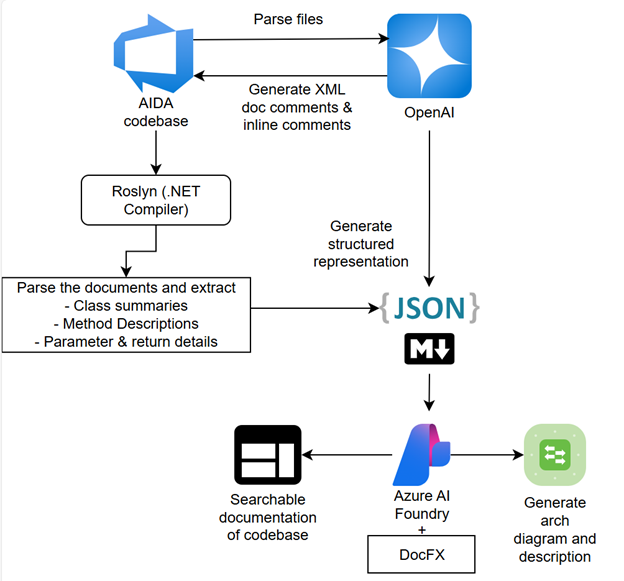

Hitachi Energy develops advanced transformer design optimization software, including AIDA, to support engineering teams in creating efficient and reliable solutions. Comprehensive documentation is critical for maintainability, onboarding, and accelerating development cycles.

Background and Problem Statement

The AIDA codebase, written in C#, currently lacks adequate documentation, making it challenging for developers to understand class structures, methods, and parameters. Manual documentation is time-consuming and prone to inconsistencies. There is a need for an automated, AI-driven approach to generate accurate and searchable documentation for the entire codebase.

Project Description

The goal of this project is to automate documentation generation for the AIDA C# codebase using AI and modern tooling:

- Parse C# files with OpenAI to generate XML doc comments and inline explanations for missing documentation.

- Leverage Roslyn to extract structured metadata:

- Class summaries

- Method descriptions

- Parameter and return details

- Aggregate extracted data into JSON or Markdown format for portability.

- Use OpenAI and DocFX to transform this data into searchable, user-friendly documentation for the entire codebase.
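The aggregation step can be sketched as a small transformation from a JSON record to Markdown. The sketch is in Python for brevity; the JSON schema, the class name `SampleCalculator`, and its contents are hypothetical and only stand in for whatever a Roslyn-based extractor would actually emit.

```python
import json


def render_markdown(class_meta):
    """Render one class's extracted metadata as a Markdown section."""
    lines = [f"## {class_meta['name']}", "", class_meta["summary"], ""]
    for method in class_meta["methods"]:
        lines.append(f"### {method['name']}")
        lines.append("")
        lines.append(method["description"])
        lines.append("")
        for param in method["parameters"]:
            lines.append(
                f"- `{param['name']}` ({param['type']}): {param['description']}"
            )
        lines.append(f"- Returns: {method['returns']}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


# Hypothetical record; the field names are assumptions, not a fixed schema.
SAMPLE = json.loads("""
{
  "name": "SampleCalculator",
  "summary": "Computes losses for a candidate design.",
  "methods": [
    {
      "name": "ComputeLoss",
      "description": "Returns the total loss in watts.",
      "parameters": [
        {"name": "current", "type": "double", "description": "RMS current in amperes."}
      ],
      "returns": "double"
    }
  ]
}
""")
```

Keeping JSON as the intermediate form means the same extracted metadata can feed a Markdown renderer like this one, a documentation site generator, or a review screen where developers accept or override the generated text.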

This solution will ensure maintainability, improve developer productivity, and provide a scalable approach for future projects.

A high-level view of the proposed solution is as shown below:

Expected Outcomes and Benefits

- Comprehensive Documentation: Automatically generate XML doc comments and inline explanations for all classes and methods.

- Improved Developer Efficiency: Reduce onboarding time and accelerate feature development by providing clear, searchable documentation.

- Traceability and Consistency: Ensure uniform documentation standards across the codebase.

- Scalable Solution: Create a repeatable process for other C# projects within Hitachi Energy.

- Student Learning Benefits:

- Hands-on experience with AI-assisted code parsing and documentation generation.

- Exposure to Roslyn for code analysis and DocFX for documentation publishing.

- Practical understanding of integrating OpenAI APIs into enterprise workflows.

- Opportunity to work on a high-impact project improving software maintainability.

Technologies and Other Constraints

Develop the solution as a SaaS tool hosted on Microsoft Azure, leveraging OpenAI APIs, Roslyn, and DocFX within the appropriate technology stack. While designed for automated documentation generation with minimal human intervention, incorporate a human-in-the-loop approach to allow developers to review, provide feedback, and override AI-generated comments and summaries.

Students will be required to sign over IP to sponsors when the team is formed.

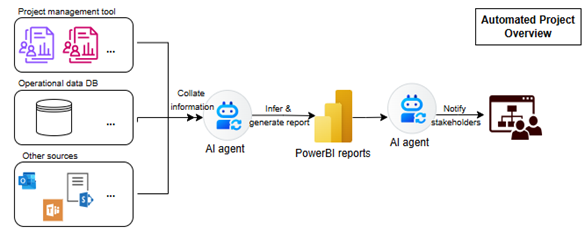

Sponsor Background

Hitachi Energy serves customers in the utility, industry, and infrastructure sectors with innovative solutions and services across the value chain. Together with customers and partners, we pioneer technologies and enable the digital transformation required to accelerate the energy transition toward a carbon-neutral future.

Background and Problem Statement

Hitachi Energy specializes in traction transformers designed for transportation applications. These transformers deliver high uptime, safety, and reduced energy costs through superior efficiency and lightweight construction. They are engineered for resilience in harsh environments and unstable grids, ensuring low maintenance and reliable performance.

Currently, tracking the status of projects within the Traction Transformer portfolio requires manual collation of data from multiple sources (project management tools, emails, spreadsheets). This process is maintained in Excel, which is not scalable and consumes significant human resources.

Project Description

The objective of this project is to develop an automated AI-driven pipeline that:

- Aggregates project status data from diverse sources (tools, emails, spreadsheets).

- Parses and infers insights from the collected data for actionable intelligence.

- Generates dynamic Power BI dashboards for real-time project tracking.

- Implements a custom notification engine to deliver tailored updates to relevant stakeholders.

This solution will streamline project monitoring, reduce manual effort, and enhance decision-making through timely and accurate information.

A high-level view of the proposed solution is as shown below:

Expected Outcomes and Benefits

- Improved Efficiency: Reduce manual effort and time spent on project tracking by automating data aggregation and reporting.

- Real-Time Visibility: Enable stakeholders to access up-to-date project status through interactive dashboards.

- Proactive Communication: Deliver timely, customized notifications to relevant teams, improving collaboration and responsiveness.

- Scalability: Create a solution that can handle multiple projects and diverse data sources without additional overhead.

- Student Learning Benefits:

- Hands-on experience with AI-driven automation and data engineering.

- Exposure to Power BI for visualization and reporting.

- Practical understanding of integrating cloud services and notification systems.

- Opportunity to work on a real-world problem with measurable business impact.

Technologies and Other Constraints

Develop the solution as a SaaS tool hosted on MS Azure, leveraging the appropriate technology stack. While designed for minimal human intervention, incorporate a human-in-the-loop approach to allow feedback and override AI decisions.

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Background

Progress Software is a global enterprise software company that builds tools and platforms used by developers and organizations worldwide to create, deploy, and operate modern applications. As part of our ongoing focus on innovation and AI-driven transformation, this project is sponsored by Progress’s centralized Software Development Lifecycle (SDLC) organization, which is responsible for evolving how 1,000+ engineers across the company build software—exploring how AI, automation, and agent-based systems can meaningfully improve developer productivity, software quality, and delivery speed.

Background and Problem Statement

Progress engineering teams work across multiple CI/CD tools—Jenkins, GitHub Actions, Harness, Azure DevOps, and Buildkite—each with different conventions, security controls, and maturity levels. Engineers are often asked to perform tasks in a tool they don’t know (X) even though they are proficient in another (Y). While our SDLC strategy emphasizes Pipelines-as-Code, reusable components, and embedded governance, there is no capability that guides engineers step-by-step to implement changes “the Progress way” in unfamiliar tools.

This gap leads to slower onboarding, uneven compliance, duplicated effort, and inconsistent application of shared modules/templates. The problem is not a lack of standards or inner source assets—it’s making those assets immediately actionable, with guided translation from the engineer’s starting point to the target tool and pipeline pattern.

Project Description

Design and prototype an AI assistant that helps engineers complete real CI/CD tasks in any of our pipeline tools by translating from what they already know (Y) to what they need to do (X) while enforcing Progress best practices.

What the assistant will do:

- Guided Translation (Y→X): Map familiar pipeline concepts (e.g., Azure DevOps YAML stages) to the target tool (e.g., Harness pipelines), providing side-by-side examples and recommended Progress-approved modules.

- Practice-Aware Recommendations: Surface the right reusable template, shared step, security scan, or policy gate at the moment of need; explain why each control exists.

- Task Walkthroughs: Generate a checklist and code snippets (YAML, JSON, shell) to complete tasks like “create a common Harness module” or “migrate build/test steps to a reusable GitHub Action,” with inline rationale tied to our standards.

- Compliance by Design: Embed Progress governance (license checks, vulnerability scanning, quality gates) into the suggested workflow so implementations align with policy from the start.

Example Use Case:

“I know Azure DevOps in MOVEit. Help me write and roll out a new common module in Harness the Progress way—explain each step in Azure DevOps terms so I learn while doing.”

Outcomes for end users: Faster, compliant delivery; reduced support burden on experts; increased reuse of golden modules; improved SDLC scorecard metrics (lead time, deployment frequency, change failure rate).

Technologies and Other Constraints

Preferred technologies (flexible based on availability):

- LLM & Orchestration: Azure OpenAI (or open-source LLM), LangChain/LlamaIndex for tool-aware workflows.

- Knowledge & Retrieval (RAG): Indexed Progress materials (standards, templates, inner source repos) from SharePoint/Confluence/GitHub; vector store for semantic search.

- CI/CD Integrations:

- GitHub Actions (REST/GraphQL APIs, reusable workflows)

- Harness (Pipelines API, modules)

- Azure DevOps (Pipelines API)

- Jenkins (Pipeline libraries)

- Buildkite (APIs)

- Governance & Quality: OPA/Rego for policy gates; Black Duck and SonarQube integration points.

- Implementation Stack: TypeScript or Python; containerized service; minimal web UI (React or simple Flask/FastAPI) for prompts, walkthroughs, and snippet output.

- Auth & Access: Entra ID/Azure AD for authentication (mocked or sandboxed for student use).

Constraints and guidance:

- Use publicly shareable or synthetic datasets for standards/examples; do not access confidential Progress material in the student environment.

- Focus on pipeline tasks (not all SDLC tools) to ensure the scope fits a semester.

- Deliver a working prototype with at least two tool mappings (e.g., Azure DevOps → Harness; Jenkins → GitHub Actions).

- Include evaluation metrics (task completion time, governance coverage, reuse of shared modules) in the demo.

Sponsor Background

Siemens Healthineers develops innovations that support better patient outcomes with greater efficiencies, enabling healthcare providers to meet the clinical, operational, and financial challenges of a rapidly changing healthcare landscape. As a global leader in medical imaging, laboratory diagnostics, and healthcare information technology, Siemens Healthineers has deep expertise across the entire patient care continuum, from prevention and early detection to diagnosis and treatment.

Within Siemens Healthineers, the Managed Logistics organization supports service engineers who perform planned and unplanned maintenance on imaging and diagnostic equipment worldwide. The Managed Logistics team plays a critical role in ensuring that the right replacement parts reach the right engineer at the right place and time, directly contributing to reliable patient care.

Background and Problem Statement

Siemens Healthineers’ software developers and data analysts rely on a large and growing body of internal documentation, including OneNote notebooks, PDF documents, and code repositories. Over time, this information has become difficult to search and navigate, leading to siloed knowledge, inconsistent understanding across the team, and challenges onboarding new team members efficiently.

Currently, finding relevant information often requires manual searching, asking colleagues for guidance, or relying on institutional memory. These approaches can be time-consuming and prone to misunderstandings. As teams and documentation continue to grow, there is a clear need for a more effective way to surface relevant internal knowledge and ensure that team members share a common, accurate understanding of processes, systems, and best practices.

Project Description

The goal of this project is to design and build an Internal Knowledge Companion: a web-based, AI-powered assistant that helps internal users quickly find, understand, and reference information contained within Siemens Healthineers’ internal documentation.

The envisioned solution will leverage retrieval-augmented generation (RAG) techniques, combining an open-weight large language model with a document retrieval system. Rather than training a language model from scratch, the system will retrieve relevant content from internal documents at query time and use the language model to generate clear, context-aware responses grounded in those sources.
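The retrieve-then-generate flow described above can be sketched in a few lines. This is a toy illustration in Python: it substitutes bag-of-words cosine similarity for the embedding-based vector search a real system would likely use, it stops at building the prompt rather than calling a model, and the function names and document texts are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=2):
    """Return up to k (source, text) pairs most similar to the query."""
    q = Counter(tokenize(query))
    scored = [
        (cosine(q, Counter(tokenize(text))), source, text)
        for source, text in documents.items()
    ]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(source, text) for score, source, text in scored[:k] if score > 0]


def build_prompt(query, passages):
    """Assemble a grounded prompt that asks the model to cite its sources."""
    context = "\n".join(f"[{source}] {text}" for source, text in passages)
    return (
        "Answer using only the sources below and cite them by name.\n"
        f"{context}\n"
        f"Question: {query}"
    )


# Hypothetical documents standing in for indexed internal content.
DOCS = {
    "escalation.md": "Order escalation process: open a ticket, then notify the logistics lead.",
    "forecast.md": "The lead-time forecasting data model is defined in the planning repository.",
    "onboarding.md": "New team members request laptop access on their first day.",
}
```

Adding a document only means adding an entry to the index (`DOCS` here); the language model itself is untouched, which is the property that lets the system grow without retraining. Carrying the source name alongside each passage is what makes citations in the final answer possible.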

Example use cases include:

- A new team member asking, “How does our order escalation process work?” and receiving a concise answer with links or citations to the original documentation.

- A developer querying, “Where is the data model for lead-time forecasting defined?” and being pointed to relevant design documents and repositories.

- Analysts asking domain-specific questions and receiving answers supported by source attribution to reduce ambiguity and build trust.

The system will be designed to adapt over time, allowing new documents to be added without retraining the language model. By improving information accessibility and consistency, the Internal Knowledge Companion will enhance onboarding, reduce time spent searching for information, and help teams stay aligned.

Technologies and Other Constraints

Requirements:

- Retrieval-Augmented Generation (RAG) architecture

- Web-based user interface

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Background

Alen Baker is a 1973 graduate of the NCSU CSC department, and 2017 Hall of Fame inductee. He sponsored multiple Senior Design teams over his 20 years employed by Duke Energy. After retiring, he established the Fly Fishing Museum of the Southern Appalachians in Bryson City. He is currently developing a non-profit fly fishing center to support non-profit organizations with education and activities that utilize fly fishing as a means of recovery and enrichment, including programs for veterans with PTSD, cancer patients, foster youth, and scouting merit badges.

Background and Problem Statement

The Cap Wiese Fly Fishing Center resides within the historic Patterson School Campus. The private middle to high school closed in 2009 and reopened as a community education center for various education opportunities such as STEM camps and conservation education. In 2024, Alen Baker proposed that the campus be open to fly fishing organizations that “give back” via fly fishing instruction and support to those that have an interest in fly fishing as a recovery mechanism as well as conservation. Fly fishing activities are spread among multiple classrooms as well as the campus bodies of water. The campus is 1400 acres in total. This makes it difficult to know and record who visits the campus and where they are located. Obtaining a liability waiver and the identity of anglers utilizing the facilities is a challenge, especially with the limited office hours. Automation would allow a check-in process to be available 24/7. Digital records would allow for usage analysis.

Project Description

This project will replace the existing manual campus access procedures with a fully automated, easy-to-use QR-activated cellular phone application. Essential functions include creating and maintaining an identity record for each individual who has physical contact with the campus, as well as obtaining signed waivers for both adults and minors. Upon access, a notification will be issued to the administrative office and a record of the event will be posted for future viewing and analysis.

Desired data for retention and functional use may include identity records of individuals including selected specifics that profile for fly fishing interests, signed waivers for liability release, event details, organizational partnerships and linked inventory records related to applicable assets.

Technologies and Other Constraints

In addition to Google technologies, the student team will employ software to create and deploy a mobile app, including software to read QR codes.

Sponsor Background

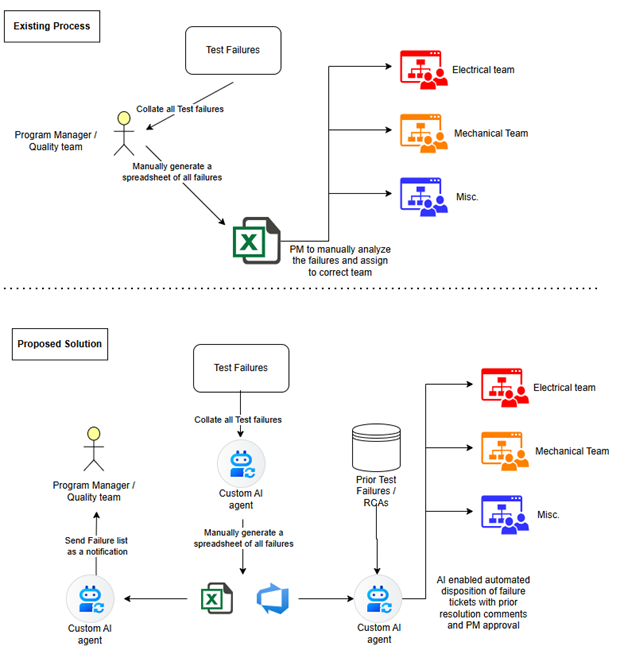

Hitachi Energy is committed to delivering high-quality products and services across the energy value chain. Ensuring product reliability and compliance with stringent quality standards is critical to maintaining customer trust and operational excellence.

Background and Problem Statement

Quality teams currently manage disposition workflows and analyze historical failure data through manual processes and fragmented tools. This approach increases the risk of overlooking test failures, delays in corrective actions, and limited traceability of issues. There is a need for an intelligent, automated system that not only streamlines workflows but also provides transparent reasoning behind AI-driven recommendations.

Project Description

The objective of this project is to develop an AI-enabled Quality Support Platform that:

- Automates disposition workflows for test failures and quality checks.

- Provides historical failure insights to guide decision-making and improve reliability.

- Delivers transparent AI recommendations with drill-down capabilities for root cause analysis.

- Integrates with existing quality systems for seamless adoption and scalability.

This solution will empower quality teams with actionable insights, reduce manual effort, and enhance overall product quality.

A high-level view of the current and proposed solution is as shown below.

Expected Outcomes and Benefits

- Reduced Missed Failures: Minimize chances of overlooking test failures through automated detection and alerts.

- Workflow Automation: Streamline dispositioning and status tracking to improve efficiency.

- Enhanced Traceability: Ensure complete visibility of issues and corrective actions for compliance and audits.

- Improved Quality: Leverage historical data and AI-driven insights to proactively address recurring problems.

- Learning Benefits:

- Hands-on experience with AI for quality assurance and workflow automation.

- Exposure to explainable AI techniques for transparent recommendations.

- Practical skills in integrating AI solutions with enterprise systems.

- Opportunity to contribute to a high-impact project improving operational excellence.

Technologies and Other Constraints

Develop the solution as a SaaS tool hosted on MS Azure, leveraging the appropriate technology stack. While designed for minimal human intervention, incorporate a human-in-the-loop approach to allow feedback and override AI decisions.

Students will be required to sign over IP to sponsors when the team is formed.

Sponsor Background

Hitachi Energy is a global leader in transformers and digital solutions. The CoreTec is a piece of Linux hardware which monitors transformer health using data from sensors. It is critical for the transformer ecosystem because it allows operators to track and fix issues before they become larger problems.

Background and Problem Statement

The CoreTec currently uses a basic hardware watchdog to maintain system stability. In general, the purpose of a hardware watchdog is to restart a system whenever a critical issue occurs.

With the current implementation, the hardware watchdog toggles a GPIO (General Purpose Input/Output) flag every second. If the flag is not toggled within a certain time interval, the watchdog assumes there is a critical issue and triggers a hardware restart.

This type of hardware watchdog is effective for detecting system faults, but it does not monitor process-level failures or system resource usage. It would be beneficial for the CoreTec to have a more fully-featured watchdog.

Project Description

The goal of this project is to implement a software watchdog which monitors system resources and processes. The project will improve system resilience and provide more granular control over issue recovery.

Technical Details:

- The watchdog must monitor CPU usage, memory usage, and incoming zeroMQ messages for a set of configurable processes. Then, when usage/cycle thresholds are met, the watchdog should execute a configurable EXEC command.

- The software watchdog should toggle a bit on every cycle to indicate its health. Then, the existing hardware watchdog should check the bit and conduct a power cycle if the bit has not changed over a set time period.

- The application must be integrated with systemd and run indefinitely as a system service. Systemd is a system and service manager which typically handles system boot, processes, and system resources.

- All watchdog configuration settings must be stored in a JSON file which can be edited by the developers. A user interface for configuration is not part of this project.
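
The threshold and health-bit behavior described in the bullets above can be prototyped before committing to the required C++ toolchain. The following Python sketch is only an illustration of that logic, not the project's implementation. The names `ProcessRule`, `Watchdog`, `cycles_before_action`, and the other fields are hypothetical; reading "usage/cycle thresholds" as a count of consecutive over-limit cycles is an assumption; ZeroMQ message monitoring and the actual execution of commands are left out.

```python
from dataclasses import dataclass


@dataclass
class ProcessRule:
    """Hypothetical per-process settings; the project would load these from the JSON file."""
    name: str
    max_cpu_percent: float
    max_rss_mb: float
    cycles_before_action: int  # assumed meaning: consecutive over-limit cycles tolerated
    exec_command: str


class Watchdog:
    def __init__(self, rules):
        self.rules = {rule.name: rule for rule in rules}
        self.bad_cycles = {rule.name: 0 for rule in rules}
        self.heartbeat_bit = 0  # stands in for the bit read by the hardware watchdog

    def run_cycle(self, samples):
        """Evaluate one monitoring cycle.

        `samples` maps process name -> (cpu_percent, rss_mb) and must contain
        an entry for every configured rule. Returns the list of exec commands
        that should be run as a result of this cycle.
        """
        triggered = []
        for name, rule in self.rules.items():
            cpu, rss = samples[name]
            if cpu > rule.max_cpu_percent or rss > rule.max_rss_mb:
                self.bad_cycles[name] += 1
            else:
                self.bad_cycles[name] = 0  # a healthy cycle resets the counter
            if self.bad_cycles[name] >= rule.cycles_before_action:
                triggered.append(rule.exec_command)
                self.bad_cycles[name] = 0
        # Toggle the health bit once per completed cycle.
        self.heartbeat_bit ^= 1
        return triggered
```

In this sketch a process must exceed a limit on `cycles_before_action` consecutive cycles before its command is returned, which keeps a single CPU spike from triggering recovery. Whether the real watchdog should count consecutive or cumulative cycles is a design question for the sponsor.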

Technologies and Other Constraints

The technologies are required unless otherwise stated.

Development Environment

- Visual Studio 2022 (Community license)

- WSL Ubuntu 20.04

Programming Language

- C++ 17 or C++ 20

- Avoid C++ 23 or later due to possible deployment incompatibility

Build Tools

- cmake, version 3.27 or later

- gcc with the following flags:

- -Wall -Wextra -Wshadow -Wnon-virtual-dtor -pedantic

- The code should compile free of warnings.

Static Analysis Tools

- Clang-tidy (integrated with cmake)

- AddressSanitizer (ASan)

- Integrate address sanitizer in debug mode

- gcc -fsanitize=address,undefined

- Sonarcloud (optional)

External Libraries

- These libraries currently exist in the CoreTec’s codebase and will be necessary for the project.

- nlohmann modern JSON version 3.10.2

- zeroMQ version 4.3.4

- zeroMQ is a network library used in the CoreTec to send messages between different parts of the system

- https://github.com/zeromq/libzmq/tree/v4.3.4

- Note: Other external libraries must be formally agreed upon before usage

JSON File

- All watchdog settings must be stored in a single JSON configuration file. The JSON file should include settings for the watchdog itself and for each of the monitored processes. The project sponsor will share more information on it.

Other Tools and Constraints

- Use legacy libprocps version 3.x API (openproc/readproc) to sample per-PID CPU% and RSS.

- OS commands/utilities must be called using the Linux C/C++ API. Do not fork a shell or use system(), exec(), or other such calls.

- Note: Due to desired flexibility, forking a shell will be required to execute the JSON-configured exec commands on watchdog trigger (see JSON file guidelines).

- Reference https://www.man7.org/tlpi/ for more information. The book is available online and in print through the NCSU library (https://catalog.lib.ncsu.edu/catalog/NCSU4276484).

Students will be required to sign over IP to sponsors when the team is formed.

Sponsor Background

The Laboratory for Analytic Sciences is a research organization in support of the U.S. Government, working to develop new analytic tradecraft, techniques, and technology that help intelligence analysts better perform complex tasks. Processing large volumes of data is a foundational capability in support of many analysis tools and workflows. Any improvements to existing processes and procedures, whether they are measured in time, efficiency, or stability, can have significant and broad reaching impact on the intelligence community’s ability to supply decision-makers and operational stakeholders with accurate and timely information.

Background and Problem Statement

Artificial Intelligence models can now perform many complex tasks (e.g. reasoning, comprehension, decision-making, and content generation) which until recent years have only been possible for humans. Like humans though, an AI model generally works best on tasks that it was specifically trained to perform. While general-purpose models (often called foundational models, or pretrained models) can have surprisingly strong performance across a range of applications in their domain, they are typically outperformed within any particular subdomain by a model which was specifically trained for that more narrow subdomain. The most common approach to building these more specialized models is to start with a foundational or pretrained model, and then fine-tune it with a dataset in the more narrow subdomain so that the result is specifically trained, and hyper-focused, on that subdomain.

For example, consider the speech-to-text (STT) model Whisper from OpenAI. Out of the box, this model is capable of producing very accurate transcriptions over a wide range of speech audio recordings (i.e. those having differing languages, dialects, accents, noise environments, verbiage, etc). Now suppose that a user is only concerned with transcribing speech audio originating from a single environment and a single speaker, e.g. perhaps a recording of a professor’s lectures throughout a semester. This is a far more narrow subdomain of application. A data scientist could, of course, apply Whisper and move on to other projects. However, if squeezing out the best accuracy possible is deemed worth the effort, then that data scientist could consider fine-tuning a custom version of Whisper for this particular application.

To fine-tune Whisper, the data scientist would start by treating Whisper as a pretrained model, i.e. a starting point for the eventual model to be trained. Then the data scientist could gather a relatively small set of labeled data, meaning recordings that have been manually transcribed to provide ground-truth transcriptions. In the lecture recording example, this might mean going to class for the first week of the semester, recording the audio, and manually transcribing everything that was spoken. With this labeled dataset in hand, the next step would be to fine-tune Whisper. Finding an optimal fine-tuning procedure for an AI model can be very complex, and is perhaps both an art and a science, but general procedures are widely available. The result will be a fine-tuned Whisper variant that, in all likelihood, will produce more accurate speech-to-text results for future recordings of that professor’s class than the original Whisper model would. Importantly, this fine-tuned model will presumably perform worse than the original Whisper model on most other applications.

Working with previous senior-design teams, LAS has developed an online tool, TuneTank, to help streamline the process of fine-tuning a Whisper model to a given dataset. The tool is expected to make the fine-tuning process more efficient and its results more effective. However, the existing fine-tuning interface has only very basic support for evaluating a fine-tuned model or for selecting the model that is best suited to a particular dataset.

Project Description

To complement TuneTank, the LAS would like a Senior Design team to develop a Python program to evaluate Whisper fine-tunes. Given one or more Whisper models, the program should benchmark each model on several predetermined and user-specified datasets using metrics such as Levenshtein distance and word error rate. Once the benchmark is complete, the program should recommend the best overall Whisper model and the best Whisper model for special use cases (noisy data, multilingual data, etc.).
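
As a rough illustration of the metrics named above, the sketch below computes Levenshtein distance and word error rate (WER) in plain Python and picks the model with the lowest mean WER. It is a minimal sketch under stated assumptions, not the sponsor's design: the function names are hypothetical, transcripts are assumed to have been produced already (running Whisper is out of scope here), text normalization is limited to lowercasing, and averaging per-recording WER is only one of several reasonable aggregation choices.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (strings or lists of words)."""
    previous = list(range(len(hyp) + 1))
    for i, ref_item in enumerate(ref, start=1):
        current = [i]
        for j, hyp_item in enumerate(hyp, start=1):
            cost = 0 if ref_item == hyp_item else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution or match
        previous = current
    return previous[-1]


def word_error_rate(reference, hypothesis):
    """WER = word-level edit distance divided by the number of reference words."""
    ref_words = reference.lower().split()
    hyp_words = hypothesis.lower().split()
    if not ref_words:
        raise ValueError("reference transcript must contain at least one word")
    return edit_distance(ref_words, hyp_words) / len(ref_words)


def best_model(transcripts_by_model, references):
    """Return (model_name, mean WER) for the model with the lowest mean WER.

    `transcripts_by_model` maps a model name to its list of transcripts,
    given in the same order as `references`.
    """
    scores = {}
    for model, hypotheses in transcripts_by_model.items():
        rates = [word_error_rate(r, h) for r, h in zip(references, hypotheses)]
        scores[model] = sum(rates) / len(rates)
    winner = min(scores, key=scores.get)
    return winner, scores[winner]
```

Calling `best_model` once per dataset category (for example, a noisy subset or a multilingual subset) would give the per-use-case recommendations, while a call over all datasets would give the overall recommendation.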

The LAS will provide the team with one or more datasets to use for development and testing. The LAS will also provide the team with experienced mentors to assist in understanding the various AI aspects of this project, with particular regard to the fine-tuning methodologies to be implemented. However, this is a complex topic, so at least half the team should have a strong interest in machine learning/artificial intelligence.

Technologies and Other Constraints

The team will have great freedom to explore, investigate, and design the benchmarking system described above. However, the methodology employed should not have any restrictions (e.g. no enterprise licenses required). In general, we will need this technology to operate on commodity hardware and software environments, and only make use of technologies with permissive licenses (MIT, Apache 2.0, etc). Beyond these constraints, technology choices will generally be considered design decisions left to the student team. The LAS will provide the student team with access to AWS resources for development, testing and experimentation, including GPU availability for model training.

ALSO NOTE: Public distributions of research performed in conjunction with USG persons or groups are subject to pre-publication review by the USG. In the case of the LAS, typically this review process is performed with great expediency, is transparent to research partners, and is of little to no consequence to the students.

Sponsor Background

The Laboratory for Analytic Sciences is a research organization in support of the U.S. Government, working to develop new analytic tradecraft, techniques, and technology that help intelligence analysts better perform complex tasks. Processing large volumes of data is a foundational capability in support of many analysis tools and workflows. Any improvements to existing processes and procedures, whether they are measured in time, efficiency, or stability, can have significant and broad reaching impact on the intelligence community’s ability to supply decision-makers and operational stakeholders with accurate and timely information.

Background and Problem Statement

Artificial Intelligence models can now perform many complex tasks (e.g. reasoning, comprehension, decision-making, and content generation) which until recent years have only been possible for humans. Like humans though, an AI model generally works best on tasks that it was specifically trained to perform. While general purpose models (often called foundational models, or pretrained models) can have surprisingly strong performance across a range of applications in their domain, they are typically outperformed within any particular subdomain by a model which was specifically trained for that more narrow subdomain. The most common approach to building these more specialized models is to start with a foundational or pretrained model, and then fine-tune it with a dataset in the more narrow subdomain so that the result is specifically trained, and hyper-focused, on that subdomain.

For example, consider the speech-to-text (STT) model Whisper from OpenAI. Out of the box, this model is capable of producing very accurate transcriptions over a wide range of speech audio recordings (i.e. those having differing languages, dialects, accents, noise environments, verbiage, etc). Now suppose that a user is only concerned with transcribing speech audio originating from a single environment and a single speaker, e.g. perhaps a recording of a professor’s lectures throughout a semester. This is a far more narrow subdomain of application. A data scientist could, of course, apply Whisper and move on to other projects. However, if squeezing out the best accuracy possible is deemed worth the effort, then that data scientist could consider fine-tuning a custom version of Whisper for this particular application.