Projects – Spring 2025

Click on a project to read its description.

Sponsor Background

Aspida is a tech-driven, agile insurance carrier based in Research Triangle Park. We offer fast, simple, and secure retirement services and annuity products for effective retirement and wealth management. More than that, we’re in the business of protecting dreams; those of our partners, our producers, and especially our clients.

Background and Problem Statement

Our Operations team handles calls from external sales teams and direct clients. Often our Operations associates are new college grads with little experience. Often they are not able to solve a problem without assistance and sometimes require a call back. We have historical data which could be used as a knowledge base but it's unstructured and not searchable.

Project Description

Our project is to build an LLM using historical data – call logs, chat logs, product knowledge. The LLM will be utilized as an assistant for Operations associates to quickly resolve problems while on the phone with the customer. Further, we have some stretch goals in mind which could include automating a response using AI generated voice or text.

Technologies and Other Constraints

- Data sets – we are assuming data sets will be in JSON format

- LLM – we prefer using Claude with Bedrock on AWS

- Cloud Environment – AWS preferred

- UI – React

- Backend – Node.js

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Background

Through a collaboration between Poole College of Management and NC State University Libraries, we aim to create an innovative open educational resource. Jenn, a recipient of the Alt-Textbook Grant, has already developed an open source textbook for MIE 310 Introduction to Entrepreneurship. Our upcoming focus now involves creating open source mini-simulations as the next phase of this initiative.

Background and Problem Statement

Currently, there is a significant lack of freely accessible simulations that effectively boost student engagement and enrich learning outcomes within educational settings. Many existing simulations are typically bundled with expensive textbooks or necessitate additional purchases. An absence of interactive simulations in an Entrepreneurship course diminishes student engagement, limits practical skill development, and provides a more passive learning experience focused on theory rather than real-world application. This can reduce motivation and readiness for entrepreneurial challenges post-graduation.

Our primary goal is to develop an open source simulation platform that initially supports the MIE 310 course, but could be later made accessible to all faculty members at NC State and eventually across diverse educational institutions.

Project Description

The envisioned software tool is a versatile open source tool designed to create visual novel-like mini-simulations with content and questions related to a certain course objective. The intent is to empower educators to be able to create their own simulations on a variety of different topics. Faculty will be able to develop interactive learning modules tailored to their teaching needs. This tool needs to be able to export grades, data, and other relevant information based on the following requirements:

- Introduce the scenario in a welcome screen, including text and images

- Have an end of simulation screen that displays the users’ grade

- Allow for multiple simulations to be run back to back

- Export grades and user to a database to be used by Moodle to grade the student

- Update system design

- Create a website for students to access the simulation by logging in with their NCSU credentials

Technologies and Other Constraints

All resources used must be created with the open-source tools as the Alt-Textbook project is open source. For maintainability and extension, the software must be full stack web-based and not use a gaming engine.

Sponsor Background

The Undergraduate Curriculum Committee (UGCC) reviews courses (both new and modified), curriculum, and curricular policy for the Department of Computer Science.

Background and Problem Statement

North Carolina State University policies require specific content for course syllabi to help ensure consistent, clear communication of course information to students. However, creating a course syllabus or revising a course syllabus to meet updated university policies can be tedious, and instructors may often miss small updates of mandatory text that the university may require in a course syllabus. There is additional tediousness in updating a course’s schedule each semester. In addition, the UGCC must review and approve course syllabi as part of the process for course actions and reviewing newly proposed special topics courses. Providing feedback or resources for instructors to guide syllabus updates can be time consuming and repetitive, especially if multiple syllabi require the same feedback and updates to meet university policies.

Project Description

The UGCC would like a web application to facilitate the creation, revision, and feedback process for course syllabi for computer science courses at NCSU. An existing web application enables access to syllabi for users from different roles, including UGCC members, UGCC Chair, and course instructors (where UGCC members can also be instructors of courses). The UGCC members are able to add/update/reorder/remove required sections for a course syllabus, based on the university checklist for undergraduate course syllabi. Instructors are able to use the application to create a new course syllabus, or revise/create a new version of an existing course syllabus each semester.

We are building on an existing system. The focus this semester will be on adding the idea of schedule functionality to the syllabus tool. Additionally, there are several process improvements that should be made to support future deployment of the system.

New features include:

- Update how syllabus changes are handled. Instructors, course coordinators, and UGCC members should be able to make changes to syllabi for any given semester at any point. Finalized, template-level changes will update down-stream syllabi.

- UGCC members can add important semester dates like last day to add classes, drop/revision deadline, and last week of classes.

- Section blocks should be expanded to include a schedule block for listing class topics and dates.

- When copying a syllabus for a new semester, the instructor can add the teaching schedule and there is a first attempt at updating the schedule to the new semester’s dates.

- Instructors can upload a schedule file that can populate a schedule block.

- Provide functionality for downloading the syllabus in multiple formats like *.docx, *.pdf, *.md.

- Process improvements include:

- Automated frontend testing

- GitHub actions that will run tests and report statement coverage

- Scripts to support VM deployment

Stretch goal:

- Usability testing by CSC faculty

Technologies and Other Constraints

- Technologies are based on extending the existing codebase, which uses:

- Docker

- Java (for the backend)

- PostgreSQL

- JavaScript (for the frontend)

- The software must be accessible and usable from a web browser

Sponsor Background

Katabasis is a non-profit organization that specializes in developing educational software for children ages 8-15. Our mission is to facilitate learning, inspire curiosity, and catalyze growth in every member of our community by building a digital learning ecosystem that adapts to the individual, fosters collaboration, and cultivates a mindset of growth and reflection.

Background and Problem Statement

It can be difficult for everyone to consider the long-term effects of their decisions in the moment, but particularly difficult for students with emotional and behavioral disorders (EBD). Students with EBD will often have increased rates of office discipline, suspension from school, and poor academic performance. Long-term outcomes for students with EBD also suffer as a result, including a significantly greater likelihood for school dropout, high unemployment, low participation in postsecondary education, increased social isolation, and increased levels of juvenile and adult crime.

By encouraging these students to give structure to and reflect on their decisions, we can empower students with EBD to take control over their thought processes and incentive structures. To this end, Decision Intelligence provides an excellent framework for decision modelling, and is highly scalable to all levels of technical fluency.

Project Description

We are seeking a group of students to develop a web interface for middle school students sent out of the classroom (or otherwise dealing with emotional or behavioural issues) to interact with to attempt to better understand their actions, external factors, and outcomes.

Developing an intuitive and approachable interface will be the key to success in this project. It should consist of an elicitation portion, display portion, and editing portion. For the elicitation portion, there should be a gamified way of getting the details of the situation from the student. This can take the form of a literal browser game, or be more indirect, such as phrasing questions in a more whimsical fashion with engaging graphics. The key is to make it approachable and intuitive. For the display portion, we want to see a clear flow from actions to outcomes, as established by the decision intelligence framework (which we will provide the team further details on). The goal here is to make it clear to the users how different actions can lead to different outcomes. We recommend a causal decision diagram (which we can again provide more documentation for), but are open to creative ways to display the same information. Finally for the editing portion, we want to make sure users are able to tweak and adjust the display that has been generated to better match their reality. The elicitation will likely never generate a perfectly accurate display, so we want to make sure users are able to refine the display until it best matches their actual real life circumstances.

In summary, the game should be developed around the following core feature set:

- Gamified and Intuitive Interface: The web platform created must be highly approachable and intuitive, given its target users are likely to have short attention spans and high potential for bouncing off or getting frustrated with systems they can’t understand. Making sure the experience

- Decision Intelligence Framework: The backbone of the system is the Decision Intelligence framework. This consists of a way of structuring the decision making process by identifying levers (or actions), external factors affecting your decision, intermediate factors that act as stepping stones to the final factor, outcomes, the things you are measuring as an end result. Ensuring the interface developed highlights and clearly communicates these factors is essential.

- OpenDI Toolset: The team will adhere to the open source OpenDI standards, which has some data protocols and initial codebases to branch off of. This will provide the team with some initial guidance on DI software development toolsets, and also give experience in working with open source projects.

Technologies and Other Constraints

We encourage students to utilize, adhere to, and potentially contribute to the OpenDI framework.

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Background

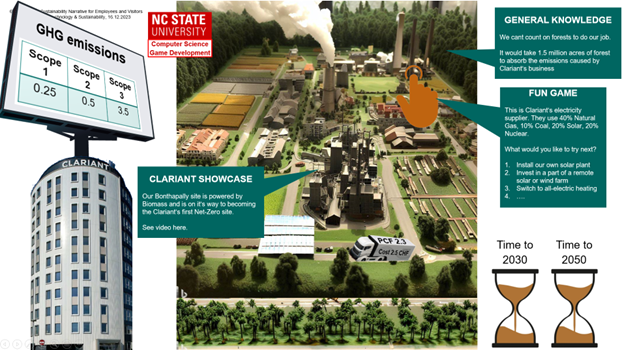

Dr. Lavoine is an Assistant Professor in renewable nanomaterials science and engineering. Her research exploits the performance of renewable resources (e.g., wood, plants) in the design and development of sustainable alternatives to petroleum-derived products (e.g., food packaging). As part of her educational program, Dr. Lavoine aims to help faculty integrate sustainability into their curriculum to raise students’ awareness of current challenges on sustainable development and equip them with the tools to become our next leaders in sustainability.

Background and Problem Statement

In June 2024, Dr. Lavoine and three of her colleagues offered a faculty development workshop on sustainability that introduced a new educational framework to guide faculty in the design of in-class activities on sustainability.

This platform integrates three known frameworks: (1) the pillars of sustainability (environment, equity, economy), (2) the Cs of entrepreneurial mindset (curiosity, connection, creating value) and (3) the phases of design thinking (empathy, define, ideate, prototype, implement, assess). For ease of visualization and use, this platform was represented as three interactive spinnable circles (one circle per framework) that faculty can spin and align with each other to brainstorm ideas at the intersection of phases from the different frameworks.

Because this platform only served as a visual tool with no functionality, a group of CSC students took up the challenge in Fall 2024 to build an entire website and database, based on this initial framework, for faculty to design and seek inspiration for in-class activities on sustainability. The CSC students did a great job at designing and programming the front end and the back end of the platform. They have suggested great graphics ideas and have built the entire foundation for users to 1- create an account, 2- upload in-class activities and 3- search for in-class activities.

This website is not quite complete yet. Hence, the purpose of this project is to keep building and designing this website to have a final prototype by June 2025. Indeed, Lavoine and her co-workers will offer this faculty development workshop in June 2025 again. How great would it be for the faculty participants to have this website functioning and ready to use?

Once finalized, Dr. Lavoine intends to share this website with the entire teaching community – and to use it primarily during faculty development workshops on sustainability. Demands from instructors and students on sustainability are exponentially increasing. Now, more than ever, it is important to put in place the best practices in teaching and learning sustainability. It is not an easy task, and research in that field has already shown some tensions between frameworks, systems, etc. The goal of this website is not to tell faculty and instructors what sustainability is about (because there is no single definition), but rather to guide them towards creatively and critically design active learning, engaging activities that raise students’ awareness on the value tensions around sustainability and help them make decisions!

Project Description

As mentioned above, the design of the website and its functionality has been initiated in Fall 2024. It will be important to build on the work that has already been done to make this Spring 2025 project a success.

Looking at the “amazing work” the 2024 CSC team has done, Dr. Lavoine has some ideas to take this website to the next level:

- Replace the previous graphic with a new, visually appealing design showing connections between the three frameworks and enabling user interaction.

- Implement upgrades and untested features from the 2024 website version.

- Add a text box for users to input a summary/brief description of activities during the upload process.

- Represent activities as tiles with an image and title.

- Integrate AI-based image generation based on activity titles and descriptions.

- Conduct usability testing with students and faculty, using feedback forms for improvements.

- Make the platform mobile-friendly and evaluate required changes for usability.

- Develop a student training interface on sustainability using submitted activities.

- Explore tools for faculty to create tests, quizzes, or assignments from uploaded activities.

More ideas are welcome! This website will evolve and get better with your input and your expertise.

Technologies and Other Constraints

Frontend: ReactJS v18.3.1, Backend: Django 4.2.16, Database: MySQL 8.4.2, Containerized with Docker

This platform should be, first, accessible through a desktop/laptop and a tablet (hence, a touch-screen option would be nice), but in the future, why not think about a mobile application or a web platform accessible through mobile.

When it comes to hosting this platform, Dr. Lavoine would consider using first the virtual machine options available at NC State (https://oit.ncsu.edu/about/units/campus-it/virtual-hosting/pricing-and-billing/). If this platform reaches demands and needs beyond expectations, cloud services (such as AWS) could be used.

Sponsor Background

The mentors mentioned above are part of the Enterprise Architecture and Innovation (EAI) division at the North Carolina Department of Information of Technology (NCDIT). They use information technology to make government more efficient, effective, and user-friendly for the public. The EAI team partners with business leaders throughout the state to find innovative ways to solve problems and improve solutions for our customers.

One of the business leaders we have been working closely with over the past two years is the Chief IT Procurement Director for the State of NC, James Tanzosch. James leads the Statewide IT Procurement Office (SITPO) that is responsible for overseeing the procurement of IT goods and services for the state. We have worked with this team to solve many process pain points through innovative solutions, but we still have many more opportunities to improve!

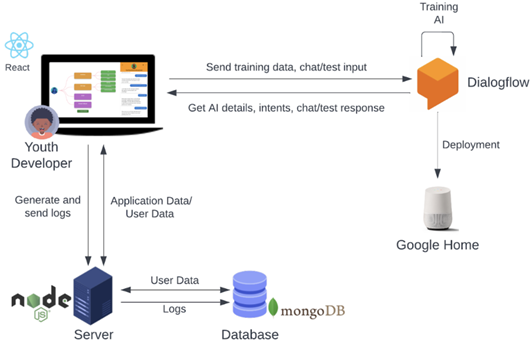

Background and Problem Statement

The NC IT procurement process is a very complex and lengthy process that our end users (agencies) have to navigate for the purchase of IT goods and services. There have been several process changes over the past two years to move an email and paper-based process to a new electronic system that is used for submitting solicitation requests, receiving proposals from vendors, evaluating vendor responses, organizing NCDIT reviews of responses, and awarding a contract to vendors. This has created a more repeatable and transparent process for all to follow.

However, there still is not a user-friendly visual that end users can reference to see where their procurement requests are within the 10-step procurement process, to understand if their request is delayed, and what actions need to be done next. Figure 1 shows the ten steps of the NCDIT IT Procurement Process.

Figure 1: NCDIT 10-Step Procurement Process

Project Description

The vision from the Statewide IT Procurement Office is to create a “pizza” tracker type of visual to show end users where their procurement projects are within the 10-step procurement process. An example of a “pizza” tracker can be seen below in figure 2. In addition to visualizing status, we could make the tracker interactive and/or provide access to available educational content so the end users understands the critical tasks / activities they need to be aware of to progress to the next step within the 10-step procurement process.

Figure 2: Example of a Domino’s Pizza Tracker

This tracker would be beneficial to the end users in a couple of ways:

- The tracker will give end users one location to see their procurement projects, where those projects currently are in the process, and have links / connections to educational information within that same visual. This will keep the end users from having to access multiple systems and sites for this information.

- The educational content linked to this tracker will allow new end users and those not as familiar with the IT procurement process to get up-to-speed on the process quicker. This will help them have a better overall end user experience.

Technologies and Other Constraints

The application will be web-based and mobile-friendly. It must utilize Azure Entra for authentication. The choice of technology stack or framework is flexible, provided that only active open-source technologies are selected.

The application should be designed for deployment to a cloud provider using a standard Docker container. Alternatively, the agency has access to the Power Platform, which can also be utilized for development.

Project and status information will be provided through a predefined REST API (a Swagger file will be supplied). Any additional backend resources developed for this application should adhere to RESTful principles.

If a database is required, it should be a Platform as a Service (PaaS) solution that can be deployed as a native service on Azure. Please note that options from the Azure Marketplace are not currently permitted within the state. It will be important for any proposed developed solution, that the solution is compliant with allowed state technologies. The NCDIT mentor team can work with students to align the requirements as needed.

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Background

The Senior Design Center (SDC) of the Computer Science (CSC) Department at NC State oversees CSC492—the Senior Design capstone course of the CSC undergraduate program at NC State. Senior Design is offered every semester with current enrollment surpassing 200 students across several sections. Each section hosts a series of industry-sponsored projects, which are supervised by a faculty Technical Advisor. All sections, and their teams, are also overseen by the Director of the Center.

Background and Problem Statement

Senior Design involves extensive collaboration among students, faculty, and sponsors. Regular meetings are essential for teams to coordinate their work, report progress, and seek feedback from their sponsors. Scheduling these meetings, however, is a significant challenge. The process often involves numerous back-and-forth communications to find mutually agreeable times, taking into account each participant’s availability, room availability, and potential conflicts with other obligations such as classes or other meetings.

Current tools like when2meet and Doodle facilitate some aspects of scheduling but lack the ability to enforce necessary constraints, such as avoiding scheduling during class times or reserving appropriate meeting rooms. Furthermore, there is no centralized system that allows Senior Design staff and faculty to view and manage all meeting schedules across sections, while still limiting visibility for students and sponsors to their respective teams.

Project Description

For this project, your team will develop a Web application to streamline the scheduling of meetings for Senior Design participants. This application will enhance existing scheduling solutions by introducing additional constraints and functionalities tailored to the needs of the Senior Design Center. The system will have three types of users: system administrators, teaching staff, and team members (students and sponsors).

The entire feature set of the system is not fully determined, so students are welcome to propose useful features. However, the initial system should provide features such as:

All Users

- Authenticated users can initiate a meeting request

- Specify a date range for when the meeting can occur

- Specify a duration for the meeting

- Indicate if virtual or in person

- View expected available time slots of respondents

- The system will produce a unique link that can be shared

- Any user with the link can respond to meeting requests by indicating availability on a calendar view in 15-minute increments. Time blocks can be marked as available or “if-need-be”.

- Responses can be modified

- Prepopulate their profile with typical availability, to serve as a starting point when responding to requests.

- Send messages to participants in meeting requests they are a part of

- Receive notifications for confirmed meeting schedules and updates.

Teaching Staff

- View all meetings scheduled for teams within their sections.

- Filter meetings by team, date, or meeting type.

System Administrators

- Manage “workspaces”, which serve as groupings of users. For example, this can be used to segment users into semesters or similar groupings.

- Manage meeting rooms with a designated Google Calendar each. If the calendar for a room is not busy at a specific time, the room is available.

- Ability to toggle room availability on or off, so that an existing room can be excluded from consideration for a meeting request

- View and manage all scheduled meetings across all sections and teams.

- Assign default meeting durations and room preferences based on meeting type (e.g., sponsor meetings vs. team-only meetings).

- Configure integration with Google Calendar for system-wide and individual availability.

- Assign users into teams and/or sections, as well as define their role in these.

Additional features can be added if time permits, such as setting up recurring meetings.

Technologies and Other Constraints

This will be a Web application running on Docker containers. The backend will expose a REST API and will be written in Node.js with the Express Framework. The frontend will be built using React. The database will use MySQL/MariaDB. Integration with the Google Calendar API will be implemented to sync availability and meeting schedules.

This application will use a combination of Shibboleth and local accounts to authenticate users.

Sponsor Background

SAS provides technology that is used around the world to transform data into intelligence. A key component of SAS technology is providing access to good, clean, curated data. The SAS Data Management business unit is responsible for helping users create standard, repeatable methods for integrating, improving, and enriching data. This project is being sponsored by the SAS Data Management business unit in order to help users better leverage their data assets.

Background and Problem Statement

An increasingly prevalent and accelerating problem for businesses is dealing with the vast amount of information they are collecting and generating. Combined with lacking data governance, enterprises are faced with conflicting use of domain-specific terminology, varying levels of data quality/trustworthiness, and fragmented access. The end result is a struggle to timely and accurately answer domain-specific business problems and potentially a situation where a business is put at regulatory risk.

The solution is either building, or buying, a data governance solution to allow you to holistically identify and govern an enterprise's data assets. At SAS we've developed a data catalog product which enables customers to inventory the assets within their SAS Viya ecosystem. The product also allows users to discover various assets, explore their metadata, and visualize how the assets are used throughout the platform.

Now we have established the lay of the land, let's consider two cases which expose some of the weaknesses of a data catalog:

- As part of some financial reporting, an enterprise has some number of data tables upon which an ETL (exchange-transfer-load) flow is performed to generate a report-ready data table that is used in displaying or generating the content for a report. Currently in our data catalog, we have the ability to identify these individual assets, but only via their lineage are we able to understand their connection. Additionally, we don't have the ability to govern (in terms of authorization, versioning, etc.) all the assets involved in this process (input data tables, ETL flow, report-ready table, report) as a unit, but only as individual assets.

- In an enterprise, data assets are typically owned by a given business unit and for another party/business unit to make use of their data they'll need to go through some type of request process and then get a copy/tailored version of the data asset(s) for the requestor's use case. Currently in our data catalog, you may be able to identify a set of assets and who the owner of those assets is within your enterprise, but there is no formal mechanism for gaining and governing access.

This brings us to a burgeoning concept known as "data as a product "and "data products" in industry (see terminology below). A data product is a combination or set of reusable data assets curated to solve a specific business problem for a data consumer. If we could create the concept of a data product and data product catalog on top of our existing data catalog, we could realize the connections of existing assets and govern their entire lifecycle as one unit.

Terminology

Data as a product is the concept of applying product management principles to data to enhance its use and value.

Data product is a combination of curated, reusable assets (likely datasets, reports, etc.) engineered to deliver trusted data (and metadata) for downstream data consumers to solve domain-specific business problems. A more technical definition of a data product would be a self-describing object containing one or more data assets with its own lifecycle.

Data catalog is a centralized inventory of an organization/ecosystem's data assets and metadata to help them discover, explore, and govern their data.

Data product catalog is similar to a data catalog, but the inventory doesn't consist of an organization's data assets, but it's product. The focus of a data product catalog is to reduce the gap between data producers and consumers to help data consumers solve their domain-specific problems.

Project Description

As part of this project, you'll begin the work of creating a data product catalog. The tool must allow users to define a blueprint/template for a data product (i.e. name, description, shape/characteristics of assets, etc.). After a blueprint is defined, the user must be able to perform an analysis of available metadata and receive suggestions of potentially existing data products in the metadata. If a user believes one or many of the suggestions are accurate, they must be able to create a data product object based on the suggestion.

Data Product Blueprint and Instances

The tool must be able to define a blueprint for a data product (`data product blueprint`) and allow for instances of the blueprint (`a data product`) to be created.

The tool must also support the remaining basic CRUD (create, read, update, delete) operations for blueprints and instances of the blueprints. The tool may support versioning.

As a suggestion, see the following model: https://docs.open-metadata.org/v1.4.x/main-concepts/metadata-standard/schemas/entity/domains/dataproduct

Metadata

The sponsor will provide a script for the generation of synthetic metadata based off the Open Metadata schema (https://docs.open-metadata.org/v1.4.x/main-concepts/metadata-standard/schemas). The generated metadata will be available in a CSV format and the tool must be able to upload or otherwise ingest the metadata.

Identification

Given metadata and a data product blueprint, the tool must allow the user to run a identification algorithm to provide suggestions from the metadata for assets matching the blueprint.

The implementation of the identification algorithm is up to the team, but the algorithm:

- should be performant (to be defined by team in collaboration with sponsors). On a small amount of metadata, the algorithm should run in seconds.

- must provide results as suggestions with some type of ranking/score

- may provide the ability for the user to define any configuration properties for the algorithm

Manual Assignment

Given metadata and a data product blueprint, a user with knowledge of a data product must be able to use the tool to manually create a data product (an instance of a data product blueprint). The creation process must include mapping of metadata to the data assets defined in data product blueprint.

Technologies and Other Constraints

- Any open source library/packages may be used.

- This should be a web application. While the design should be responsive, it does not need to run as a mobile application.

- React must be used for UI.

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Background

Bandwidth is a software company focused on communications. Bandwidth’s platform is behind many of the communications you interact with every day. Calling mom on the way into work? Hopping on a conference call with your team from the beach? Booking a hair appointment via text? Our APIs, built on top of our nationwide network, make it easy for our innovative customers to serve up the technology that powers your life.

Background and Problem Statement

As a company, we’re always looking for ways to improve productivity and interpersonal communication. A solution allowing us to see real time analytics about our internal communications platform (Slack) could lead to insights on how we communicate with those both within and across our internal teams.

Project Description

Usage statistics for emojis, both in messages and as reactions to them, show how we express ourselves in the workplace and can be a valuable tool for gauging the feelings and mentalities of our employees, allowing us to act on those insights and create a better workplace environment.

The problem? The data is all retrievable, but we have no meaningful way to view and act on it. The solution: A way to view this data broken down by different metrics in an easily understandable fashion.

We would like to be able to view the total number of times each emoji has been used across a Slack workspace. There could be a table showing the top few, but there should be a way to search for a specific emoji even if it hasn’t been used. It would be nice if we had different views for emojis used in messages, as reactions, and combined. Additionally, we would like to have the same views, but filtered by individual(s) and channel(s). This includes the ability to view an individual(s) emoji usage across specific channel(s).

There may be a place for sentiment analysis in this project. For example, a 👍 likely means the person had a positive reaction to a message, but a 👎 likely means the person had a negative reaction to the message. What about other emojis, like 🙂, that are more ambiguous?

Technologies and Other Constraints

We’d like for this data to be viewable in some kind of web application/dashboard, this should most likely be done in JavaScript/Typescript (maybe React). Feel free to find libraries that provide nice tables and/or graphs. How exactly you choose to represent the data is up to you. The web app should be viewable internally only, meaning we’ll need to deploy it using AWS, potentially via ECS, a lambda, or Elastic Beanstalk.

The back end for this will probably require a database to hold the historical data, as well as an API for grabbing and manipulating the data from the database. Python should be good for this, but it could be done in other languages if you determine some advantage a language offers. Since the project is going to contain at least a front end and back end, it should be Dockerized with a container for each component.

Sponsor Background

NC State DELTA, an organization within the Office of the Provost, seeks to foster the integration and support of digital learning in NC State’s academic programs. We are committed to providing innovative and impactful digital learning experiences for our community of instructors and learners, leveraging emerging technologies to craft effective new ways to engage and explore.

Background and Problem Statement

This project is a continuation of previous Senior Design Projects from Spring and Fall 2024, continuing the development of an editor for creating branching dialogue chatbots. While there is a lot of focus on using AI to power various instances of a "chatbot" system, there is still a tendency for AI-driven chat systems to go "off the rails" and provide unexpected results, which are often disruptive, if not exactly contrary, to the intentions behind a chatbot dialogue simulation where accuracy matters. We have developed a "chatbot" prototype that simulates having conversations in various contexts (conducting an interview, talking to experts, leading a counseling session, etc.) using a node-based, and completely human-authored branching dialogue format. While this guarantees that conversations remain on-script, it also means that a human needs to author the content, and this responsibility currently falls to internal developers on the DELTA team, instead of instructors who have more expertise with the content. We feel like this tool could be a benefit to a large number of faculty at the University and, extending the efforts of the previous student teams, we would like to expand the capabilities of the editor to support widespread use and/or adoption of this tool.

Provided are some current examples of how the chatbot tool is actively in use at NC State:

- Counseling Chatbot, https://cechatbot.ced.ncsu.edu/

- Horse Wellness, https://sites.google.com/ncsu.edu/trot-to-trophy-ii/scenario-chat

- Interview a Document, https://go.distance.ncsu.edu/ed730/

Project Description

DELTA has collaborated with the Senior Design Center in the Spring and Fall of 2024 to develop a functioning prototype of an authoring application for these node-based conversations. The tool gives users direct control over the conversational experiences they are crafting with the ability to visualize, create, and edit the branching dialogue nodes. This authoring tool does not require users to have any programming experience, as the tool converts these nodes directly into a data format which is compatible with chatbot display systems.

Continuing development from Fall 2024, our primary goals for Spring 2025 focus on further improvements to the node editing interface and the user experience; in particular we would like to introduce expanded conversational capabilities and quality of life features in the editing interface, potentially including:

- Enhancements to options for editing, previewing, and exporting chat conversations.

- Improved node organization groups to make it more visually clear which options appear as a single choice.

- "Comment" nodes, not connected to the main dialogue tree, for the purpose of planning and organizing content and keeping track of work-in-progress conversations.

- Support for "Dinner Party" scenarios where a single "chatfile" (JSON data format) could contain several speakers and concurrent bubbles of conversation that an end user could drop into and out of in non-linear fashion.

Technologies and Other Constraints

The previous student teams developed the current editor using SvelteFlow in the frontend and a Node.js REST API backend powered by Express, and using a MySQL/MariaDB database. While we imagine continued development in the same environment would be the most efficient path forward, we are still somewhat flexible on the tools and approaches leveraged.

We envision the editor, and chatbot instances themselves, as web applications that can be accessed and easily shared from desktop and mobile devices. The versions of the chatbot currently in use are purely front-end custom HTML, CSS, and JavaScript web applications, which parse dialogue nodes from a "chatfile" which is just a human readable plaintext file with a custom syntax. We want to preserve the node-based structure and current level of granularity of the chat system, but are flexible regarding the specific implementation and any potential improvements to data formats or overall system architecture.

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Background

We are the NC State Urban Extension Entomology Research & Training Program, and our mission is to promote the health and wellbeing of residents across North Carolina within and immediately surrounding the built environment. Our primary goals are to partner with the pest management community of NC to 1) address key and ongoing issues in the field through innovative research, 2) develop and disseminate relevant and timely publicly available information on household pests, and 3) to design and offer impactful training programs to pest management professionals.

Background and Problem Statement

The pest management industry is often behind the curve in the widespread implementation of technology, which in this modern age greatly limits access to available information. Currently, despite our program frequently developing crucial publications and offering impactful training programs, only a small percentage of our stakeholders utilize these services. This disparity is largely due to the way in which these services are currently presented: hidden within obscure NC State domains or sent via mail as paper bulletins across the State. As a result, countless stakeholders across NC currently miss integral information which could influence pest management programs, directly impacting the health of residents across the State.

Despite the slow uptake of technology within the pest management industry, one piece of technology has become ubiquitous: the smartphone. We have already worked with a team to begin to leverage this technology through the development of several core components of an app (The WolfPest App) that connects our generated materials and courses directly to the pest management industry across the state. We launched the app in January, 2025.

Project Description

Despite the incredible progress made last semester, we have already identified additional application features that, if added, will further flesh out the app and provide critical functionality and access to our stakeholders. This includes the incorporation of a pest-database of all published content housed within the app, where stakeholders can “favorite’ pests to quickly access their information. Further, we want to offer more streamlined specimen submission for identification, as well as a “store” where stakeholders can purchase training materials from a simple online catalog.

Technologies and Other Constraints

As this is well beyond our typical wheelhouse, we would like to work with the students to identify key pieces of technology they feel are most useful for these additional components of the project. We would like to work with the students to build upon the app’s foundation, ensuring that the second app iteration is as equally streamlined and simplified for use by the pest management industry. We recognize the development and updating of applications is an ongoing process, and given this project is centered around NC State resources the IP would likely remain with NC State University. However, we would want students in this project to attend the annual NC Pest Management Conference in January to announce their involvement with the project, and to familiarize themselves with the industry they are serving (if they have the interest in doing so). We are unaware of any other issues, and are happy to discuss changes in our project ideas as feasibility and timeliness dictates.

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Background

Ankit is the Founder & CEO of K2S and an NC State Computer Science alum. He envisions a platform which enables other alumni an easy way to give back to the student community by way of mentorship to active Computer Science students. From an industry and a university perspective we’re trying to create a virtual engagement program for the NC State Community.

Background and Problem Statement

Successful alumni often revisit the path that got them there, and it invariably leads them down to the roots of their alma mater. In recognition of their supporters and heroes along that path, they have the urge to become one themselves.

In the Department of Computer Science at NC State, we understand that navigating the program and curriculum can be challenging. Wouldn't it be great to have someone who has been there before support and guide you around the pitfalls, helping you reach your full potential? Much of the skills and knowledge you will experience will take place in classrooms and labs but it also happens in the individual connections made with peers and alumni. CSC's alumni network includes over 10,000 members, many that have been very successful in their careers and who are eager to give back and support current students.

We proposed creating an online mentorship portal that connects current CSC students with CSC alumni to share a goal of promoting academic success and professional advancement for all. A portal which allows alumni to easily provide mentorship and their lessons learned not only is fulfilling to the alumni as a way of giving back, it also provides real help and guidance to students stepping out from the shadows of our lovely campus. Examples include George Mason University's Mason Mentors Program and; UC-Berkley's Computer Science Mentor Program.

Project Description

WolfConnect got its name when it was kickstarted with a team of Senior Design Students in Fall 2023. Today it includes the ability to sign up, set up your profile, education and work history and send a connection request to other users. You can also send private messages to other users.

Primary Portal end-users include: CSC alumni looking to give back to their alma mater by mentoring students and; current CSC UG and GD students looking for help on a specific topic or project. Secondary users could include alumni who are looking for speaking opportunities and; current students searching for contacts for specific internships and Coops.

This semester, we aim to take the WolfConnect portal to the finish line by making it ready for production. This requires some new features and enhancements to existing features, as well as some bug fixes. The main new feature will be adding a “News Feed”. Some of the existing features that can be enhanced include:

- Implementing user retention metrics for administrators

- Improving forum discussions to allow replies in threads and adding tags to posts

- Implementing an algorithm to suggest connections based on user interests and schedule compatibility

There are also some implemented features that are incomplete or not working properly, including:

- Ability to log in with Shibboleth (for NCSU users)

- Saving user’s availability times

- Saving user’s profile picture

Technologies and Other Constraints

The previous team has a detailed handoff of their deliverables, source code and user guides. The idea would be to use that to build on top of that existing model. The system runs on Docker. The backend container is PHP-based and serves a REST API using the Laravel Framework. The frontend container is built with React and MaterialUI. A MySQL database will be used to store all the data. The platform is hosted on an NC State-hosted VM.

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Background

The North Carolina Department of Health and Human Services (DHHS), in collaboration with our partners, protects the health and safety of all North Carolinians and provides essential human services. The DHHS Information Technology (IT) Division provides enterprise information technology leadership and solutions to the department and their partners so that they can leverage technology, resulting ultimately in delivery of consistent, cost effective, reliable, accessible, and secure services. DHHS IT Division works with business divisions to help ensure the availability and integrity of automated information systems to meet their business goals.

Background and Problem Statement

We have an existing legacy application, OpTrack, that utilizes FoxPro and was developed 25 years ago in a production-only environment to meet business operation needs for tracking the inventory of labels used in the Agency printing shop. The print shop receives shipments of various labels used in the correspondence that is sent out by multiple DHHS Divisions. Labels are received into a main warehouse, but inventory can also be maintained in the print shop for immediate use. Staff use a combination of spreadsheets and the OpTrack application to track inventory in the warehouse and the print shop. A separate system is used to order labels.

Project Description

We would like to create a new application to improve the workflow for tracking label inventory in the warehouse and the print shop. Students will be able to meet with the product owner to analyze existing workflows and discuss desired improvements. The application should rely on role-based access control and expand the current application functionality. In the new application, all users must be able to view the current warehouse inventory levels.

Warehouse staff must be able to create new inventory types, record new stock into their inventory, and remove items from their inventory once print jobs are completed. The user interface should be modern and adhere to digital accessibility guidelines.

Potential stretch goals include adding functionality to send label print jobs to designated printers, to record losses, and to integrate support for barcode scanning.

Technologies and Other Constraints

Tools and technologies used are limited to those approved by the NC Department of Health and Human Services Information Technology Department (NC DHHS ITD). Student projects must follow State IT Policies dictated by NC DHHS ITD to allow the app to be deployed by ITD.

In addition, although there is currently some development work previously performed by the Agency using the Microsoft Dynamics Power Platform; students will not be limited to that framework as the basis of a solution. Students are encouraged to think of innovative solutions to manage inventory and design accordingly. If the Power Platform software is used, DHHS will provide licensing for Power Apps, Power Automate (Workflows), Power Pages, and Dataverse components.

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Background

Division of Parks and Recreation

The Division of Parks and Recreation (DPR) administers a diverse system of state parks, natural areas, trails, lakes, natural and scenic rivers, and recreation areas. The Division also supports and assists other recreation providers by administering grant programs for park and trail projects, and by offering technical advice for park and trail planning and development. The North Carolina Division of Parks and Recreation exists to inspire all its citizens and visitors through conservation, recreation, and education.

Applications Systems Program

The Applications Systems Program works to support the Division and its sister agencies with web-based applications designed to fulfill the Division’s needs and mission. The current suite of applications addresses: personnel activity, Divisional financial transactions, field staff operations, facilities/equipment/land assets, planning/development, incidents, natural resources, etc. Data from these web applications assist program managers with reporting and analytic needs.

Many previous SDC projects have been sponsored, providing a strong understanding of the process and how to efficiently support the completion of this project while gaining insights into real-world software application development. Our team includes five NCSU CSC alumni, all of whom have completed projects with the SDC. The Apps System Program will be overseeing the project and working directly with you to fulfill your needs and facilitate the development process.

Background and Problem Statement

The Division of Parks and Recreation (DPR) has a passport program that lets visitors track their adventures in state parks and collect stamps at each location. The passport features a page for every state park, along with pages for four state recreation areas, three state natural areas, and nine state trails. Each page includes a photo of a signature landmark, activities available at the site, contact information, and more.

Visitors typically collect stamps at the park's visitor center, though stamps for trails can sometimes be obtained at partner locations like bike shops. This initiative encourages park-goers to explore each park and collect every stamp. While having a physical passport can be fun and engaging for park visitors, it invites problems such as if a visitor loses their passports or a new version is released, their progress will be lost. Our solution to these problems is a digital passport. A digital passport can store a visitor's progress online and let it be easily accessed and updated without the risk of losing physical copies or starting over with each new version.

After discussions with our Public Information Officer and her team, there is significant interest in developing a digital passport application. This transition could provide numerous benefits, including reduced printing costs, timely updates on passport news and versions, and a richer, more informative experience for passport holders about state parks.

Additionally, this project will provide an opportunity for the Applications System Team to become more familiar with new technologies and explore how they can be incorporated into existing applications.

Project Description

The Digital Passport app is an innovative project aimed at enhancing the visitor experience at State Parks by transitioning from a physical paper passport to a digital platform. This app will allow users to track their visits and progress, collect virtual stamps using geolocation technologies, and explore information about each park and natural resources like fauna and flora found at them. This project will be reasonably open-ended in its approach, but there are a couple of features that should be maintained or included.

Key Features

- Offline Accessibility: As a Progressive Web Application (PWA), the app will be accessible offline, allowing users to access their passport information and track their progress without the need for a constant internet connection.

- Geolocation Integration: Users can collect virtual stamps as they visit various State Parks, trails, and Recreation Areas. Geofencing technology will ensure that stamps are only awarded when users are physically present at the designated locations.

- Informational Content: Each digital passport page will feature images of signature landmarks, icons indicating available activities, and details about the number of trails at each park. This will provide a more informative and engaging experience compared to the traditional physical passport.

The Digital Passport App represents a unique opportunity for students to contribute to a meaningful project that encourages exploration and appreciation of NC State Parks while reducing costs associated with traditional printing and materials.

Technologies and Other Constraints

Tools and technologies used are limited to those approved by the NC Department of Information Technology (NC DIT). Student projects must follow state IT policies dictated by NC DIT once deployed by DPR. Additionally, students cannot use technologies under the Affero General Public License (AGPL).

Besides these constraints, we do not have requirements on what technologies students can use. We are encouraging students to experiment and use technologies they find interesting. We would be interested in seeing potential ARCGIS incorporation, but it is not a requirement or need. If students do go down that route, we can provide ARCGIS data relevant to state parks and trails.

Students will be required to sign an NDA related to personal and private information stored in the database and to sign over IP to sponsors when the team is formed. We are willing to work with students on what IP they can use for their future Resume/CV.

Sponsor Background

The Senior Design Center (SDC) of the Computer Science (CSC) Department at NC State oversees CSC492—the Senior Design capstone course of the CSC undergraduate program at NC State. Senior Design is offered every semester with current enrollment exceeding 200 students across several sections. Each section hosts a series of industry-sponsored projects, which are supervised by a faculty Technical Advisor. All sections, and their teams, are also overseen by the Director of the Center.

Background and Problem Statement

Grading team-submitted documents is a critical component of evaluating Senior Design projects. Currently, this grading process relies on spreadsheets where teaching staff manually input grades for rubric items, calculate averages, and aggregate scores. While functional, this system is prone to errors, such as accidentally modifying formulas, and lacks advanced features to simplify grading and feedback. Additionally, managing and distributing these grading spreadsheets across sections and teaching staff adds unnecessary overhead.

The existing process does not easily support flexible rubrics or proper aggregation of scores from multiple graders. Furthermore, there is no streamlined way for administrators to monitor grading progress or ensure consistency across sections. These shortcomings highlight the need for a centralized, robust grading tool tailored to the SDC’s specific needs.

Project Description

For this project, your team will develop a Web application to replace our current spreadsheet-based system. This application will streamline document grading, improve accuracy, and introduce advanced features such as flexible rubrics, multi-grader score aggregation, and role-based access.

System Features

The "SDC Documentation Grader Tool" will include the following features, tailored to specific user roles:

Grading Management

- Rubric Definition and Customization

- Administrators can define grading rubrics with nested categories, optional items, conditional weights, and extra-credit options.

- Rubrics can be assigned to specific documents, allowing flexibility for different assignment types.

- Multi-Grader Support: Administrators can assign multiple graders to the same document. Rubric scores for each item will be averaged across all graders who evaluate a document.

- Real-Time Updates: Grading progress and rubric aggregations are updated automatically to provide live feedback to users.

Role-Based Features

- Administrators

- Assign teaching staff (advisors and TAs) to teams and documents for grading.

- View all grades and grading progress across all teams and sections.

- Designate deadlines for teaching staff to complete grading a specific document.

- Monitor overall grading and compliance with deadlines.

- Teaching Staff (Advisors and TAs)

- Access assigned documents and rubrics.

- Input grades with optional detailed feedback for rubric items.

- View only the grades they have submitted for assigned documents.

- Students

- Access final grades and detailed feedback for graded documents, once released by administrators.

Technologies and Other Constraints

This Web application will run on Docker containers. The backend will expose a REST API and will be developed using Node.js with the Express Framework. The frontend will be built with React. The database will use MySQL/MariaDB. Authentication will be integrated with Shibboleth to leverage existing institutional infrastructure.

Sponsor Background

From critically endangered vultures and gorillas in Africa to rare native plants and amphibians in our state, the North Carolina Zoo has been a leader in wildlife conservation for more than two decades. Our team of experts conducts thorough research to understand the needs of threatened species. We provide data and technology to global communities and organizations to assist them in their efforts to protect wildlife. Zoo staff also work to protect over 2,800 acres of land around our property.

Background and Problem Statement

The NC Zoo is home to three Grey Mouse Lemurs: Cholula, Cedar, and Speedwell. Mouse Lemurs are among the smallest primates, averaging only 3 inches tall. As a nocturnal species, these lemurs tend to spend their days sleeping and nights actively foraging, exercising, and playing. This nocturnal behavior pattern is at odds with the Zoo’s visitor times, making the educational mission of the Zoo a challenge for keepers. To address this problem, keepers have experimented with a variety of lighting conditions in the lemur enclosure to “flip” daytime and nighttime. However, keepers are responsible for many animals and their enclosures, making tracking the effectiveness of lighting configurations difficult. Keepers would like a way to track and visualize the movement patterns of Cholula, Cedar, and Speedwell to better understand how changes to lighting conditions impact their behavior.

The NC Zoo is home to three Grey Mouse Lemurs: Cholula, Cedar, and Speedwell. Mouse Lemurs are among the smallest primates, averaging only 3 inches tall. As a nocturnal species, these lemurs tend to spend their days sleeping and nights actively foraging, exercising, and playing. This nocturnal behavior pattern is at odds with the Zoo’s visitor times, making the educational mission of the Zoo a challenge for keepers. To address this problem, keepers have experimented with a variety of lighting conditions in the lemur enclosure to “flip” daytime and nighttime. However, keepers are responsible for many animals and their enclosures, making tracking the effectiveness of lighting configurations difficult. Keepers would like a way to track and visualize the movement patterns of Cholula, Cedar, and Speedwell to better understand how changes to lighting conditions impact their behavior.

Project Description

The long term vision for the project is to instrument the lemur enclosure with one or more cameras and a computer-controlled lighting system that will allow keepers to program the lights and objectively measure the time, location, frequency, and duration of movement. This information should be made available via an application that facilitates keepers exploring the history and trends of movement behaviors, possibly filtered by lighting conditions. To facilitate progress in this first semester project, students will receive a library of pre-recorded videos from the Zoo staff. They will use off-the-shelf image processing techniques to identify movement, and display those movements in the application.

The application shall run locally without internet access on a computer furnished by the NC Zoo:

- Enable video (live or pre-recorded) to be analyzed for lemur movement

- Display video (live or pre-recorded)

- Visualize the movement location, time, frequency, and duration of the lemurs

- Enable keepers to enter lighting configuration information, including the characteristics and schedule

- Allow movement analytics to be filtered and summarized based on range or lighting characteristic queries

- Display results of (5) in appropriate graphs and/or visualizations

Technologies and Other Constraints

Students will use existing open source image processing libraries (e.g., Open CV) to analyze videos and open source graphics libraries for visualizing results. If the students elect to design a web-based application, it must still run locally on the computer without internet access.

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Background

Dr. Tian is a research scientist who works with children on designing learning environments to support artificial intelligence learning in K-12 classrooms. Dr. Tian’s PhD dissertation study focused on designing AMBY, a development environment for middle school students to build their own conversational agents.

Dr. Tiffany Barnes is a distinguished professor of computer science at NC State University. Dr. Barnes conducts computer science education research and uses AI and data-driven insights to develop tools that assist learners’ skill and knowledge acquisition.

Ally Limke is a PhD student who works closely with Dr. Barnes and Dr. Tian to understand what teachers need to lead programming activities in their classrooms.

Background and Problem Statement

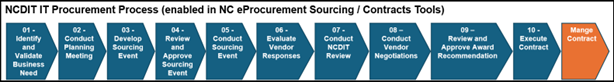

AMBY (“AI Made by You”, Figure 1, see here for a video demo of AMBY) is a web-based application that allows middle school-aged children to create conversational agents (or chatbots) without prior programming experience. In AMBY, children can define the components of the chatbot such as intents, training phrases, responses, and entities. An intent is the underlying goal of a user when they message the chatbot, for example, the intent of a user request “Can you recommend a song?” is “Request for song recommendations”. Training phrases are example phrases the children create for the chatbot to recognize a certain intent. Responses are the list of responses the chatbot will return back to the user if a certain intent gets recognized. Entities are a list of phrases or words that can be extracted from the end-user expressions. AMBY has enabled over 300 middle school students to create chatbots and learn about AI.

Figure 1. AMBY interface with a chatbot example. Children develop the chatbot on the left panel and test the chatbot on the right.

AMBY is currently built on Google Dialogflow, which is an existing chatbot development environment (mainly targeted for small businesses like ordering pizza). We created AMBY to address the educational needs for children to learn about AI by creating AI artifacts. Compared with Dialogflow, AMBY is more visually attractive to younger aged students, and it visualizes the conversation flow which allows users to design conversations, and it eliminates the advanced features of Dialogflow to make it easy for children with no prior programming experience to create a chatbot of their own interest.

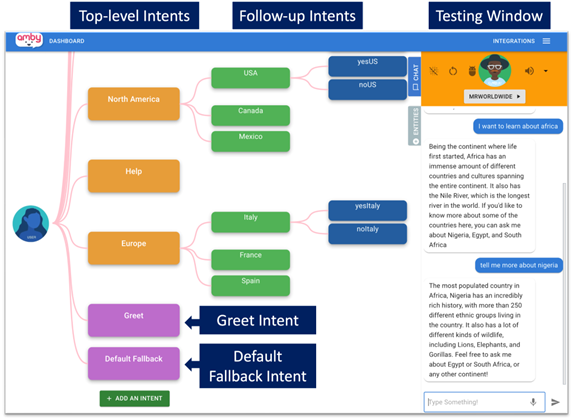

Regarding technical implementation (Figure 2), AMBY uses the React framework front-end and Node.js on the backend. For chatbot training and testing, it communicates with the Google Dialogflow using the Google Dialogflow API.

Figure 2: AMBY architecture.

One constraint of the current AMBY architecture is that its backend relies on Dialogflow. We have encountered several challenges using a Dialogflow backend: 1) Initially not designed for children to build chatbots, Dialogflow has age restrictions for user google accounts (limited to 14+ years old users). In the past we had to pay for specialized google accounts for children to use, but this workaround is not scalable for everyone to use; 2) There is limited information we can pull from Dialogflow to help children understand how their chatbot gets trained and debug on their chatbots.

Project Description

We envision creating a new backend for AMBY for an enhanced control of dialogue models and higher explainability of the chatbot training process. The new backend will replace the “Dialogflow” component  in Figure 2.

in Figure 2.

Project Goals:

- Data Extraction:

- Review the current AMBY database and build a pipeline to extract chatbot data (intents, training phrases, responses, entities) in a structured format (e.g., a .json file) for use in dialogue modeling.

- Backend Design and Development:

- Investigate potential LLM-based backend solutions for dialogue modeling. Possible options to start are OpenAI GPT4, LLAMA, and Rasa. Design a new backend architecture for AMBY.

- Implement the identified backend solution to 1) send extracted data from goal 1 to the backend and 2) train dialogue models for the chatbot. 3) handle chatbot testing, which includes receiving user messages, generating chatbot responses based on trained dialogue models, and sending the output back to AMBY for display in the interface.

- Student Feedback Generation:

- Create a script using LLM prompts that analyzes the content of the children-created chatbot and provides constructive feedback for children. The feedback will analyze the quality of their input training data and identify areas for improvement in the chatbot’s design.

Technologies and Other Constraints

- Technologies required for Web-based app

- MongoDB database

- Express.js

- React.js

- Node.js

- LLM requirements

- OpenAI or LlamaIndex or other free/low-cost, open source API for LLM calls

- Python

- Prompt Engineering

Sponsor Background

Hitachi Energy serves customers in the utility, industry and infrastructure sectors with innovative solutions and services across the value chain. Together with customers and partners, we pioneer technologies and enable the digital transformation required to accelerate the energy transition towards a carbon-neutral future.

Background and Problem Statement

Hitachi Energy is currently developing a monitoring product for bushings and tap changers within the TXpert Ecosystem that utilizes multiple inputs, sub-processes those inputs, and sends them further as inputs to algorithms and for consumption by end-users. The product will fundamentally consist of a single board computer (SBC) and an input/output (I/O) board.

The features of the product will be:

- time sync with GPS

- measure temperature and humidity

- measure leakage currents in bushings

- measure vibrations in tap changers

- measure motor currents in tap changers

The outcome of the project will enable the I/O board to work with the targeted SBC.

Project Description

The project requires software drivers that need to be created and tested – enabling functional verification of the platform.

The required drivers for this project are:

- USB Communications with GNSS/GPS Device (NEO-M9N-00B-00)

- I2C Driver for Temperature and Humidity Sensor (SHT30-DIS-B2.5KS)

- I2C Driver for Light Emitting Diode (LED) Driver (LP5569RTWR)

- USB-to-SPI Device (FT4222)

- Digital Input/Output (DIO) Port (read/write)

- SPI Driver Shift Register Interface (74HC165)

- SPI Driver for high-speed ADC (AD7771)

- SPI Driver for RTD (temperature sensor) (ADS124S08)

Technologies and Other Constraints

- The drivers will be utilized on a Linux OS platform.

- Hitachi Energy will provide a development platform to enable validation of the drivers, targeted at the end of January 2025.

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Background

Hitachi Energy Transformers Business Unit specializes in manufacturing and servicing a wide range of transformers. These include power transformers, traction transformers, and insulation components. They also offer digital sensors and comprehensive transformer services. Their products are crucial for the efficient transmission and distribution of electrical energy, supporting the integration of renewable energy sources and ensuring grid stability.

The Hitachi Energy’s North American R&D team for Transformers business unit, located at the USA corporate offices in Raleigh NC, is looking to partner with NCSU to enhance the functionality of a software component delivered within some of our product offerings.

Background and Problem Statement

The Web API Link is a Hitachi Energy application that allows the transmission of data between remote end points. For this project, we’re concerned with data transmission between remote monitoring devices and the Hitachi Energy Lumada Asset Performance Management (APM) application. The data transmitted are power transformer condition parameter values, like ambient temperature, oil temperature, or gas content in oil, that are tracked or calculated by the remote monitoring devices.

The remote monitoring devices are Smart Sensors and/or Data Aggregators attached to the power transformers. They are able to transmit data using a TCP/IP network and a variety of protocols likeModbus TCP, IEC-61850 or DNP3.

The Lumada APM application is used to assess the condition of power transformer fleets. It can be accessed as a SaaS or an On-Premise application. The Lumada APM application provides a REST API interface that allows the exchange of data with external applications.

Web API Link application description

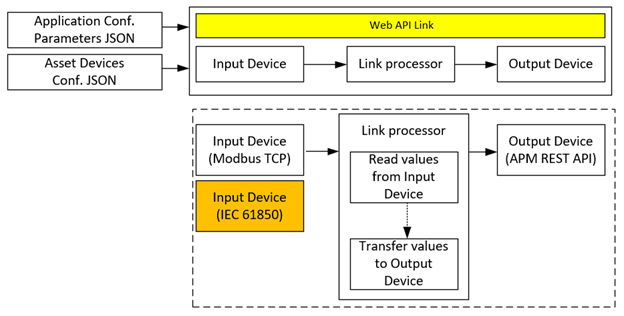

The Web API Link allows the transmission of data between remote end points. It implements the following concepts and allows the transmission of data between them:

- Input Devices. An interface that allows the implementation of communication protocols/functionalities to read data from a device implementing a communication protocol such as: Modbus TCP, IEC-61850, etc.

- Output Devices. An interface that allows the implementation of communication protocols/functionalities to output data that has been read from an input device to transmit/communicate data or an outcome. For example, REST-API, email messages, etc.

Figure 1 graphically describes the main components of the application.

Figure 1

The architecture of the application is structured in such a way that allows the creation of drivers/plug-ins that can be attached to the application to add additional communication protocols/functionalities support dynamically, without having to modify the core code of the application. The configuration of the application and the end points between the Input Devices and Output devices is done via JSON configuration files.

Project Definition

The senior design team’s work will focus on two significant enhancements to the functionality of the Web API Link application. We’re describing these as two phases of the project.

Phase 1 – Web API Link Configuration UI Enhancement.

In the past, configuring the Web API Link has required creating and editing JSON by hand. This work has been simplified by the Web API Link Configurator, an application created by a Fall 2024 senior design team at NC State. This application reduces the tedium of creating the configuration files, but there are some opportunities to extend it to make it even more useful. In particular, the Spring 2025 senior design team will be designing and implementing several enhancements:

- Adding field validations.

- Enhancing the user experience by improving the configuration flows and the availability of preloaded information.

- Adding functionality of the application.

Phase 2

Support for additional protocols would permit the Web API Link application to communicate with additional input devices. Referring to the application diagram in Figure 1, the additional protocols could be supported as Input Device driver/plugins. This would allow extensions to the Web API Link application without significant changes to the design. In the Spring 2025 semester, two supports will be added for two additional protocols:

- DNP3 plug-in component. To allow the Web API Link to communicate and read register values from devices enabled with the DNP3 communication protocol and transmit these values to the Lumada APM application via its REST API interface.

- IEC-61850 plug-in component. To allow the Web API Link to communicate and read register values from devices enabled with the IEC-61850 communication protocol and transmit these values to the Lumada APM application via its REST API interface (to be implemented internally at Hitachi Energy)

Technologies and Other Constraints

The technologies to be used to create the software components are:

- The application must be able to be executed both on Windows and Linux operating systems.

- The output DLL must be able to be executed on x86 processors computers.

- .NET Core 6.0 or above

- C# programming language

- Application configuration files are created using JSON format.

- Visual Studio 2022 or above.

- The application was created on a Windows 10 PC but will be used on a Linux Operating system.

- The necessary libraries to implement the code change, will be provided by Hitachi Energy.

- A test environment will be provided by Hitachi Energy.

The technologies requirements for the Web API Link Configuration UI are:

- ASP.NET Docker container application

- .NET Core 6.0 or above

- Compatible with Ubuntu (20.04). Main target.

- Compatible with Windows (10/11). Desirable.

- The specific technologies could be proposed by the NCSU students working on the project.

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Background

The Laboratory for Analytic Sciences is a research organization in support of the U.S. Government, working to develop new analytic tradecraft, techniques, and technology that help intelligence analysts better perform complex tasks. Processing large volumes of data is a foundational capability in support of many analysis tools and workflows. Any improvements to existing processes and procedures, whether they are measured in time, efficiency, or stability, can have significant and broad reaching impact on the intelligence community’s ability to supply decision-makers and operational stakeholders with accurate and timely information.

Background and Problem Statement

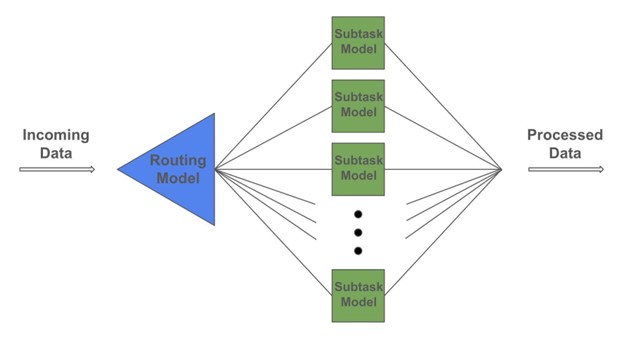

In some cases, the task a model is trained to perform is quite broad, for example the task for a large language model like GPT is to generate human-like text in response to almost any prompt. In other cases the task can be extremely specific, for example the famous Netflix Challenge was to create a model which could predict the rating (1-5 stars) that a user would assign to a given movie. Naturally, all else being equal, more specific tasks are easier to accomplish than general tasks which gives rise to potential accuracy improvements and time/computation cost savings from designing processing systems around task-specific models that do exactly what is desired…and nothing else.