Projects – Spring 2022

Click on a project to read its description.

Sponsor Background

Autonomous vehicles technology is maturing and could offer an alternative to traditional transit systems like bus and rail. EcoPRT (economical Personal Rapid Transit) is an ultra-light-weight and low-cost transit system with autonomous vehicles that carry one or two passengers at a time. The system can have dedicated guideways or alternatively navigate on existing roadways where the vehicles are routed directly to their destination without stops. The advantages include:

- Dual mode – existing roadways and pathways can be used for low install cost in addition to elevated roadways at a lower infrastructure cost than existing transit solutions

- A smaller overall footprint and less impact on the surrounding environment so guideway can go almost anywhere.

The research endeavor, ecoPRT, is investigating the use of small, ultra-light-weight, automated vehicles as a low-cost, energy-efficient system for moving people around a city. To date, a full-sized prototype vehicle and associated test track have been built. For a demonstration project, we are aiming to run a fleet of 5 or more vehicles on a section of Centennial campus. The Vehicle Network server will serve as the centralized communications and vehicle routing solution for all the vehicles.

Background and Problem Statement

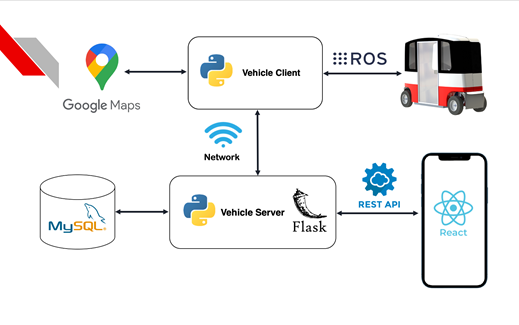

With the aim of running a multi-vehicle live pilot test on Centennial Campus, the overarching goal is to create a Vehicle Network Controller (VNC) and the associated ROS vehicle client software to guide the vehicles and provide interaction to users. Please refer to the architectural diagram below showing the server architecture of the solution. The VNC will manage a fleet of cars, dispatching them as needed for ride requests and to recharge when necessary. It will also provide interaction to users to both make these requests and manage the network itself.

Project Description

The work on the VNC would be a continuation of the work from previous senior design teams. The current VNC solution provides a means to simulate multiple vehicles, evaluate metrics of performance, create administrators/users, and allow for vehicle clients to interact with the server in different ways. Though still considered an alpha stage at this point, there is a need to further develop the VNC to make ready to be used with physical vehicles.

Previous work focused on using Google maps as a means for routing vehicles. This current effort would look at replacing google maps as the vehicle routing and planning. While google maps is helpful with preexisting roads, the vehicles will follow pathways that could include pedestrian or bicycle pathways on preconfigured routes. With that said, we are looking for a solution that does not rely on google maps for routing and path planning.

The effort includes two tasks which would likely need to be done one after the other. Though two tasks are presented, it would be expected that a single semester CSC team might work on just one of the tasks. The first task would be to replace google maps routing and planning with a home-grown solution. Further, the vehicles will follow specific routes, but setting up those routes requires a user interface to either obtain the routes from the existing vehicles or to have the user click and draw pathways on a map.

The second task is to further build-out the simulator for multiple vehicles and multiple rider requests. Effort would include the development of a user interface for easily setting up and running the simulation and creating the specified number of simulated vehicles and people.

For the first task, below are a set of subtasks for the path creation and planning:

- Identification of the interface points of Google Maps in the Vehicle Network Controller

- Path creations: create a user interface to easily obtain paths from existing vehicles and alternatively from a graphical user interface

- Uploading an image to the vehicle network server that serves as the map of the system. Questions to address: how can the map image be sent to client devices for displaying as well?

- The framework for the pathways is an “edge and node” map. Edges represent the pathways and nodes represent the common intersection points of multiple pathways.

- Developing an algorithm to route vehicles to riders in an efficient manner

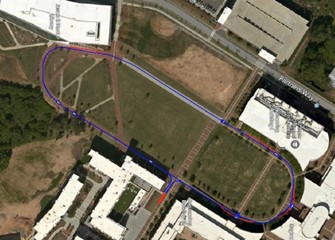

Above picture shows the oval route with paths represented by an “edge and node” map

The second task involves the development of an interface to easily setup and run simulated vehicles. In addition to controlling multiple live vehicles, the vehicle network server can also interact with simulated vehicles. As new sites are explored, creating a tool that can estimate ridership and performance data will help determine the utility of a transit solution on the particular site in question.

To that end, this second task is to provide a user interface to easily setup a vehicle network, run a simulation, and extract performance data. The specific tasks are outlines below:

- Create a user interface that allows user to easily create a map and path for vehicles

- User interface allows for the easy manipulation of initial conditions of the simulation (i.e. number of vehicles in network)

- Ability to set the number of vehicles that can be parked at each station

- User interface allows for the easy input of station demand over time. (i.e. how many riders per hour go from station A to B, how many from B to C, how many from A to C, etc.)

- Application allows for the creation of an arbitrary number of simulated vehicles and simulated “riders”.

- Include top level input parameters such as number of vehicles, number of passengers per hour, relative distribution of people at stations

- Application allows user to input total simulation time and run the simulation. At end of simulation, key parameter data is stored in a specific format (CSV or XML or ?) and is easily displayed for user.

- Key parameters could include total wait time, total ride time, total miles driven for vehicles, battery state of charge, etc.

Below is a set of additional tasks to further develop as desired. Some of them refer to the above two tasks while others are improvements on existing development. As an exhaustive list the expectation is not that all of this should be done within a single semester, but instead it provides a list of possible areas to explore, and initial meetings can be used to refine the scope of these goals.

- Improve mobile app for people calling the vehicle

- Add secure connection to client vehicle ROS package

- Include ability to monitoring battery voltages

- Include charging stations

- Algorithms to re-route vehicles to charging stations when battery is low

- Algorithms to better improve vehicle assignment to requested ride

- Include vehicle storage depot in VPN to allow storage of excess vehicles

- For running multi-vehicle simulations, include improve usability of GUI interface for server.

- Include top level input parameters such as number of vehicles, number of passengers per hour, relative distribution of people at stations

- Include ability to draw paths with mouse and have them persist through sessions

- Ability to set the number of vehicles that can be parked at each station

- Further improve multi-vehicle simulations

- Make running simulations more turn-key

- Add physics within the emulated vehicle module

- Further refine KPI's for success (i.e. dwell time, ride time)

- Further explore the vehicle routing algorithm

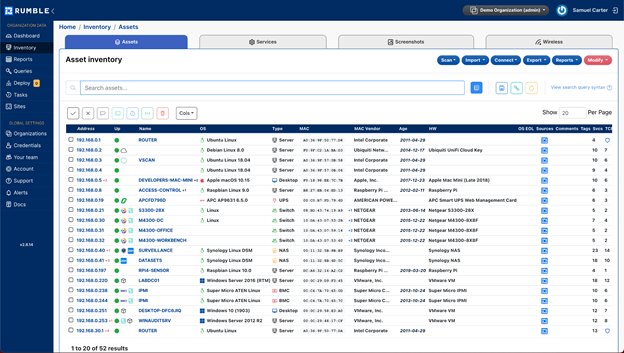

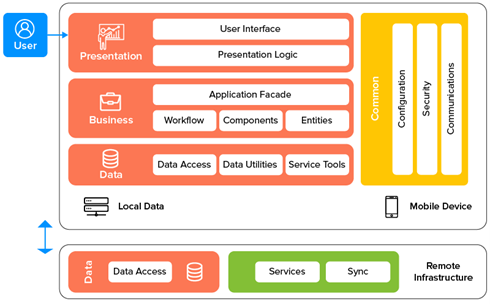

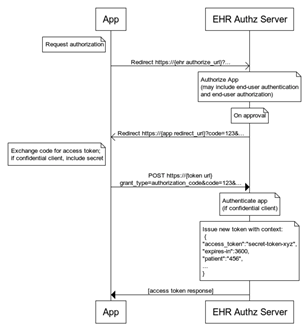

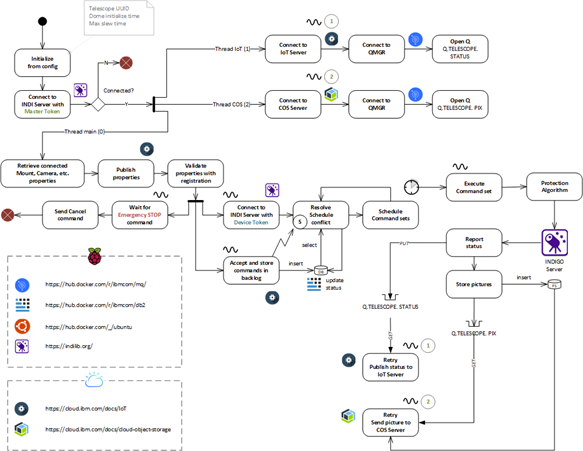

Architecture of Vehicle Network Server

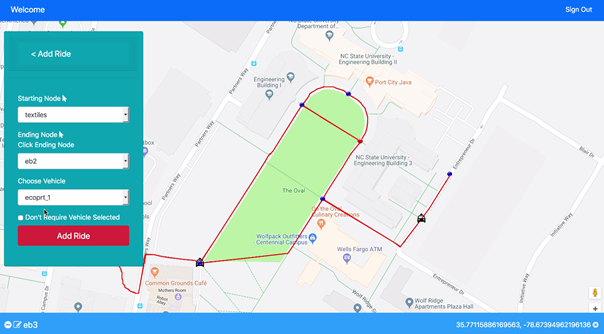

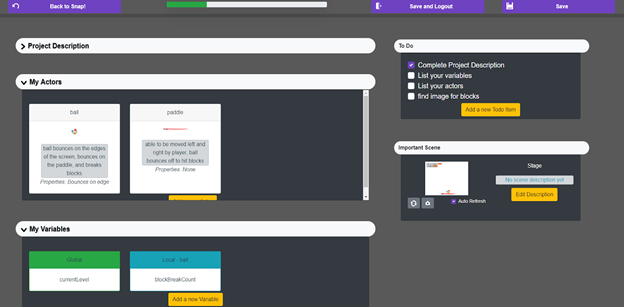

Initial View for Vehicle Network Controller

EcoPRT Vehicle

Technologies and Other Constraints

|

Name |

Description / Role |

Version (if necessary) |

|

ROS |

Robot OS system for autonomous vehicle |

Melodic |

|

NodeJS |

Web Server software. This will run on the web server and will serve the website and connect the website to the database. It will contain a REST API. The REST API will allow the website and other services to access the functions of the web application. |

8.9.4 |

|

Python |

Used to write the Vehicle Server and Vehicle Client processes |

3.4.9 |

|

NPM |

Node Package Manager for installation |

- |

|

MySQL |

SQL implementation. Database that is used for storing tabular data that is very relational in nature. |

14.14 |

|

Neo4j |

Graph database. Used for storing graph-like data. Uses the Cypher query language. |

3.4.9 |

|

Bootstrap |

Using Bootstrap will give more freedom to customize the look and feel of the web application. It also makes it easier for to make a mobile friendly version of the website. |

4.0.0 |

|

AngularJS |

Used for the logic of the website. It works very well for data binding which is the bulk of the web application since all data is pulled from the database. |

1.6.8 |

|

Express |

Routes URLs to files and/or functions |

4.16.2 |

|

HTML5 |

Used to create web pages |

5 |

|

REST |

Used to get information from the server and send it to the front end |

- |

|

Socket.io |

Used to get information from the server and send it to the front end |

2.0.4 |

|

CasperJS |

Used for automated testing of web applications with JavaScript |

1.1.0-beta4 |

|

Mocha |

JavaScript framework for Node.js that allows for Asynchronous testing |

5.0.5 |

|

Chai-HTTP |

Assertion library that runs on top of Mocha |

4.0.0 |

|

Istanbul (nyc) |

Used for determining code coverage |

11.7.1 |

Sponsor Background

#GoBeyond is a media and tech innovation lab pushing the boundaries of what we as humans know to be possible to inspire a new wave of innovation in the process: one that is sustainable and inclusive. Decades ago the world fell in love with Carl Sagan, the mission for the moon, the idea of flying cars. But science and technology have reached a critical inflection point. We no longer are enamored with the idea of infinite progress. We've broken some fundamental social and scientific systems, and it's time to rebuild those, and rebuild the world's trust in innovation to meet the needs of the broader community. In the three years since our founding #GoBeyond has broken ground creating content that has been viewed by millions and featured in over 100 publications including Forbes, Scientific American, and Interesting Engineering. We’re a Women in AI hackathon winner, DeFi Talent Fellow to advance inclusion in blockchain, and Luminary Fellow to advance equality in entrepreneurship. We were also recently nominated for an Emmy for our series Financially Naked, which focused on financial equity and inclusion.

Background and Problem Statement

Last year while most of the world was battling Covid, Jenn, the founder and Chief Story Engineer of #GoBeyond, was hospitalized and diagnosed with an autoimmune disorder. It turns out Covid has been triggering an increase in autoimmune illnesses. Many weeks and hours of internet forum searching later, she learned that one of the top world renowned institutions for treating her illness created an anti-inflammatory nutrition protocol to help patients achieve remission.

Autoimmune disorders, in particular, are one of the fastest growing illness classes with more than 100 unique identifiers and no known cures. According to the National Health Council more than 40% of Americans are battling a chronic illness, and 1 in 10 people globally are battling a chronic autoimmune disorder. These illnesses are much more prevalent in industrialized nations, with 80% of autoimmune sufferers being women and minorities, and autoimmune disorders are the leading cause of death and disability for women in the 20-50 age demographic.

The good news is there is more data now than ever to help us begin to uncover casualties and cures, and there are millions of autoimmune sufferers who have taken to forums online to try and crowdsource to improve treatment outcomes, but right now these communities are fragmented and exist in places like Reddit, Instagram, and Facebook, where no one is mining this data for trends.

AI for Ai won the Women in AI hackathon for our proposal of a social network designed to support the millions with autoimmune disorders while providing critical data for scientists, healthcare providers, and pharmaceutical companies to pursue research and cures. Because of fragmented healthcare data and studies, many patients presently turn to online forums such as Facebook, Reddit, and even Clubhouse to share symptoms and seek medical advice.

Project Description

AI for Ai will allow patients to share their symptoms through surveys and forums, and support others affected by autoimmune disease. Users can upload images of their symptoms, images of treatments, text statuses, and opt in for what data they want to share with medical providers vs. members of the autoimmune community. Natural Language Processing (NLP) and Computer Vision (CV) consolidates this data and makes recommendations to healthcare providers and scientists on areas of exploration to track trends in disease progressions and causation. Additionally, AI for Ai will scrape medical journals like PubMed to further augment our datasets.

AI for Ai should be designed for use by two audiences: patients and providers. For patients, the app will act as a social support group, providing the ability to connect with and support other patients, track symptoms and disease progression, as well as connect directly with their providers and read up on new research and findings. Each patient will have a profile where they can select their particular autoimmune illness(es), upload pictures and text updates tracking their progression. Additionally there will be community chat features where patients can share this content with other patients.

The interface for providers (scientists, researchers, healthcare providers and pharmaceutical companies) will allow these users to access data scrapers and NLP-based AI systems to identify promising areas of exploration in identifying disease progressions, environmental and genetic triggers, treatments and eventually cures. AI for Ai will also scrapes and includes publicly available data, particularly scientific papers and reports on these specific autoimmune illnesses, from the NSF and NIH, which are the largest public funders of biomedical research worldwide. AI for Ai will use NLP and CV to analyze trends within these papers and compare them to patient data on the platform.

Patients, providers, scientists, and regulators create profiles and consent for the services provided by our platform individually. They can decide what information they want to be public on the platform (i.e. they can choose not to disclose their full legal name outside of verification purposes on the platform). All patients sign a Health Insurance Portability and Accountability Act (HIPAA) waiver to respect patient privacy. Additionally in order to be more compliant with the American with Disabilities Act and to be able to better assess casualties, we’d like to create functionality within the app for voice to text recording to be able to allow patients to speak freely the way they do on Clubhouse, particularly for patients with MS and other autoimmune conditions for which typing or using a smartphone is quite difficult.

Technologies and Other Constraints

Python, PyQt5, NLP, Computer Vision, GPT3, machine learning and cyber security. Open to a mobile-first web app or mobile approach. Alternatively, students can recommend technologies and architecture based on requirements.

Python / NLP / Computer Vision / Machine learning - Analysis to study macro-trends in data provided by users and pulled from scientific papers and reports.

PyQt5 - Time permitting it would be great to have a version of the app that is voice to text friendly for folks battling disabilities who may not be able to easily type their symptoms into the app but could describe it verbally.

GPT3 - Time permitting a chat-bot based survey rather than traditional form surveys would also help with usability.

Cybersecurity - We expect the system to be built with security in mind.

We have several dozen patients who have autoimmune illnesses that have offered to be early testers of the platform. Students are encouraged to conduct usability testing with these users. Because health data is highly sensitive and legally protected the students will be asked to sign NDAs.

Should the students choose to stay on with AI for Ai after this prototype project is complete, we’re happy to explore extending and expanding employment opportunities with the project or within the broader scope of #GoBeyond.

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Background

ICON provides innovative drug development solutions across all phases and therapeutic areas. ICON’s Cybersecurity Team is a small group of professionals that share a passion for the topic. Our team is excited to cooperate with NCSU students on the sixth project to further develop the RePlay Honeypots.

Background and Problem Statement

Honeypots are systems that behave like production systems but have less attack surface, less resource requirements, and are designed to capture information about how potential attackers interact with them. Their intent is to trick an adversary with network access to believe that they are a real system with potentially valuable information on it. The honeypot will log the attacker’s behavior to collect valuable information about the adversary and to enable defenders to detect the threat and gain an advantage against it.

Previous Senior Design teams have developed Replay Honeypots--a low-interaction production honeypot system that can be configured and deployed easily, remaining cost effective in large numbers. The system is architected around three main components: 1) a central management system that provides a way to monitor and control honeypots and serves as a central repository of data collected by each honeypot it controls; 2) a series of lightweight, secured honeypots that support various methods of deployment and will simulate network behavior of other systems; 3) a configuration generation tool that will ingest packet capture (PCAP) and Nmap scan outputs, and generates configuration files that honeypots use to determine how they should behave. The Project has recently been released as an Open Source Project on GitHub, and the team will actively contribute.

While this system has come a long way, there are still some areas for improvement:

- The bridge networking model, used currently, does just enough right now to allow the honeypots to be accessible via the host’s network. A better architecture for the honeypots would be for each to be independently attached to the same network as the host. This would allow the honeypots to behave independently of the host, and have complete control over their networking model and behavior. This would also make a sizable difference to an attacker’s perspective of the system. If all containers are attached to the network as independent devices, there is less of a sign that these are a docker swarm and thus deceptive devices.

- The current implementation of the honeypots gets a request, checks it against the request in the configuration, and determines if it passes the similarity threshold. If it does, the honeypot then responds with a predetermined byte sequence from the config. However, some packets might contain information such as timestamps or random values that would give the honeypot away on closer inspection. The previous team has made significant progress towards improving this issue.

Project Description

For this project, we would like the following enhancements to be implemented.

Central Management System

Here is where users create, view/monitor, and modify honeypot configurations. In Phase 6 the following features are requested:

Device Management / Monitoring:

- MACVLAN support: The system shall implement MACVLAN to deploy honeypots. This will allow the honeypots to be independently attached to the network rather than being deployed in a swarm.

Honeypots

The initial configuration of a honeypot is based on an Nmap scan of another system that the honeypot must attempt to replicate. With this input, the honeypot configures its simulated services so that an Nmap scan to the honeypot produces no detectable differences from the original system, while having as few attack surfaces as possible. In Phase 6 we would like to improve our honeypots as follows:

- The system shall have the ability to use a conventional device fingerprint to completely copy the network/interface of a target.

- The system shall provide the ability to use the captured data to modify the way the device builds packets, calculate fields in headers, and respond to specific traffic.

- The system shall provide the ability to deploy a honeytoken server

- The system should have a wide variety of honeytokens but at a minimum should include the ability to use email addresses, Word documents, PDF documents and API keys.

- The new functionality shall integrate with current Replay functionality.

- The system may use canarytokens as a basis.

Config Generation Tool

The Config Generation Tool is a standalone tool that will ingest packet capture of a system that has undergone a Nmap scan and the respective scan results and generate a configuration file for the Honeypots that can be imported. In Phase 6 the following features are requested:

- The system shall provide the ability to mimic operating systems and networking equipment using the newly requested fingerprint functionality.

- The system shall be resilient to handling large configurations.

- The previous two teams ran into some issues with adding large configs through the frontend interface. The system should be able to add large config files. If a config cannot be added, the server will reject it and the user will not be able to add/edit it.

Technologies and Other Constraints

Python3, NodeJS and VueJS are preferred development languages, and the TRIO library is recommended for multi-threading. Docker is currently used to easily manage and deploy honeypots and will remain the preferred solution for this phase. Nevertheless, all functionality of the Honeypot itself needs to be supported and tested on Linux without Dockers. CouchDB is the currently supported Database. Technology pivots are possible but require discussion with the Sponsors.

Each component of the system will be designed with large scale deployments (>100) in mind. Students should use best practices for the development of secure software and document in great detail. Students should also strive to design honeypots to be modular so that additional protocols can be introduced, and additional functionality can be created, e.g. RDP, SSH, SMB Honeypots, Honey Websites and active beaconing. This Project is published on GitHub and the Students will be required to follow GitHub processes and best practices.

Students working on this project will be most successful if they have a strong understanding of network principles such as the OSI Model. Prior successful participation in “CSC 405 – Computer Security” and “CSC 474 – Network Security” is recommended for this project. Previous experience with Nmap and OS configuration is an advantage.

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Background

Department Mission:

The North Carolina Department of Natural and Cultural Resources’ (DNCR) vision is to be the leader in using the state's natural and cultural resources to build the social, cultural, educational and economic future of North Carolina. Our mission is to improve quality of life by creating opportunities to experience excellence in the arts, history, libraries, and nature throughout North Carolina. The Department works to stimulate learning, inspire creativity, preserve the state's history, conserve the state's natural heritage, encourage recreation and cultural tourism, and promote economic development. Our goal is to promote equity and inclusion among our employees and our programming to reflect and celebrate our state's diverse population, culture, and history by expanding engagement with diverse individuals and communities. We encourage you to apply to become a part of our team.

Division Mission:

The North Carolina Division of Parks and Recreation exists to inspire all its citizens and visitors through conservation, recreation and education.

- Conservation: To conserve and protect representative examples of North Carolina's natural beauty, ecological features, recreational and cultural resources within the state parks system;

- Recreation: To provide and promote safe, healthy and enjoyable outdoor recreational opportunities throughout the state; and

- Education: To provide educational opportunities that promote stewardship of the state's natural and cultural heritage.

Data & Application Management Program

Serves to support the Division, sister agencies, and non-profits in web-based applications for various needs: personnel activity, Divisional financial transactions, field staff operations, facilities/equipment/land assets, planning/development/construction project management, incidents, natural resources, public education, etc. Using the data gathered from these applications, we assist program managers with reporting, data analytic, and public education needs.

Background and Problem Statement

The existing LAMP stack system was developed over the course of 25 years, with ad-hoc application development in a production only environment (mainly using PHP and MariaDBlanguages) to meet immediate business operational needs of the field staff. The legacy system is a production only [L]AMP stack without extraneous features, like environments for development, test, GIT, etc; if there are any features outside of PHP and MariaDB, this would need to be discovered, because we do not have documentation. The legacy system has gone through multiple ad hoc, quick migrations: from a classic MAMP, ZLinux, and now RHEL7. The legacy system is in dire need of upgrades to a more sustainable, future proof architecture and environment, based on industry standards, methods, and best practices, including integration of development and test environments. The current DPR applications support all aspects of NC-State Parks daily business functionality: from accounting/financial/budgetary, natural resource inventory and management, HR/personnel tracking; such system improvements will allow park staff to be more available to serve the public in their mission of conservation, recreation, and education needs.

Project Description

In a modern 21st century workplace, software application support for business functions is comprised of more than just our simple webpage and database interface. Our ideal solution would allow for a more flexible integration with other systems: IoT, other database systems, etc. The legacy system would need to be migrated and replicated, so regular operations may continue, from a 2 VM RHEL7 system [production internal and public servers] to a cloud, container OpenShift/RHEL8 GIT, dev, test, production system The envisioned cloud-based container system should allow us to continue to use our legacy systems and applications while transiting their integration over to the modern system. This would include a proper GIT system between development, test, and production, with branch push controls at each level. Our current internal and public facing systems are on two separate VM servers, but could be NATed in the container to still be separated out for security. This may require the integration of a SAML/SSO, shift from our current login method. Apart of the project, is our near future, 2024, will require a transition from RHEL7 to RHEL8, PHP5 to PHP7 (or whatever will be the latest version that NC-DIT will be supporting on a future system), and MariaDB 5 (with MyISAM) to 10 (with InnoDB). We foresee the need to eventually change our database from MariaDB to PostgreSQL, but are not set on this and open to suggestions. We are also integrating IoT devices for our visitation counts, facility maintenance monitors, and natural resource and fire drones; as well as, integrating with State Property Office, Department of Insurance, OSHR, OSBM,and vendor database systems: SAP-Hanna, Oracle, mainframe, SAP/DB2.

- Reservation system vendor uses a MS-SQL database and requires this for replication of dataset to us.

- Visitation counter vendor uses a MongoDB, but a JSON API can be utilized to replicate and insert the data into our MariaDB.

- We receive data in the form of files from other agencies or their vendor systems, appropriate accommodations for SFTP would need to be made in project.

- Packages, like ImageMagick and PDFLib, are utilized by applications in the php script files of the legacy system.

These needs have outgrown our current setup and have given us a glimpse into the pace of future needs. We are wanting to design a new system that allows for continued use of the legacy system and establishment of a system for the next generation. The legacy system will be modified accordingly to be migrated to the next generation system for continued use, until all developed applications can be migrated appropriately to be integrated into the next generation system.

Technologies and Other Constraints

Tools and assets are limited to what has been approved by the NC Division of Information Technology (NC-DIT). Most of the ‘usable’ constraints will be what is limited to NC-DPRs use through NC-DIT.

Cloud – suggested; AWS – suggested; Azure – possible

VMs – required; legacy system is built on them. Cloud/Container system could integrate, as necessary, to allow legacy system.

Containers – suggested; RedHat OpenShift – suggested; Podman – possible, but not likely available long term {Docker is currently not being offered/supported by NC-DIT; and we do not have an alternative method to support that system; this may change}

- Development system for developers (branches that can be utilized for individual modification and addition projects), specs for 10 simultaneous users

- Test system (for users to test requested developed applications; branches for individual projects); specs for 50 simultaneous users

- Production system; specs for 500 simultaneous users

- GIT push controls integrated throughout each level, with ability to modify workflow as needed, for hot fixes/patches.

- System backup methodology implemented. Integrated into replications on Dev and Test

GIT – required, as a methodology for version and branch control for merges and pushes; GITLab – suggested, as the final repository – other GIT repos used will need to be migrated/-able into GITLab

RHEL7 & 8 – required; RHEL7 {legacy system} needs to be ready for RHEL8 before 2024; container should handle both RHEL7 and RHEL8, with intention of RHEL8 being long term

PHP 5 & 7 – required; PHP5 is currently legacy system; some PHP7 readiness has been made, but full system and application check has not been done. There may be other PHP5 to 7 readiness steps that may need to be taken, that we have not done yet. PHP7 will be the

MariaDB5 & 10 – required; prepare legacy MariaDB5 databases and tables, uses the MyISAM database engine for MariaDB10, which uses InnoDB engine. User account controls, Indexing and increased speed of query processing

PostgreSQL – suggested; PostGIS – suggested

MS Server/SQL – suggested, for integration with vendor

JSON/MongoDB – suggested; for integration with vendor

SFTP/FTP – required; implementation method flexible; This is used to acquire and read data from other agencies and their vedor systems into the next generation systems databases. System would acquire file transfers from vendors/other agency sources for depositing, and we would read the data into our database

ImageMagick – Linux package – required; applications in legacy system utilize this; have experienced issues in the past, need to ensure it works on any new systems

PDFlib – required; applications in legacy system utilize this on both internal and public systems; need to ensure this will continue to work on any new systems with existing code files.

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Background

The Geohazards and Earthquake Engineering (GeoQuake) lab is a research lab in the Civil, Construction, and Environmental Engineering Department at NC State. The GeoQuake team investigates how soils and rocks increase the damaging potential of earthquake ground shaking to civil infrastructure. The GeoQuake team also strives to advance education and outreach programs for women and Latin American students, which is why we designed the Earthquake Engineering and Seismology Community Alliance in Latin America (E2SCALA) program. E2SCALA means to “climb” and also to “scale” in Spanish and those are key drivers of this project; to help Latin American students reach their highest potential (to climb), while building a network that grows with the community’s goals (to scale up).

Background and Problem Statement

Underrepresentation of Latin students in undergraduate and graduate programs in STEM remains a challenge. Addressing the fundamental causes for having less than 2% Hispanics joining the STEM workforce is of special interest to NC, which has one of the highest Latin American population growth rates in the country. Additionally, the disproportionate effects of earthquakes in Latin American countries (e.g., the 2010 Haiti, 2016 Ecuador, and 2020 Puerto Rico earthquakes) relative to similar magnitude events in Japan and New Zealand reveal the critical need for community alliances to connect and engage professionals and students in Latin America who have an interest in earthquake engineering and seismology.

The COVID-19 pandemic has taught us that it is possible to be globally connected using virtual platforms. While not ideal, new frontiers can now be explored in remote learning, but not without challenges in the design and development of global, open access, educational and community-driven virtual platforms.

Project Description

The E2SCALA initiative will provide global, open access to educational resources, virtual mentorship, and collaboration opportunities to Latin American students in the US and at partner academic institutions in Latin America. To launch this online community and collaboration platform, the following five tasks are envisioned:

Definition of users, roles and privileges

There will be four main types of users in the platform:

- Administrative team: Includes the PI, Dr. Ashly Cabas and the graduate students and postdocs in her team at NC State. The Administrative team adds/removes/edits general content in the platform, approves partners’ content, invite/reject new partners, approves/rejects working groups (example of working groups), and have access to surveys requesting feedback from the general membership, and other statistical metrics of the platform (e.g., number of members, number of working groups, number of posts, number of visits, etc.)

- Partners: collaborators in academic institutions and the industry in Latin America (or the US) who will lead working groups and mentor student ambassadors (see description below). Partners can add content to the platform after approval by the Administrative team. The general membership (see description below) can apply to different working groups and Partners approve/reject applications. Partners post outcomes/goals/meeting agenda/meeting minutes. Partners will have a roster or listserv for their working groups as well as a calendar to post upcoming meetings and other events (e.g., webinars, conference of interest, etc.). Partners form the executive committee of E2SCALA.

- Student ambassadors: Students/professional nominated by partners to participate in the mentoring program. Two nominations per partner will be allowed and the Administrative team will approve/reject applications. Applications will be sent by email to the Administrative team for review. Student ambassadors can also create and post content after approval by the Administrative team.

- General membership: Professionals and students around the world will be able to join this online community by filling out an interest and background form. No official approval is necessary at this point, but the Administrative team will review general members’ profiles and has the right to reject/remove individual from the general listserv/roster.

Creation of membership database

Profile/Account: allow first time users of the platform to create an account and provide personal information and technical interests (example of profiles, example of survey). Requested information includes name, affiliation, number of years after last degree earned (identification of early career professionals and students), expertise (via a list of options created by the Administrative team), and list of topics of interest (via a list of options created by the Administrative team).

Visualization of platform metrics:

Member distribution per country (example)

Members per working groups (example)

Membership Summary info (i.e., table with member’s name, affiliation, working group he/she is a member of, etc. example)

Listserv: emails of the general membership should be collected, and a listserv created.

Platform’s technical content:

Working groups: separate tabs (webpages?) per working group to post deliverables, meeting agendas, etc (example of working group webpages, example of working group webpage2)

About page: Summary of partners’ and student ambassadors’ profiles (example 1, example 2, example 3)

Events page: Calendar of events relevant to the E2SCALA community (example)

Resources page: Four types of resources will be provided on the platform, namely

- Recorded Interviews to experts via Zoom (created by student ambassadors and partners, approved by Administrative team).

- Training modules and lectures (developed by partners, approved by Administrative team).

- Webinars (free, hosted on zoom, led by partners, offered live to people that register, and recorded to be posted on the platform, example1, example2).

- E2SCALA Workbench: library of hyperlinks to open source software and Jupyter workflows (contributed by partners, approved by the Administrative team).

Assessment of community building

Formative evaluations/surveys (e.g., google forms created by the Administrative team) sent to the general membership, partners, and student ambassadors via email. Collection of results in a central repository where only the Administrative team has access to.

Platform Stats (number of members, number of working groups, posts, per region) that only the Administrative team has access to, but can update automatically in strategic places in the platform (e.g., the About page and Working groups page).

Feedback from members: Allow users to easily provide feedback to help us improve the platform. Create/Provide general email address for members to contact the Administrative team and provide and online form/forum with feature requests, or webinars requests.

Management of the platform

Create interface for straight-forward managing of the platform (e.g., editing/updating content, reviewing platform stats, managing working groups, accessing the membership roster/profiles, etc.) by a non-CSC student (e.g., a Civil Engineering student).

Technologies and Other Constraints

This project needs to be web-based. Technologies to use are flexible and the GeoQuake team is eager to learn what would provide more versatility to the project, while making sure its maintenance is sustainable. Educational resources such as hyperlinks to Jupyter workflows, open source software, videos such as recorded webinars and zoom interviews will be hosted in the platform. Moreover, E2SCALA should be an accessible and inclusive platform (e.g., https://wave.webaim.org/).

Sponsor Background

Dr. Rothermel is the Head of the Computer Science Department at NC State. In this position, he is called on to assess the performance of faculty members, and to compare the performance of our faculty with those at other institutions.

Background and Problem Statement

In any organization, management looks for ways to assess the performance of employees and to measure that performance against the performance of competitors. Assessing performance, however, is complicated and there are many different metrics that can be used. In general, any particular metric one might choose has both advantages and drawbacks. Thus, it’s better to have multiple metrics.

In academia, one class of employees whose performance must be assessed is tenured and tenure-track faculty (hereafter, “TT faculty”.) TT faculty are typically expected to engage in several types of activities: these include teaching, research, and service (e.g., service to their profession or to their department). Performance in each of these areas needs to be assessed. Where this project is concerned, however, we are interested solely in research performance.

Standard metrics used to assess a TT faculty member’s research performance include 1) numbers of peer reviewed scientific papers published, 2) numbers of grants received, 3) total expenditures of grant money on research activities, and 4) numbers of students mentored and graduated, among others. To see an example of “1” in action, go to “csrankings.org”, turn the toggle next to “All Areas” to “off”, and down below under “Systems”, check the box next to “Software Engineering”. You’ll see that NC State is ranked second in the USA for research in this area based solely on the numbers of publications (in a small set of top conferences) of its faculty members.

All of the foregoing metrics provide value, but they do not adequately capture one of the key attributes that motivates research in the first place: research is expected to have an impact – to result in some meaningful contribution to the world, such as addressing a problem found in that world. Faculty members can write papers all they want, but arguably, until their work results in something (directly or indirectly) tangible, it hasn’t had impact.

How do we measure the impact of research? One way is to assess the actual products of research (i.e. research that translates into practical processes or tools). This is helpful, but it doesn’t account for the fact that when research leads to practical results, it is often over a long time period in which early results are adopted by other researchers to produce intermediate results (possibly with many iterations), which ultimately are adopted by other researchers into something directly applicable to addressing some problem in the world.

An alternative metric that can help account for the impact of research can be found by considering the ways in which a piece of published research is cited in subsequent papers by other researchers. The degree to which a published work is cited helps track the degree to which other researchers found that work to be meaningful in the context of their own work, and as such, indicates a form of “impact”. Ultimately, chains of citations connect initial conceptual research contributions to the real-world applications that the initial contributions made possible. While also not a perfect measurement in and of itself, citation data is already widely collected and used and accepted as a useful measure of impact. For an example, go to google and type “Google Scholar Gregg Rothermel” and you can see citation information presented in a few ways. One citation-based metric is the “h-index”, and often you’ll find faculty noting their h-index in their resumes.

As a Department Head, I can easily compare and assess faculty in terms of their numbers of publications, their grants received, their students mentored. But these metrics don’t capture impact. Comparing faculty in terms of citations could help with that, but doing so is more difficult.

The goal of this work is to provide Department Heads with a tool that can be used to compare and assess the impacts of faculty research (both within their own department and across departments) in terms of citations that research has received.

Project Description

I have been able to assess citations using a manual process, but it takes too much time for me to apply this process broadly. The process uses data provided by Google Scholar. Initially I attempted to just scrape Google scholar pages (via cut/paste) obtain information on publications and their citations and place the resulting data into a spreadsheet. With extensive manipulation I could turn this into data that lists paper names, years of publication, and numbers of citations. Doing this for multiple TT faculty I could obtain data that can be compared, and I could then use tools for displaying data sets (e.g., boxplots that provide a view of the distributions of citation numbers per faculty member).

This turned out to be much more difficult than the foregoing paragraph makes evident. For one thing, there can be a lot of “noise” in Google Scholar pages. For some faculty, lists of papers associated with them in Google Scholar include numerous papers that those faculty are not even co-authors of! These had to be weeded out. Also, some papers listed in Google Scholar have not been refereed, have appeared only as preprints, or have appeared only in minor venues that should not be considered.

One potential solution to this problem was to take as input, for a given TT faculty member, a list of their refereed publications, such as is typically present in their CVs (Curriculum Vitae – the academic equivalent of resumes). This is what I ended up doing in my manual process. Given a CV and a list of publications, I search for those publications in Google Scholar and record the information on their dates and citations, and I place the relevant information into a spreadsheet. Then I repeat this for each faculty member being considered. Depending on what I want to visualize of the data, I arrange the data in the spreadsheet such that it allows me to obtain specific visualizations. For example, to obtain a figure providing boxplots presenting the distribution of citations for each TT faculty member among a group of faculty members, I create a spreadsheet in which each column lists a given faculty members’ citations, and use Excel’s boxplot tool on this spreadsheet.

This project needs to provide an automated method for doing the foregoing, but a method that “mimics” my manual process is not required – rather, a method that achieves the same results as that process is what we’re seeking.

The best way to describe what the proposed system needs to achieve is to first list a number of “queries” that I would like a system to be able to handle. Examples are:

- Compare the citations for a given set of faculty members over their entire careers.

- Compare the citations for a given set of faculty members over the past 10 years.

- Compare the citations for a given set of faculty members over the period 2005-2015.

- Compare the citations for the 20 most highly cited papers for each faculty member in a given set of faculty members over (some period).

- Compare the trajectories in citations (i.e., the changes in citation numbers over time) for a given set of faculty members over (some period). A trajectory could consist of numbers per year, or rolling averages.

We could find a way to characterize the set of queries to be supported more generally, using parameters.

A second component of the description involves the interpretation of the word “compare”. To date my primary “comparison” method is to obtain boxplots that let me visualize the data. So “compare” translates to “provide a figure containing a boxplot that shows the citations….”. Other results may be useful, however. For example, a “comparison of trajectories” suggests the use of line graphs that trace changes in numbers over time. Another useful method would be to calculate h-indexes over the given sets of publications being considered. We could explore other options, but to scope this project I suspect that identifying a small finite set would be appropriate.

Obviously, obtaining data and storing it in a manner that supports the types of queries required is a bit part of the project. Presumably the data would be stored in a database where it could be used to provide results for the queries. This could be performed by some front-end tool. Then a back-end tool would enable queries and visualizations.

As a Department Head, I would find a tool that facilitated the following comparisons useful in several ways. Here are some examples:

- To assess the relative impacts of the research of Full Professors within the CSC department at NC State, for use in annual evaluations.

- To assess the trajectories of the impacts of the research of Associate Professors seeking promotion to Full Professor in the CSC department.

- To assess the relative impacts of the research (or trajectories of those impacts) of a set of prospective faculty members who are applying for faculty positions with the CSC department.

- To assess the performance of CSC faculty in terms of impact, relative to the performance of other CSC departments that are thought of as comparable, or that are examples of what we “aspire” to as a department.

Technologies and Other Constraints

I do not have any suggestions for specific technologies to be used; I leave this up to the project team.

I do ask for a solution that can be utilized on common desktop PCs or laptops, without using any proprietary software that must be purchased. Minimally, a first version should function on a recent version of an iMac or MacBook.

I don’t require students to sign over IP for this, but I do expect that the Department of Computer Science at NC State is given the rights to use the system free of charge.

Sponsor Background

The NC State College of Veterinary Medicine is where compassion meets science. Its mission is to advance the veterinary profession and animal health through ongoing discovery and medical innovation; provide animals and their owners with extraordinary, compassionate medical care; and prepare the next generation of veterinarians and veterinary research scientists. With world-leading faculty and clinicians at the forefront of interdisciplinary biomedical innovation, conducting solution-driven research that defines the interconnections between animal and human health, the CVM is a national leader in veterinary research and education.

Background and Problem Statement

Medical best practices for diagnostics in many species (both human and nonhuman animals alike) involves the use of diagnostic blood work to help pinpoint which body systems are functioning properly or not. Laboratory testing of blood samples yields precise values of physiological systems and their functions across a range of measurements, which—for many species—are well documented and understood. At the push of a button lab technicians can generate a report that contains the values for the sample tested as well as a comparison to reference ranges for healthy individuals of the same species. As commonplace as this is for animals like dogs, cats, or horses, no such system is available for poultry (chicken, duck, turkey, etc.). The currently available system involves researchers entering results in a local database and calculating their own ranges, with no compilation of data from multiple farms or projects. At NCSU-CVM, all the test results from poultry blood are entered into an Access database hosted on a faculty members computer, queries are run to export results into MS Excel, and then an Excel add-on comprising macros to compute reference ranges is run to generate a report. The process is labor intensive, ripe for human error, and limits usability of the data due to lack of web-access.

Project Description

In order to facilitate a more useful tool for poultry bloodwork collection and analysis, we will develop a web-based application that allows researchers, practitioners, and owners to submit bloodwork results (with appropriate access controls/anonymization) and review reports that will provide normal ranges for the species and age group derived from compiled data from previous bloodwork results. When test results of an individual are entered, the system will compare the data entered for an individual to the expected value ranges, identifying values that fall outside of the calculated reference values. Bloodwork data can be entered manually, but the system should also support extracting/parsing bloodwork results from uploaded documents such as PDFs or text files. As a stretch goal, users should be able to submit photos of printouts containing bloodwork results and the system should use OCR to extract relevant values.

Students working on this project will be given an existing Access database and the existing Excel macros, and will work closely with Drs. Crespo and Roberts to design, implement, and test a web-application that facilitates collecting bloodwork results, calculating reference values, limiting views of data based on access controls, and generating professional PDF reports that can be shared back with owners and practitioners.

Technologies and Other Constraints

Students have complete flexibility in selecting the technologies to use, provided the output of the project is accessible via browser, generates PDF reports, and can reasonably be hosted on a mid-range desktop PC (before scaling).

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Background

SAS provides technology that is used around the world to transform data into intelligence. A key component of SAS technology is providing access to good, clean, curated data. The SAS Data Management business unit is responsible for helping users create standard, repeatable methods for integrating, improving, and enriching data. This Senior Design project is being sponsored by the SAS Data Management business unit to help users better leverage their data assets.

Background and Problem Statement

With the increased importance of big data, companies are storing or pointing to increasingly more data in their systems. A lot of that data goes unused because a lot of the data is unknown. A data curator can manually inspect a small number of datasets to identify the content, such as travel information or sales records. However, manual classification becomes difficult data as data sizes grow. Storage facilities for big data systems are often called data lakes. As data sizes grow, data lakes frequently become data swamps, where vast amounts of unknown, potentially interesting datasets remain untapped and unused.

Imagine if you could tap into people’s knowledge to help with data curation; to classify, vet, and validate data stored in a system. Doing this sort of curation is hard work though, so why would people bother to do it without some sort of reward to encourage them to participate?

Could you make this process more fun by adding gamification? Similar to websites such as https://genius.com/Genius-how-genius-works-annotated , people that participate in classifying and curating data could earn genius points, gain levels, and earn the recognition of their peers for contributing to the overall knowledge base of data. Your application would serve as a data catalog with pointers to many datasets that could be interesting and useful to people, and that has been curated by knowledgeable end users.

Project Description

In the fall 2021 Senior Design Course, students created the foundation of a javascript web application for gamification called “Data Genius”. They built the framework, but many important features are missing, and there is no mobile option for the app.

The goal of this project is twofold.

1. Create a mobile version of the application

Why mobile? Since “playing” the app involves engaging users to do a somewhat tedious task (curate a dataset) when they have some spare time to do that task, having a mobile version of the application will allow them to perform curation in a fun and engaging way when they have time to do so. This will allow them to increase their standing on the leaderboard and will encourage usage to get more curations completed.

2. Add to the web application important pieces that are missing to make it more engaging

The students did a great job setting up the foundation (logging in, creating storage, initial UI, establishing some dialogs to do the curation, setting up the basic leaderboard), but there are many important features missing that would make the application more compelling for users to engage. Think about the games you play yourself and bring some of those elements into this project. Here are some features that could be added, and you may think of others:

- Can you provide a “reward” when users curate that is instant so that they get positive feedback for their contribution immediately? Something similar to the visual elements you see in something like Wordscapes, where users that complete a puzzle get a “brilliance” sun that shines a little brighter when they complete their task. You may think of other ways to reward users immediately for their contribution. This has been shown to be very effective, from a social science standpoint, in encouraging users to continue to make contributions.

- Should you provide templates or standards to ensure consistency in how curators respond? Right now its very free form, and users can enter anything they want. When curating data with tags, the tags may have some standards, something like schema.org for data. Can you provide a way to choose from previous standards, but still allow people to add additional tags if they need to?

- Can you add some machine learning intelligence to suggest tags that are most popular or are most similar based on the data?

- How can you encourage people to contribute more than once? More than 5 times, 10 times, etc.

- How can you socialize the contributions so that people feel good about what they have done? Gain recognition from peers?

- How can you prevent spammers or non-constructive content from entering data into this socially curated catalog?

- How can you prevent invalid tags, bad words, or content that you don’t want in the catalog from being entered?

- Can you provide some visualization that provides insights into the data and the tags: how many datasets have tag1, how many have tag2, etc.

- Hook in a collaboration tool like Slack and allow collaborators to communicate and tag datasets with additional information or converse about the information in the catalog.

- Provide a method to socialize validation of the curation prior to it being added to the system (to prevent invalid content from being added)

These are just some features, and you probably can think of many more that will make the application much more compelling and useful.

Here is some additional previous project work that can be leveraged for this project:

- This project has a UI design available, including UX research, from SPRING 2021 - CSC 454 - Human-Computer Interaction - DataGenius: Gamification of Data Curation. You may use this UI design as part of your implementation. The results from the UX research study conducted by that team may prove especially helpful. If needed, SAS can provide a package that includes all of the artifacts from the class. There are some of the important screenshots from this project included below in the references section as well.

You may also want to review and evaluate other applications that do this sort of thing socialization of knowledge for ideas such as genious.com (mentioned above), wikipedia, kaggle, google dataset search, and others you may think of. There are some links below that describe the social science around gamification that you also may find helpful and interesting as you build this project.

This project has the following objectives:

- Create a mobile application to complement the web application for curating an index of data assets. Be sure the mobile and the web app link to the same datasource so that people can curate in the mobile version or the web version.

- Update a User Interface that leverages gamification principles to encourage users to curate the data referenced in the index

- Add additional and useful features to encourage users to classify and document the data,

- Optional: Test your application with various users to gauge how well your application encourages participation in the data curation task

Technologies and Other Constraints

- Javascript React frontend

- Python

- Storage - any preferred storage that the students can work with

- Mobile application toolkit (will have to research the options here) or Objective-C if you want to write this for an Apple device

- The data format of the input data to be curated can be assumed to be structured/tabular data (rows and columns), such as datasets in csv format (or like excel files).

- You can leverage other senior project work for suggestions

You will gain some knowledge from this project of machine learning principals. Some background in this area might be helpful, but not required to be successful with this project. Knowledge of Python and REST interfaces will be helpful. You may want to contribute your design into the open-source community. You should have some knowledge of Javascript and will be learning React. You will learn about mobile application development.

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Background

Wake Technical Community College (WTCC) is the largest community college in North Carolina, with annual enrollments exceeding 70,000 students. The pre-nursing program in the Life Sciences Department runs a two-course series on Anatomy and Physiology, where this project will be used, with enrollments exceeding 800 annually. Additionally, this project is expected to assist over 1,000 biology students when fully implemented.

Background and Problem Statement

Biology students as well as pre-nursing students need to understand how the body carries out and controls processes. Proteins have a diverse set of jobs inside cells of the body including enzymatic, signaling, transport, and structural roles. Each specific protein in the body has a particular function and that function depends on its 3D conformation. It makes sense then, that to alter the activities within cell or body, proteins change shape to change function. One important example of this is hemoglobin. Hemoglobin is a huge protein found inside red blood cells and its primary function is to carry oxygen and carbon dioxide to and from cells of the body, respectively. Structures inside hemoglobin bind to oxygen dynamically at the lungs and then release the oxygen at metabolically active tissues.

As a beginning biology or pre-nursing student this is a difficult process to imagine from a 2D image in the textbook, and we have worked to create a tool that helps visualize protein dynamics using augmented reality. In various iterations, the tool has supported the use of AR tags to change the environmental factors that influence protein structure and function, basic animation of structural changes of 3D protein structures, and the creation of structured activities to support educational use—although never all at the same time. Integrating and enabling all of these features, alongside several new ones to make the tool more suitable for online education, is the emphasis of this project.

Project Description

Supporting decentralized collaborative AR experiences for teams of students or students and instructors through the use of real-time video collaboration and recording, integrating animation features with the use of multiple AR tags, and connecting to the instructor’s assignment specification and grading views will be the main goals. As a stretch goal, integrating with Blackboard (WTCC’s LMS) to facilitate rosters and grading is also desirable.

The existing AR app uses Three.js, existing crystallographic information files (.cif) and parsers (LiteMol), and a DB backend for identity and content management. The .cif files are an open format where structured data describing molecular structure are encoded. The files contain information about the components of the molecules, their locations, and their relationships. There are three main implemented use cases:

- Instructors can specify protein molecules (e.g., hemoglobin, see Figure) using .cif files, define the environmental factors (e.g., temperature, oxygen, etc.) that impact protein structure, and set rules for triggering structural transitions.

- Students can visualize the 3D structure of proteins by placing an AR tag on surface, loading the web-app on their mobile device, and moving around the environment. They can further manipulate the simulated environment by applying environmental factors (either through UI controls or AR tags).

- Instructors can define “lessons” for students that prompt them to answer certain questions by investigating the 3D structure and submitting responses through the web-app (multiple choice, short answers, or submit a screenshot).

These three use cases are supported to a varying degree in different implementations of the app, and bringing all of those functionality under one app version is a primary goal. In addition, in-person collaboration is currently feasible through the use of AR tags and multiple devices. An additional use case to design for is to support remote collaboration among students or students and instructors.

- Multiple students or multiple students and instructors can collaboratively view a 3D structure, even if not co-located, and share the role of applying environmental manipulations to influence protein structure.

Technologies and Other Constraints

- This must be a web-based app with student AR views supported on mobile devices, and instructor/backend systems supported on desktop browsers.

- Three.js (existing, can be replaced)

- AR.js (existing, can be replaced)

- LiteMol (existing, can be replaced)

- Spring (existing)

- Gradle (existing)

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Background

Cisco (NASDAQ: CSCO) is the worldwide leader in technology that powers the Internet.

Our Purpose: To power an inclusive future for all.

Our Mission: To inspire new possibilities for our customers by reimagining their applications, securing their data, transforming their infrastructure, and empowering their teams.

Our Commitment: To drive the most trusted customer experience in the industry with our extraordinary people and great technologies.

CX Cloud, a brand-new platform for customer and partner technology lifecycle experiences. CX Cloud aligns Cisco technology solutions to customer needs. Currently, the CX Cloud platform performs network optimization with AI (Artificial Intelligence) based actionable insights, provides deployment best practices guidance, and maintains asset visibility. New tools, features, and functions can be introduced to customers through CX Cloud with minimum disruption to production environments.

Background and Problem Statement

“Virtual teams are like face-to-face teams on steroids – they are more cumbersome and provide more opportunities for team members to lose touch, become demotivated and feel isolated,” Brad Kirkman (Poole College) says. Dr. Kirkman spent time partnering with three companies – Cisco, Alcoa, and Sabre – to examine their virtual team operations and came away with three best practice areas virtual teams should focus on – leadership, structures, and communication.

Leadership - How a team is designed – which includes everything from who is included on the team, what their roles are, and which technologies and performance metrics are used. The practice of empowerment and shared leadership by allowing team members to take on some of the leadership responsibilities as they would typically oversee in a face-to-face setting – such as rotating team members’ responsibility for sending out the agenda, facilitating a meeting or taking notes in virtual meeting spaces.

Structures - Improving structures the team has in place to support healthy team processes – including coordination, collaboration, conflict management and decision making. Having a shared vision and language, and leaders who are regularly checking in on team members, is especially important for a diverse team.

Communication – What happens before, at the start of, at the end of and in-between meetings is most critical. Dr. Kirkman says. “I recommend leaders always take the first five minutes of any meeting for small talk – allow the team to chat, celebrate personal accomplishments and news and build that camaraderie that comes with being a team.”

To support Dr. Kirkman’s research and to support wellness of employees engaged in virtual teamwork, new software tools may be designed and created to provide employees the ability to feel more connected, motivated, and engaged. Dr. Kirkman’s research also provides focus on building a sense of psychological safety through asking for input early and often, making sure that virtual team members feel included and valued.

https://poole.ncsu.edu/thought-leadership/article/equipping-leaders-to-support-virtual-teams/

Project Description

Students will resume a Fall 2021 Semester project for software tools to assist employee wellness leveraging existing student design and software application to help measure employee’s self-reported wellness in key areas of leadership, structures, and communication to enhance collaboration, individual leader contributions, and communication to peers/management groups via AI personas.

Project design should extend methods for user-centered design, data collection, polling intervals, insight reporting, and wellness projections that provide participating user personas and their organizations to gain key insight areas for employee wellness and engagement.

Functional areas should allow participating users the ability to remain anonymous and combine their results with other participating users with trending, historical retrospectives, and future data projections to understand personal and team impact in key areas of leadership, structures, and communication as a foundation to employee wellness.

Added areas that the solution may explore can include:

- Areas of self-reported user feedback through anonymized summary data for indicators of employee's needs, concerns, and priorities

- Indicators/intelligent bots that leadership and management teams could leverage to improve meetings, teams, business goals, and employee wellness.

Software solutions should expand or redesign assessment indicators to support healthy team leadership, structures, and communications through standardized questions and measurement scale.

Example of indicator questions “I (was/was not) able to get my point across in my last meeting”, “I (love/loathe) tasks I need to complete from my last meeting”, “I felt (heard/unheard) in my last meeting”, “I felt that my last meeting was a great/good/poor/bad use of my time”, This meeting was “(productive/unproductive)”)).

Consider asking open ended questions in polls. (I.e., “I would keep doing ______”. “I would change ________”. “I value ____”, “I do not value _____”.

The goal is for users and leaders to gain deeper perspectives and help users to receive assistance/encouragement and build empathy to prevent burnout.

Technologies and Other Constraints

Software tools to aid employee’s wellness can leverage prior project code and data structures developed on AWS platform and should be accessible from web/mobile (to support use cases for hybrid work and team collaboration). Teams may also consider usage of API vs. Functions for reliability/extensibility. Team may also build employee, management, and virtual assistant profiles/personas for functional application testing.

Students should create a storyboard detailing a business organization, personas, and example interactions with the created software tools to help employee’s wellness or outcomes along with AI assisted personas to support employees seeking support resources. Students may solve for unidentified workplace issues/challenges and possible outcomes related to leadership, structures, and communication.

The Fall 2021 Senior Design Cisco project team built the current system using AWS EC2, MySQL, NGINX, Node.js, Express, JQuery and Bootstrap.

Constraints - No expected issues in development since users would be persona based. No expected application dependencies as a stand-alone app.

Students will be required to sign over IP to sponsors when the team is formed

Sponsor Background

Professors Price and Barnes @ NC State and Morrison @ UVA are working together to build tools and processes to help students do a better job solving computer science problems with programs.

Background and Problem Statement

Learning to write programs is a fundamental part of CS courses, but it can also be a primary source of frustration and discouragement for many students, with impacts on students' self-efficacy. Negative programming experiences may occur because students do not know where to start or how to decompose a complex problem into more manageable sub-tasks, because they have difficulty predicting how much time it will take to complete, or because they are unsure if they are making progress.

Our vision is to improve students' persistence, self-efficacy and learning in introductory CS1 and CS2 courses by providing tools that help students break a programming problem down into manageable sub-task tasks, and then solve those sub-tasks individually. This makes the problem more manageable, while also teaching decomposition. Our preliminary research suggests that this subgoal-based support can improve students' engagement and persistence in CS, and reduce failure rates.

Problem Statement: The problem is that novice students need help decomposing problems into plans for programs. We seek to build a tool that allows students to make plans at higher levels of abstraction than code, while still allowing students to execute their plans like a program. The senior design project should build a prototype tool that demonstrates the capability of performing problem decomposition, breaking a problem down into parts, specifying how the parts work together, and being able to execute the plan. Furthermore, the student should then be able to implement each part of the plan in code.

Project Description

The senior design team would build a usable planning tool, building on a prototype that Dr. Price has constructed, to assist novice programming students in breaking down programming problems into parts (i.e. "plan blocks"), and specifying how they work together to solve the problem.

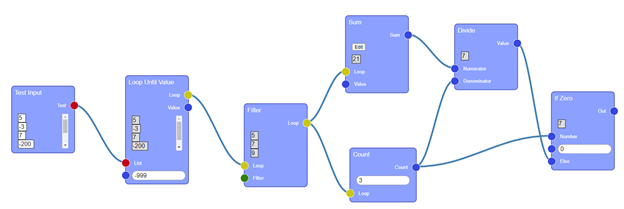

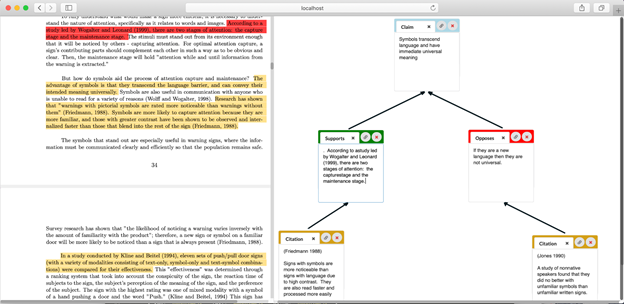

Above is a screenshot of the prototype planning tool, allowing students to select the core components of their program (blue blocks), define how they relate to each other (lines), and execute the plan with a test input.

Deliverables will include: a tool that students can use to plan solutions to example AP CSA problems and a set of documentation, consisting of video recordings of using the tool to solve each problem. Each video should demonstrate defining and connecting decomposed modules for solving each problem, specifying how the parts work together, including testing of individual modules as well as the overall problem solution, showing how each part is executable, and demonstrating how each part can be programmed and/or changed by students.

Features of the planning tool that the team will likely need to implement include:

- Additional features for the front-end:

- The ability for students to define and implement their own plan blocks, which can then be edited and used in other parts of the plan.

- An in-system textual code editor that supports Python, which can be used to write corresponding implementation code for each part of the plan.

- New plan blocks that support solving AP CSA problems from multiple years.

- A back-end, which supports:

- User accounts and logging-in.

- Saving and loading projects to a database.

- Logging/telemetry of student interactions with the system.

- A/B testing features that enable features to be enabled/disabled for studies.

- The ability to execute Python code in a sandbox to evaluate it (e.g. using existing libraries).

Technologies and Other Constraints

- The product must be web-based, written in Javascript or another web-based language.

- The current prototype uses Vue.js for the front-end, and this is suggested for this project.

- The project requires creating a back-end, and the technology for this is flexible.

- Student users must be able to write parts of programs in Python.

Sponsor Background

The Rumble Harvester project will be a co-sponsored effort between the Friday Institute and MCNC.

Friday Institute