Projects – Spring 2018

Click on a project to read its description.

Conversational Assistant for Portfolio Management

At CapitalOne, the Commercial Bank is a Top 10 U.S. bank providing banking services to business clients with annual revenue above $10MM. Comprised of more than 2,500 associates and more than 6,100 clients, Commercial Banking manages more than $70 billion in loan commitments and more than $50 billion in deposit balances, generating over $2.1 billion in annual revenue. The product and service groups within Commercial Bank include Capital Markets, Strategic Commercial Services, Commercial Business Risk Office, Commercial Operations, and Underwriting and Portfolio Management.

A crucial aspect of Portfolio Management is to better understand the metrics and risks associated with managing a diverse portfolio of loans, collaterals, and assets. The overall commercial portfolio is managed by a set of portfolio managers, which build relationships with their clients, and monitor the overall health and risk inherent in the portfolio. Considering this is lending to commercial clients, the risk to CapitalOne includes the client’s ability to generate revenue and the general health of the collateral underlying each loan. In addition, the portfolio managers are responsible for monitoring the commitments of performance made by our clients.

Background

This project builds on the Fall 2017 project that uses Amazon’s Echo Show to create a portfolio assistant for executives in the Commercial Bank. The existing project uses portfolio and rating data with elasticsearch to create an assistant to executives that includes portfolio managers up to the CEO of the Commercial Bank. The major feature of the assistant:

Conversational Assistant – Using elasticsearch technology along with the portfolio and ratings database allows the executives to ask questions about the portfolio. The students will be provided with a set of sample questions about the portfolio but the assistant should be developed to answer questions on a variety of topics.

For example:

- How many accounts does <person> manage?

- What is the risk rating for <borrower>?

- Who is our largest relationship?

About this project

In the Fall of 2017, the first iteration of the Conversational Assistant for Portfolio Management was developed by students at NC State as a part of their Capstone Project. This project is to continue that work to build the additional capabilities that were identified to move this forward as a viable product for the underwriters and portfolio managers at Capital One. There are technical and feature components to be completed during this semester:

Integration with Single Sign-On frameworks for authentication Natural Language Processing integration for more comfortable conversations Ability to view upcoming tasks to be completed by the Underwriter, sort of a “Dashboard” that is proactive

Natural Language Processing

Natural Language Processing (NLP) is available directly from Amazon using Lex or is available from third party libraries. Utilizing NLP over structured requests will allow the Conversational Assistant to be more conversational and a better utility for end users, enabling multiple pathways to get to the same data. Modifying the handful of existing queries and adding additional queries defined below in the Dashboard section that route through NLP-processors would provide strategic advantage over the current implementation.

Dashboard

The ability to see a visual representation of a portfolio of credits is incredibly beneficial when evaluating trends, monitoring health, or tracking action items. This information is all available within the PT database, and we feel there is potential to create a product similar to the Dashboard in PT within the visual virtual assistant.

Features:

- Ability to view deal team

- Ability to initiate a phone call from deal team page

- View trends of the credits you / your team manage

- Balance & Exposure

- Expected Loss

- Financial Trend

- Key Metric Trend

CapitalOne Support

The students will be provided with flat files which contain sample data used in CapitalOne’s proprietary and very cool Portfolio Management application, along with a data dictionary of those files. The files will be easily bulk copied into any standard database. In addition, CapitalOne associates will provide support at the beginning of the project for a project overview, and in creating the CI/CD pipeline, the initial AWS / Docker setup, and assistance in the software tool selection. CapitalOne will also provide each student with an Amazon Echo Show for the duration of the project. In addition, CapitalOne Associates will provide support and guidance throughout the project in person at the NC State campus at least one day every other week.

Architecture

The project is built using the AWS cloud and a true CI/CD pipeline. It is also suggested that the solution design include a true micro-service architecture. The technologies behind the application, service, and database stacks are at the discretion of the student, however leveraging React / Angular, NodeJS, AWS ElasticSearch, AWS CodeDeploy/CodeCommit/CodePipeline, and AWS RDS PostgreSQL are encouraged.

Students will be required to sign over IP to Sponsor when team is formed.

Eureka – Digital investing & financial planning platform driven by social network

About Capital One Wealth and Asset Management

At CapitalOne, the Commercial Bank is a Top 10 U.S. bank providing banking services to business clients with annual revenue above $10MM. Comprised of more than 2,500 associates and more than 6,100 clients, Commercial Banking manages more than $70 billion in loan commitments and more than $50 billion in deposit balances, generating over $2.1 billion in annual revenue. The product and service groups within Commercial Bank include Capital Markets, Strategic Commercial Services, Commercial Business Risk Office, Commercial Operations, and Underwriting and Portfolio Management.

Capital One Wealth and Asset Management (WAM) has over 80 years of experience in wealth and asset management, including its predecessors. WAM has over $10 billion in assets under administration and $4 billion in assets under management.

What WAM is Trying to Solve

Lack of trust: Investors (especially retail) are perplexed by investing and financial jargon. After the financial crisis, the lack of trust in financial advisors and lack of transparency in performance is affecting their participation in the markets.

Goal driven vs portfolio driven approach: While financial advisors discuss goals in portfolio objectives, their approach is portfolio driven to meet goals. WAM seeks to upend this by anchoring goals as the main factor. Investors should be able to buy and sell portfolios customized towards each goal rather than stocks or bonds customized to portfolios. Goals will not be geared towards investments alone but will be geared towards over the total asset-liability base.

Financial Social network to facilitate awareness: A social network where individual stocks or bonds are researched through crowd intelligence with analytics to support accuracy and other parameters.

Financial literacy: Promote financial literacy by tracking spending, savings, investing activities and introducing budgeting, financial alternatives and planning. Gamification or incentives can be used towards attaining these goals.

Project Eureka:

Eureka seeks to change investing for good by creating a different paradigm:

Create goals focused not only on investing but on total asset liability base and track it to optimize spending patterns and investing performance to achieve goals.

Create transparency in investing through a social network where investors are able to see other goal-based, risk-adjusted portfolios and their performance. If necessary, investors can invest in other such portfolios to achieve their goals.

Participate in and adapt to a rich suite of crowd-based research (using the social network) supported by machine learning analytics

The financial social network facilitates both crowd-based research and transparency by creating a digital marketplace for investment products which are driven by community and expert participation

Machine learning, feature rich UI will be key to this project. This project will broken down to tangible sub-set for the students to implement.

Suggested Technologies

It is suggested that the project is built using the AWS cloud and a true CI/CD pipeline. The technologies behind the application, service, and database stacks are at the discretion of the student, however leveraging React / Angular, NodeJS, AWS CodeDeploy/CodeCommit/CodePipeline, and AWS RDS PostgreSQL are encouraged.

CapitalOne Support

Capital One will provide a mock UI prototype which will explain the workflow and features as a high level overview. Capital One will provide any necessary data and work with the student to help them understand the tool and the APIs. Representatives for this project from CapitalOne will be available for the students at least once a week (or more as needed) through phone calls and meet the students twice a month to help the students.

Company Background

Capital One Financial Corporation, a Delaware corporation, established in 1994 and headquartered in McLean, Virginia, is a diversified financial services holding company with banking and non-banking subsidiaries. Capital One Financial Corporation and its subsidiaries offer a broad array of financial products and services to consumers, small businesses and commercial clients through branches, the internet and other distribution channels.

Students will be required to sign over IP to Sponsor when team is formed.

Team Resource Manager

Overview

The Trading and Fuels team at Duke Energy supports over 100 applications and is responsible for several delivery projects. Our team members need to be carefully balanced between supporting production applications and project assignments. Failure to do so could lead to production issues going unresolved, or projects not completed. The team manager regularly receives requests from project managers to assign a team member to a new project. These resource requests can easily be lost in email. Additionally, updating a shared spreadsheet can be a painful experience. Spreadsheet updates are often lost or overwritten. The team needs a system that will easily track requests, organize them, and efficiently allocate resources to projects and/or support work.

Requirements

The system will maintain a database of resources (Trading and Fuels team personnel) that are allocated to projects and production support. It will track skillsets of individuals, their typical role and what work (projects and/or support) that they are allocated to. Their work assignments should be tracked over time. There should be breakdowns of work assignments, individual assignments, and summaries.

The system must maintain the capacity of individual team members. The capacity reflects the percentage of time an individual is available to work. This is expressed as a decimal from 0 to 1; 1 indicates that the team member is 100% available to work on team related activities. In the example below, Frazier and Doeren are both 100% available. From January through March, Smith and Keatts are only 50% and 75% available to the team, respectively (this could be due to vacation, outside assignments, etc). Keatts’ capacity increases to 100% in April. This screen would be maintained by the team manager.

| Skill Set | Resource Name | Jan-18 | Feb-18 | Mar-18 | Apr-18 |

|---|---|---|---|---|---|

| DB | Frazier, Tom | 1.00 | 1.00 | 1.00 | 1.00 |

| C# | Smith, Joe | 0.50 | 0.50 | 0.50 | 0.50 |

| Java | Keatts, Kevin | 0.75 | 0.75 | 0.75 | 1.00 |

| MRS Reports | Doeren, Dave | 1.00 | 1.00 | 1.00 | 1.00 |

Individuals will be allocated to assignments, as below. These assignments could be either support activities or projects. This screen should show all allocations and be available for editing by the team manager. The units below are hours. 160 hours in one month is considered full-time.

| Resource | Jan | Feb | Mar | |

|---|---|---|---|---|

| Project - Web Trading App | Total FTE | 1.38 | 1.38 | 1.38 |

| Project - Web Trading App | Frazier | 40 | 40 | 40 |

| Project - Web Trading App | Keatts | 120 | 120 | 120 |

| Project - Web Trading App | Smith | 60 | 60 | 60 |

| Coal Purchasing App | Total FTE | 1.81 | 1.81 | 1.81 |

| Project - Coal Purchasing App | Frazier | 80 | 80 | 80 |

| Project - Coal Purchasing App | Keatts | 50 | 50 | 100 |

| Project - Coal Purchasing App | Smith | 160 | 160 | 160 |

| Support | Total FTE | 0.69 | 0.69 | 0.69 |

|---|---|---|---|---|

| Support | Frazier | 20 | 20 | 20 |

| Support | Doeren | 30 | 30 | 30 |

| Support | Smith | 60 | 60 | 60 |

The following screenshot shows team member allocations, based on capacity and assignments. Frazier and Smith are fully allocated in April. Frazier is 20% over-allocated in May, but 20% under-allocated in June. To extend the example, assume Frazier’s capacity was set to 1 in April and May. The allocation of 1.00 in April indicates that he has been assigned 160 hours of project and support work. The allocation of 1.2 in May indicates that he has been assigned 192 hours of work (and the field shows up in red, indicating a review is needed). For Smith, assume his capacity was set to 0.5 in April. In that case, the allocation of 1.00 indicates that he was assigned 80 hours of work. He too is considered fully allocated, because his capacity is half of Frazier’s. If Keatts’ capacity was 1.0 in April, then his allocation of .5 indicates that he too was assigned 80 hours of work.

| Skill Set | Resource | Apr-18 | May-18 | Jun-18 |

|---|---|---|---|---|

| DB | Frazier, Tom | 1.00 | 1.20 | 0.80 | C# | Smith, Joe | 1.00 | 0.80 | 0.00 | Java | Keatts, Kevin | 0.50 | 0.50 | 1.00 |

The basic flow described above will assist the scheduler in assigning team members to work and make sure that they are fully allocated.

In addition, there should be a way for project managers to request resources for projects. PMs should have the ability to log in to the system, enter new projects and submit requests for team members. They should be able to request individuals by name (Tom Frazier/Kevin Keatts) or by skill set (DBA, C# developer, etc). Requests will have a priority. The team manager will match requests with available resources.

Screens should be available to maintain team members, skill sets and projects, as well as providing user access to the tool. These screens would be edited by an application administrator.

A stretch goal will be for the system to intelligently assign individuals to projects based on request priority, resource need and availability. A basic algorithm should be created for making assignments. This “intelligent scheduling” could be kicked off at any time by the team manager in lieu of making manual assignments.

Application Roles

The following security roles should be set up in the application:

Project Manager: Can enter new projects and submit requests for resources.

Administrator: Can update all administrative screens: resources, projects, resource capacities, skill sets.

Team Manager: Has ability to do all things in the application, i.e. everything available to Project Managers and Administrators plus the ability to assign resources to projects/support and add new users to the system.

Technology

The application should be available on mobile devices. It can either be done as a native or web application. A relational database should be behind the front end.

Documentation

Full documentation of the system is required. This includes:

Source code documentation

A User’s Guide, in the form of a Word Document, fully describing the features of the system. This is to be a “how to” guide for the users.

A Technical Guide, in the form of a Word Document, that describes the architecture and major components of the system from a technical standpoint. The intended audience will be software developers who will be providing support for the application in the future.

LecturePulse

Background & Problem Statement

Educational technology in recent years has been the central figure in significant efforts by technologists to modernize the classroom. Personalized learning and educational technology have significant potential to revamp the classroom; in fact, studies have shown that individualized learning can lead to two standard deviations of performance improvement in an average (50th percentile test scores) student (Bloom, 1984). The heavy demand for ed tech innovation is heavy shows this potential. Y Combinator, one of the world’s most successful startup incubators, lists education as a top-priority startup category (Lien, 2017). Mark Zuckerberg recently wrote in an article regarding his foundation’s long-term goals that ed tech will be a top long-term priority, stating that “most economic issues today can be solved for the next generation by dramatically improving our education system” (Zuckerberg, 2017).

After attending many years of lectures ourselves, we have noticed two issues in the university lecture setting, issues which we believe can be solved with ed tech.

Problem #1: It’s hard for teachers to gauge, at scale and in real-time, students’ understanding during lectures.

Existing solutions to this problem include reading students’ facial expressions, explicitly asking, and conducting in-class polls. However, there are drawbacks to each: reading facial expressions and explicitly asking often yield blank stares and silence, and polls require setup and a physical device. A common problem among all of these options is that none are particularly scalable. Students have been shown to be influenced by social conformity when asked to speak up (Stowell & Nelson, 2007), or remaining silent due to social anxiety. Research has shown that electronic audience response systems correlate with higher class participation, feedback, and greater feelings of positive emotion during lectures (Stowell & Nelson, 2007).

Problem #2: Active & personalized learning techniques are not easy to adopt, and thus are not widely used.

There are many techniques and technologies that exist which attempt to bring active learning and personalized learning into the classroom; however, active learning in the classroom has not achieved the widespread adoption that it could.Though this use case is an already fairly established example of active learning (many NCSU professors use polling solutions such as Clickers from Turing Technology), it is only one technique, and it doesn’t always work well. Some professors at NCSU find it complex, too expensive for students, or that it often doesn’t “just work”.

Why haven’t more comprehensive active and personalize learning products come into use? There are a few active and personalized learning software solutions in existence, such as echo360, which seem to have a very compelling offering for active learning in higher education lecture environments. Echo360 however requires significant buy-in from the instructor; delivering a lecture essentially turns into using the echo360 product, and lecturers may not be willing to commit to such a radical change in lecture delivery. There are also a plethora of K12 educational platforms which have some active and personalized learning features, but are not geared to the university setting. However, none of the existing technologies we are aware of focus on active learning and personalized education as well as we believe we can, based on our comprehensive and novel feature set.

Low-tech solutions have limitations as well. One real-time low-tech solution to problem #1 involves periodically holding up red/yellow/green cards during lecture to indicate understanding. However, this lacks the leverage of data which an electronic version holds, and carries the social barrier of students having to broadcast to the class that they are struggling. Physically handing out “minute papers” and “muddiest topics” surveys is something else to print, carry around, and retrospectively keep track of, and cannot be associated with student accounts.

To solve these issues, we propose a “lecture delivery” software platform, which will at its core (a) help students give feedback on their level of understanding during lecture in real time, and (b) enable lecturers to effortlessly and seamlessly integrate active and personalized learning techniques into their teaching workflow. These two focuses combined contribute toward LecturePulse’s goal of helping lecturers deliver more engaging, interactive, and therefore effective lectures to improve student learning outcomes.

Product Vision

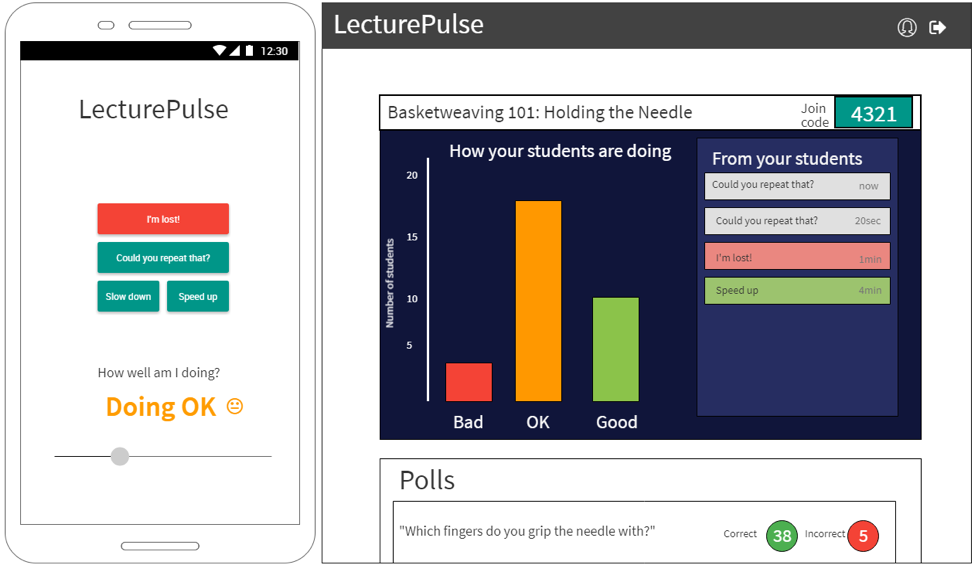

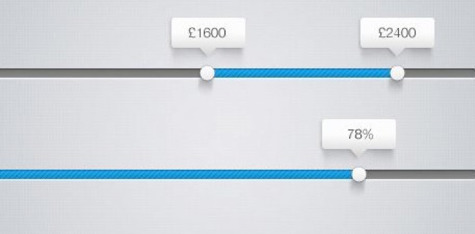

The platform will be composed of two parts: a mobile app with which students can provide feedback (left), and a dashboard for lecturers to glance at periodically while lecturing (right). A mockup is shown below.

Here’s how it works. First, the lecturer comes into class, logs in to the LecturePulse dashboard on the computer at the front of the class, and creates a lecture (“Basketweaving 101: Holding the Needle” in the example mockup). Each lecture has a “join code”, and the lecturer writes this on the board (4321 in the example mockup). Students, as they come into class, enter the code into the LecturePulse app and see the screen on the left in the mockup. At this point, the lecture is ready to begin.

As the lecture progresses, students adjust the slider at the bottom to indicate how well they understand (Doing OK in the example). A histogram of slider values is displayed on the dashboard. If the professor notices a significant leftward trend in the histogram, he/she can use that knowledge to slow down and re-explain a concept. Students also can click “message buttons” to communicate with the professor. In the mockup, there are two “Could you repeat that?” messages, one “I’m lost!”, and one “Speed up” message.

The lecturer can also conduct polls. Before or during the lecture, the professor can create a poll question and then click a “Start poll” button when ready to conduct the poll. Results will be gathered and displayed either at the end of the poll or later on at the lecturer’s discretion.

Sources

Bloom, B. S. (1984). The 2 Sigma Problem: The Search for Methods of Group Instruction as Effective as One-to-One Tutoring. Educational Researcher, 13(6), 4-16.

Lien, K. (2017, September 19). Request for Education Startups. Retrieved December 10, 2017, from http://blog.ycombinator.com/request-for-education-startups/

Stowell, J & Nelson, J. Benefits of Electronic Audience Response Systems on Student Participation, Learning, and Emotion. Teaching of Psychology, 34 (4), 253-258.

Zuckerberg, M. (2017, December 13). Lessons in Philanthropy 2017. Retrieved December 13, 2017, from https://www.facebook.com/notes/mark-zuckerberg/10155543109576634/

Machine-Assisted Data Mining

A tremendous amount of data is published online every day. With the “open government” and “open science” movements, we can expect this to only increase. Unfortunately, a lot of published data is not easy to use. There are two common challenges:

There are many “standard” ways to format common items like dates, times, and geo-spatial coordinates. Usually a data set will use one format consistently, but often this format is not specified. A person using the data must then visually identify the format (e.g.

"1 Jan 2018"as"dd month yyyy") and then write or find a parser for it.Often, a single field in a CSV, JSON, XML, or other structure is actually an encoding of several pieces of information. For example, in a U.S. government data set on chronic disease occurrence, there is a field that contains, e.g. “Prevalence of chronic kidney disease among adults aged >= 18 years”. This field is probably meant to be used as a description, but it contains the relationship “aged >= 18 years”. A person using the data may need to extract the variable (e.g.

"aged","located", etc.), the relation (e.g.">=","in","near"), and the value (e.g."18 years","Nebraska","San Francisco").

A promising way to address both of the challenges above is to deploy an extensible arsenal of parsers. Rosie Pattern Language (http://tiny.cc/rosie) is a pattern matching system that extends the idea of regular expressions, and includes a library of pre-defined patterns that match a variety of standard formats. RPL can be used as a command-line utility or within a program (Python, C, and some other languages are supported).

To address challenge #1 (above), a program (written as part of your project) could try all of the standard patterns in the RPL library to determine which standard formats are being used in a given data set. User confirmation may be needed when there are several patterns that match, and the user may need to create a new pattern when no existing pattern matches.

To address challenge #2, your solution could first apply basic patterns that match numbers, symbols, and words to a data field to recognize some field constituents. Ideally, your solution will then be able to identify cases in which there is a variable, a relation, and a value, and present this to the user. Then, the user can either confirm that the program identified these fields properly, or make corrections.

Your solutions to challenges #1 and #2 will extract sample data rows from a data set, and after interacting with the user, should be able to successfully parse the complete data set.

A good demonstration would be to process some of the files available from https://www.data.gov/, for example:

| Data source | Example entry (simplified for presentation) |

|---|---|

| U.S. Chronic Disease Indicators | 2015,NC,North Carolina,BRFSS,Chronic Kidney Disease,Prevalence of chronic kidney disease among adults aged >= 18 years,,%,“Black, non-Hispanic”,“(35.466220975000454, -79.15925046299964)” |

| U.S. Local Climate Data | WBAN:94846,CHICAGO OHARE INTERNATIONAL AIRPORT IL US,201.8,41.995,-87.9336,2014-03-01 00:51,FM-15,SCT:04 70 OVC:08 90,10.00 |

The ideal demonstration of the project would be something like this:

A selected data file is processed by your solution, and is then parseable. Your solution shows the user a list of the data fields and their types ( e.g. year, geo-location, description, relation).

The user enters some keywords (“chronic”, “kidney”) that must match one of the fields (e.g. description).

The user is presented with the relationships found in the data, and they choose a variable, a relation, and a value, e.g. (“aged > 60 years”).

All records (“rows”) matching the keywords and the relationship are extracted.

If the data set contains geolocations, the location of each row is shown on a map (using an existing mapping API, e.g. from google).

RPL Doc Builder & Explorer (and More)

The ease of use of open source projects varies greatly. Some projects are really easy to install, and have great documentation, but most do not. Rosie Pattern Language (http://tiny.cc/rosie) aspires to be easy to get started with, but needs your help to fulfill this vision.

Rosie extends the concept of regular expressions for pattern matching to include libraries of named patterns. Libraries can be created by Rosie users and shared online. There is also a set of standard libraries that are bundled with Rosie. Therefore, documentation for Rosie must include not only the RPL language, but also the libraries.

The RPL documentation is already written (in markdown, convertible to html). But the libraries of RPL patterns are not documented, and neither are the libraries written by users. Fortunately, the information needed to create the documentation automatically is in each RPL file: dependencies on other libraries, pattern names and comments, and examples (unit tests) of strings that the patterns will match and won’t match.

In this project, you will design and implement (1) an automatic RPL document generator, (2) a document explorer, and optionally (3) a browser plugin that lets the user browse RPL files online (e.g. GitHub) or on the file system, with documentation created on the fly and displayed for the user to read.

Here are some considerations regarding each component:

(1) Doc generator (for RPL files)

Should documentation be created directly, or should there be a (simple) data format produced first? I.e. should there be a front end which processes RPL files, and a separate back-end that produces html?

How can the documentation files be kept up to date, when the source RPL files may change?

Can the html output be used statically (without a web server)?

Should the generated documentation integrate with the RPL language documentation in a shallow way (just a link) or in a deeper way (e.g. links for each of the “RPL language features used on this page”)?

Does RPL need a convention for including a “docstring”, i.e. a comment that describes a pattern or a library (package) of patterns? What would it look like if this were included?

(2) Explorer

As a Rosie user, I want to find things in the RPL libraries that I have downloaded. If I enter some keywords, can I see all the RPL package names and pattern names that contain those words? Suppose a keyword is present in a literal string, or in a comment in an RPL file?

Should there be a CLI? If the Rosie user is working at a terminal, can they explore the documentation that way? Can html links in the output be used to direct the user to more complete documentation?

Should there be a web UI? How can it include both the generated documentation from (1) above, plus the ability to search for keywords, and to browse dependencies?

(3) (Optional) Dynamic doc generator/browser

Is a browser plug-in the right implementation? Would a web application be better?

What is the right user experience when the user is browsing, say, github, and they run across some RPL files? Should your solution recognize this and automatically generate documentation for those files? Should it instead add a button that allows the user to request this?

Should the generated documentation appear in a pop-up window? A split window? Some other, more integrated way?

Data Viz-Insight Engine

As information becomes more plentiful and valuable, the feedback on that data is crucial to sorting through the noise and finding the best parts. The same info could be positive or negative depending on various factors such as the viewer’s context or other geographic and business conditions. Putting all this information together and allowing customers access to the results can be a daunting task and consume many days of manual work.

Ipreo & Information

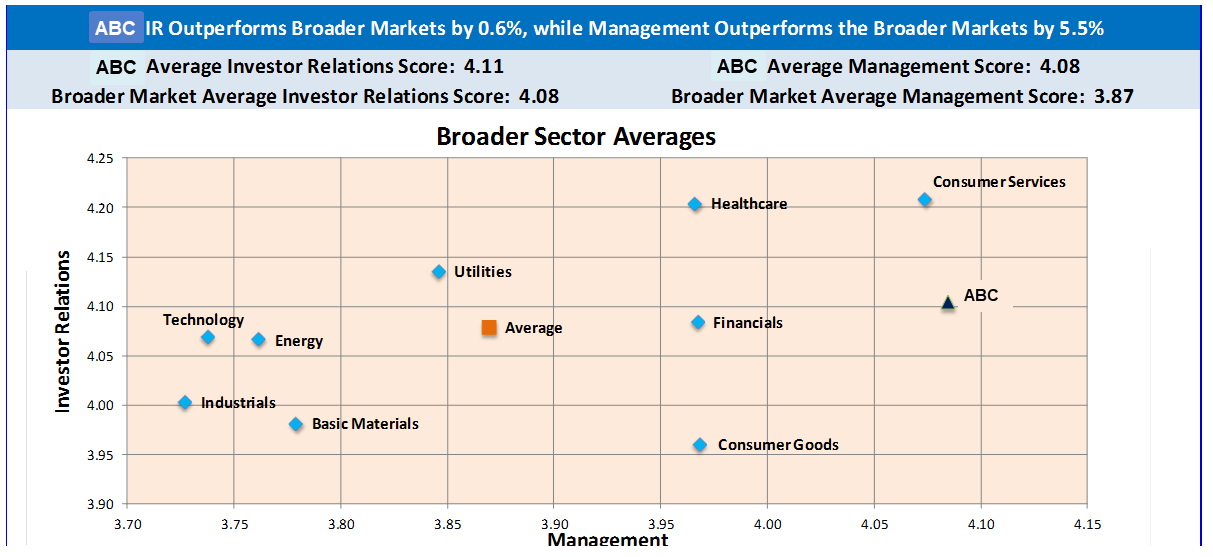

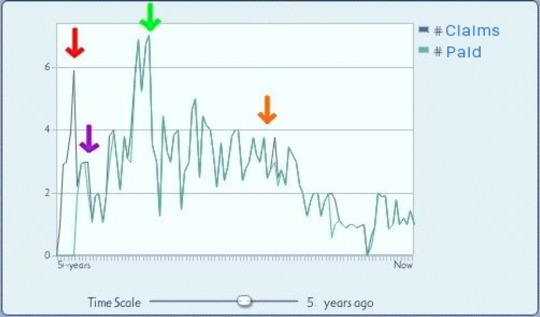

Ipreo is always looking for ways to improve the relationships between buy and sell side clients (for example, by understanding what drives their investing decisions using perception studies (https://ipreo.com/perception-studies/)). A large part of that work is to quickly visualize the feedback provided on the companies and information Ipreo has surveyed on behalf of our clients. Once available, the information needs to be explorable so they can find the core nugget or key point in what makes that data point special.

This Semester’s Assignment

We are looking for a group of creative students who can help design and develop a user-centric analytics tool to help Ipreo’s clients evaluate and review their data on their investors’ concerns as identified from perception studies. The ideal solution would provide for insightful data review and allow ah-ha moments for the client teams. The solution would embedded with our current offerings (see screenshot below) while allowing more exploration of the data and be extendable by future teams.

NCSU Student Experience

Senior Design students in the College of Engineering, Department of Computer Science and design professionals at Ipreo will have a unique opportunity to partner together over the course of the semester to develop a data visualization & insight engine for Ipreo, as they would experience in real world product development. Additionally, students will have access to top industry professionals to assist in Agile/Scrum practices, overall developing/coding and testing.

Students may be required to sign over IP to Sponsor when team is formed.

Computer Vision API, Handwritten OCR

Background

With advancements in artificial intelligence, we are seeing waves of new services offered by large and small cloud services providers. Digital documents, images and handwritten text are captured, analyzed, and predicted like never before with “Vision APIs” on the market. Though, while Optical Character Recognition (OCR) has been an established field in computer engineering for many years, some aspects were never fully solved until now.

LabCorp® is looking to sponsor a project for a handwriting and vision API, which allows to submit a handwritten note (from thousands of different users and/or systems) and images to identify the content, validate the content against several services like dictionaries, healthcare specific nomenclature, address validation services and much more. The project will include the research of strengths and weaknesses of publicly available services, the creation of service APIs to be consumed in real-time, and a user interface to submit and edit the outcome. Depending on the progress of this project, the service API should be able to learn from the outcome and enhance future Vision API requests.

Expectations and Goals

The student development team will engage on a regularly set schedule with designated mentors from LabCorp®. LabCorp® will provide guidance about enterprise scale architecture and technologies, but leave the students engineering creativity a focus point of the project. We will encourage and maintain an ongoing dialogue with the team about how modern research from the students can enhance existing approaches.

The outcome of the project should create a demonstrable solution, which allows the submission of a digital handwritten image and machine written document through a service interface. The documents will be translated in real-time and the service will return the identified content, the validated state of the content, and other meta data important for consuming systems to use the outcome. The idea behind this service is to enhance medical outcome and testing by reading handwritten diagnosis codes from physicians or other healthcare providers. The ultimate goal is to have information technology have a direct impact on millions of lives daily.

Skills, Technologies, Constraints

The preferred programming languages are Java and JavaScript for the service API. Input and output format should be JSON based. The service has to fulfill stateless and RESTful standards. The API layer can be created as a server based application, which is performant and can scale, or be a serverless implementation with the Serverless Framework (https://github.com/serverless/serverless).

About LabCorp®

Did you know that the largest healthcare diagnostics company is right in your backyard? Based out of Burlington, North Carolina, LabCorp® has a lot of different research and development centers around the country including the Research Triangle Park (RTP) in North Carolina.

Laboratory Corporation of America® Holdings (NYSE: LH), an S&P 500 company, is the world’s leading healthcare diagnostics company, providing comprehensive clinical laboratory and end-to-end drug development services. With a mission to improve health and improve lives, LabCorp delivers world-class diagnostic solutions, brings innovative medicines to patients faster and develops technology-enabled solutions to change the way care is provided. With net revenue in excess of $10 billion in 2017, LabCorp’s 57,000 employees serve clients in 60 countries. To learn more about LabCorp, visit www.labcorp.com, and to learn more about Covance Drug Development, visit www.covance.com.

Students will be required to sign over IP to Sponsor when team is formed.

Synthetic Data Generation (SDG)

Who We Are

PC Core Technology is a technology team in Raleigh, NC that’s part of Schwab Technology Services in Charles Schwab. They build comprehensive technology offerings for end investors and for financial advisors to help them manage their business and their client’s investments. This team develops applications for desktop, web, and mobile platforms that provide industrial strength solutions using technologies and frameworks such as C#, Mongo, SQL, C++, HTML5, Angular.JS, JSON, and REST. We concentrate on the fundamentals of architecture, design, development, and quality assurance to build applications that stand the test of time which is critical in the financial industry.

What We Are Looking for In the 2017 Spring Project

Synthetic Data Generation (SDG) is an application that will allow Schwab to efficiently plan generation of test data for load and performance testing of its applications. SDG will be a true application development effort with a focus on customer (Schwab managers) efficiency and a complete end product based on specifications.

Project Description

Synthetic Data Generation is the ability to generate load data based on the characteristics provided in the user interface. On a periodic basis there is a need to generate data for load and performance testing of various applications within Schwab. This is necessary to understand the performance of the applications during peak load. The characteristics of the generated load can be similar to the input data or can be tuned to represent a certain load condition that is atypical. SDG will offer a user interface to define the amount of load to be generated and the type of load to be generated based on certain tuning parameters. This tool will accept generic file format and tuning parameters and generate the load accordingly.

Data Input

The Schwab team will provide the config file schema that will act as an input to the tool. This needs to specify various parameters like field name, field data type, field location, etc.

Graphical Display

The UI layout should be dynamic and be based on the input configuration file. The UI should be dynamically generated based on the input configuration file giving the ability for the user to tune the synthetic load based on any input feature. For every feature in the input, the UI should be able to define its properties such as

Range, mean, median, variance for numerical data

Various levels for categorical data and the distribution of each level

Application Development

Using the parameters set on the UI, the application should then generate the necessary load with the characteristics for the load provided as the input.

Project Approach and Technology

The project will be based on some of the technologies Charles Schwab has been using for product development. This list may be altered depending on the teams’ existing technology strengths. Technologies may include:

C#, JavaScript, and/or HTML5 may be used for the project development based on the team’s abilities and desires

REST for web services if required

SQL for the database

Python and other machine/Deep Learning tools if necessary for TMR project

Charles Schwab strives to develop using best practices while remaining agile. We use the SCRUM and Kanban methodologies and will run the senior project within a similar methodology used by our teams. One mentor will be selected from our development team to be the primary interface with the project team, but other members of our staff will be brought in from time to time to assist with the project.

Project Success

Success will be based on the following accomplishments:

Participating using a SCRUM methodology to understand the benefits of agile development.

Requirements (epics and user stories) will be provided to the team by the Charles Schwab staff. The team will successfully groom the user stories to provide initial estimates.

Breaking down the user stories into logical tasks and providing task estimation. This will be used to prioritize features within the semester’s time box.

Architectural and design documentation produced for data and user interface components. A variety of UML and white boarding documentation will be used.

Producing quality code (well organized, performant, documented)

Developing a test strategy for unit, component, and overall quality assurance.

Working application based on the initial requirements and negotiated prioritization completed and demonstrated to internal Charles Schwab staff.

What Charles Schwab Gains From the Senior Project

Charles Schwab is interested in engaging with the brightest students in top tier computer science programs. New projects have allowed us to open a number of positions for recent and upcoming graduates. We hope to establish strong ties with the best students during their senior year which could then lead to jobs opportunities when they graduate.

Students may be required to sign over IP to Sponsor when team is formed.

Predicting Part Failure & Spare Parts Planning

Siemens Healthineers develops innovations that support better patient outcomes with greater efficiencies, giving providers the confidence they need to meet the clinical, operational and financial challenges of a changing healthcare landscape. As a global leader in medical imaging, laboratory diagnostics, and healthcare information technology, we have a keen understanding of the entire patient care continuum—from prevention and early detection to diagnosis and treatment.

Molecular Imaging (MI) and Managed Logistics (ML)

Medical imaging helps to advance human health globally, supporting early and precise diagnosis, a specific, less invasive therapy and solid after care. Answering to clinical needs in radiology, oncology, neurology and cardiology, Siemens molecular imaging systems (e.g., PET and CT scanners) provide solutions to help clinicians more confidentially diagnose, treat, and monitor disease.

MI systems will require maintenance (both planned and unplanned) throughout their life and spare parts are often needed to resolve issues. This is where Managed Logistics comes in. ML provides logistical support to our customers. We help to deliver confidence by effectively managing the spare parts supply chain. In other words, ML’s mission is to get the right part to the right place at the right time.

Project Overview

The current forecasting models ML executes to plan for future spare part demand is based on time series modeling. Using historical order volumes and these models, ML is able to achieve high part availability while at the same time keeping inventory costs low. Time series models are excellent at forecasting overall trends but often fail to predict unanticipated spikes and troughs in demand. Being able to accurately predict individual part failures in deployed systems would be a significant game changer for the way we manage supply chain operations.

Just as we in ML continually seek improvement, so do the engineers who develop the MI systems. With the advent of the Internet of Things (IoT), Siemens is developing “smart parts” to proactively alert potential failures. This access to large amounts of data streams from the machines in our MI installed base present a great opportunity for ML to leverage this information. Are you ready to turn this opportunity into reality?

Project Scope

This project will be broken out into three distinct phases:

Phase I – Data Parsing of MI System Logs

MI has created a data lake that stores the system logs that contain all discrete events for each machine, which is referred to as a functional location. The logs essentially contain every action performed at the functional location (e.g., power on/off, system initialization, scan initiation, failure tests, etc.). As you can imagine, these logs can get very large for machines running 12+ hours a day. Furthermore, these logs are long strings of text that cannot be easily read by the human eye. Although it might be challenging, we anticipate this log data can be parsed and formatted into a structured format. All logs are captured from the same “family” of functional locations within the Molecular Imaging technology. This means that all logs generated will be in the same format and similar discrete events will be tagged uniformly. We are asking you to develop the logic/queries/algorithm(s) necessary to transform these logs into a more conventional data format. Since all logs have the same format, the logic will apply to all logs from each functional location. The tools and methodology you employ is up to you. But we will require the output to be delivered in a commonly accepted structured data format (e.g., CSV, XML).

Phase II – Part Failure Prediction with Machine Learning

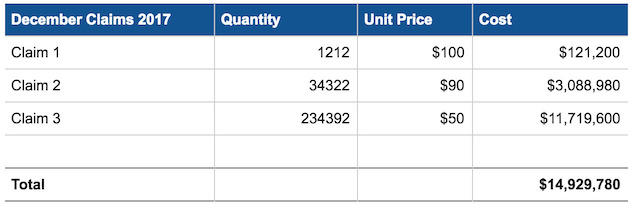

After the structured data set for the MI functional locations is created, the next step will be to integrate spare part consumption data for each functional location. The spare part consumption data is a small dataset that contains the date, part number, and quantity for spare parts ordered and installed at each functional location (example provided below). Combining these two datasets will enable us to analyze log data in relation to spare part consumption. We anticipate certain patterns will emerge in the MI log data that will help predict specific part failures.

Identifying all the potential combinations of system events and analyzing these patterns “by hand” is an arduous task to say the least. But there are several techniques in the field of machine learning that could yield highly effective prediction models. We are asking you to research and recommend potential machine learning techniques that could be implemented based on the dataset. After reviewing your recommendation, we would like you to implement the learning model with ~ 95% of the data set and apply that model to “predict” the part failures for the remaining data. In other words, we are asking you to build the prototype to predict the spare parts needed and evaluate the accuracy of the model. As with Phase I, we are flexible with the solutions used but we will require that all methods are clearly explained and documented. Recommended machine learning techniques to begin your research are Decision Trees (CART), Support Vector Machines (SVM), or Artificial Neural Networks (ANN) to name a few.

We at Siemens realize that as computer science students you might not have had a lot of exposure to data analysis and machine learning. But if you are a student that has taken analytics or data science courses we think this could be an excellent opportunity to combine your skills and talent to accomplish something very impactful. Because of the scope for Phase II, Siemens is committed to working closely with you to ensure that this project is solvable and successful.

Phase III – Reporting of Prediction Results

Based on the prediction models developed in Phase II, we are requesting that you develop a mechanism (e.g., dashboard, alert bulletin) that will help ML identify upcoming part failures. While the content of this tool is not defined at the moment, we envision that it will contain the spare part, functional location, and date associated with the impending predicted failure. In other words, show us the prediction results in a way that is easily interpreted.

Are you up for the challenge of developing this supply chain management game changing innovation?

Spare Part Consumption Data Sample

| Order Created | material_nr8 | quantity | FL |

|---|---|---|---|

| 12/1/17 12:11 AM | 10522041 | 1 | 400-010960 |

| 12/1/17 1:12 AM | 10412189 | 1 | 400-029235 |

| 12/1/17 12:06 AM | 10909856 | 1 | 400-028439 |

| 12/1/17 12:21 PM | 04361585 | 2 | 400-028440 |

| 12/1/17 4:26 PM | 05989228 | 1 | 400-028441 |

| 12/1/17 7:21 PM | 11296055 | 2 | 400-031821 |

| 12/1/17 9:56 PM | 07833192 | 1 | 400-024036 |

| 12/1/17 8:06 PM | 08423472 | 1 | 400-033380 |

| 12/2/17 1:21 AM | 10763443 | 1 | 400-014751 |

| 12/1/17 11:01 PM | 10412000 | 1 | 400-032146 |

Students will be required to sign Non-Disclosure Agreements and sign over IP to Siemens Healthineers when team is formed.

Facial and Emotional Recognition Monitor

Problem Statement

There are many jobs that require a person in a position of some power or authority to deal with people’s requests or make challenging decisions. In the fields of law, business, economics and others, it is often assumed in theories and lessons that all actors participating in the system are completely rational at all times – something that we as humans know is not always true. In fact, studies have shown that a person’s mood can have a huge influence over the decisions they make, even in the cases of huge decision-makers like business owners and judges.

There is a whole sub-field of economics called Behavioral Economics that studies the psychological, social and emotional factors that affect market prices. Furthermore, a well-known publication by the Proceedings of the National Academy of Sciences shows that the chance of a judge granting an inmate parole decreases from ~65% in the morning, to ~0% right before lunch, and back up to ~65% right after lunch. That’s a year of a person’s life in prison depending on if a judge is hungry or not! There are other more minor cases as well – sometimes we simply let our sour mood affect our dealings with our co-workers in a negative way. We would like to create an application that helps people to be aware of how their emotional state might affect their dealings with others – and if possible, uplift them.

Requirements

We would like to create a Universal Windows Platform (UWP) application that uses facial and emotional recognition software to plot a chart of the user’s mood throughout the day. The application would use the webcam already present in most people’s laptops to gather facial images for analysis (with the user’s permission, of course). The application will analyze the pictures it takes using facial and emotional recognition libraries, and store the resulting data. The application can then calculate an “average mood” statistic over a period of time, and compare new data against this to detect when the user’s mood is especially low or high for the current day and time. From that point there are a number of possibilities, such as displaying an animation or suggesting the user watch a funny cat video on YouTube. We would like to notify or uplift an aggravated user without causing them further aggravation!

Technology Preferences

The choice of technologies and libraries is mostly left to the team, but as Avanade is a Microsoft subsidiary we have a couple technology preferences:

Microsoft Windows development environment

Microsoft Azure for hosted services

Azure Cognitive Services emotion detection

Validated Crypto for Java Cryptography Architecture

Background

Cryptography is very important in today’s world. Improper or maliciously altered crypto implementations have been a big concern for the industry in recent years. To alleviate the risk, Cisco has been working with the National Institute of Standards and Technology (NIST) on finding ways to validate crypto implementations. The output of these efforts is the Automated Cryptographic Validation Protocol (ACVP). ACVP enables crypto implementations to interact with a server that provides crypto test vectors which the crypto implementation encrypts and sends back. The server can then check for correctness which would mean that the algorithms are implemented correctly. ACVP can be used for Federal Information Processing Standard (FIPS) validations of the cryptographic modules. Cisco has open-sourced an ACVP client that implementers can use to validate and certify their algorithm implementations against NIST’s or 3rd party servers.

One of the challenges of ACVP adoption and growth is that the client has to be customized to each specific crypto provider. Cisco has contributed a C-based ACVP client that integrates with OpenSSL. Concurrently, RedHat has contributed an ACVP client that integrates with NSS. The goal for this project is to create a new Java ACVP client that integrates with the Java Cryptography Architecture (JCA). In doing so, any Java crypto providers that implement the JCA interface could be validated using the new ACVP Java Client.

Description

In this project we want to introduce a Java ACVP client for the Java Cryptography Architecture. ACVP can be used to validate the integrity of Java-based crypto providers.

-

We want to extend the open-source ACVP client library to integrate and validate a single implementation (Sun or SunJCE) of the JCA. The updated ACVP client should be able to run the crypto algorithm implementations from these libraries against the NIST ACVP server test vectors to validate the crypto modules. Running the new Java ACVP client code in a web browser is encouraged, but not mandatory.

-

The existing ACVP client implementation must be ported to Java JDK 8. This includes server connectivity work to the existing ACVP server.

Required Student Skills

-

Students must have taken or be taking at least one course in security or cryptography.

-

Experience in Java programming and familiarity with JSON.

-

Familiarity with Git and GitHub.

-

Motivation to work on an interesting topic and try to make an impact.

Motivation

Students will be able to work on a new and interesting practical industry problem. Cryptography and security are hot topics today. NIST, Cisco and other companies have been trying to address the validated crypto issue for some time. Java Security is also one of the hottest topics in the industry today. Marrying the two subjects and planting the seed for standardized, validated crypto will allow the students to get familiarity with interesting topics and see where the industry is moving towards. They will also get to use common tools like Git and see how cryptography is implemented in the real world.

Since Cisco has been actively working on ACVP and validated security, this project will allow us to make the case for the industry to consider validating lightweight crypto in an automated fashion. With this project we will be able to prove that it is possible by integrating the ACVP library with at least one lightweight crypto library. We could also gain knowledge about potential changes to the ACVP protocol that enable its use for crypto validation in Java-specific environments.

Deliverables

-

A new fork of the open-source ACVP client should be created in the GitHub repository. The existing C-based client should be ported to Java JDK 8. A Git Pull request should be completed to deliver the changes into the public repo.

-

A new implementation of the Java ACVP client that integrates with and validates the Java Cryptography architecture.

-

A new, working main Java application should be included in this code demonstrating how the new ACVP Java client can be used in an application.

Reference Implementations for X.509 Certificate Generation and Revocation Status Checking

Background

One of the main pain points of Public Key Infrastructure (PKI) implementers is consistently verifying certificate status. Even though a lot of the functionality and command options are built into standard certificate libraries, there are no good native implementations that can be used by developers to check the revocation (CRL, ARL, OSCP, OCSP stapling). Additionally, there are no reference implementations that we are aware of that can be used to verify the CA transparency logs for a certificate. Thus, development teams that are inexperienced in Digital Certificates cannot enforce these checks easily.

Description

In this project the students will implement a reference implementation that will be used as a best practice for product teams within Cisco, and potentially the industry, who want to check the status and legitimate generation of a X.509 certificate. Revocation checks to focus on are OCSP, OCSP stapling, CRLs, ARLs and authorization and validation list (AVL). The reference implementation should be coded in C for the OpenSSL library and open-sourced as a GitHub project.

Long-lived certificates need to use revocation in the event of a compromise or an operational change, but short-lived certificates potentially don’t need the extra burden of revocation. There is no need to revoke a key that will expire in a few days anyway. Short-lived certificates seem to be gaining traction within the industry. We expect the students to investigate, research and perform an operational challenge (where things can go wrong) analysis of using revocation and CA transparency logs compared to short-lived certificates.

Required Student Skills

Students must have taken or are currently taking at least one course in security and have some familiarity with cryptography, PKI and X.509 Digital Certificates.

Experience in C programming.

Some familiarity with OpenSSL.

Familiarity with Git and GitHub.

Motivation to work on an interesting topic and an opportunity to make an impact.

Motivation

Students will be able to work on a practical industry problem. Cryptography and security are hot topics today. Cisco product teams have a need for this work, so the students will contribute to addressing a real industry need. They will also get to use common tools like Git, see how cryptography is implemented in the real world and get exposure to OpenSSL, an industry standard security library.

Since Cisco has a need for a reference implementation of certificate checks, this work will be evangelized and used within the company. We will also evaluate our stance on short-lived certificates based on the results of the analysis from the students.

Deliverables

Open-source reference implementation that performs the CA transparency and revocation checks should be published in GitHub.

Revocation, CA transparency challenges vs short-lived certificates analysis document.

Vehicle Network Solution for ecoPRT

Background

Personal rapid transit (PRT) is a maturing technology that could offer an alternative to traditional transit systems like bus and rail. EcoPRT (economical PRT) is an ultra-light weight and low-cost transit system with autonomous vehicles that carry one or two passengers at a time. The system has dedicated guideways where the vehicles are routed directly to their destination without stops. The advantages include:

Dual mode – existing roadways and pathways can be used for low install cost in addition to elevated roadways at a cost of $1 million per mile

A transit system that is much quicker and less costly than either bus and light rail

A smaller overall footprint and less impact on the surrounding environment so guideway can go almost anywhere.

The research endeavor, ecoPRT, is investigating the use of small, ultra-light weight, automated vehicles as a low cost, energy efficient system for moving people around a city. To date, a full-sized prototype vehicle and associated test track have been built.

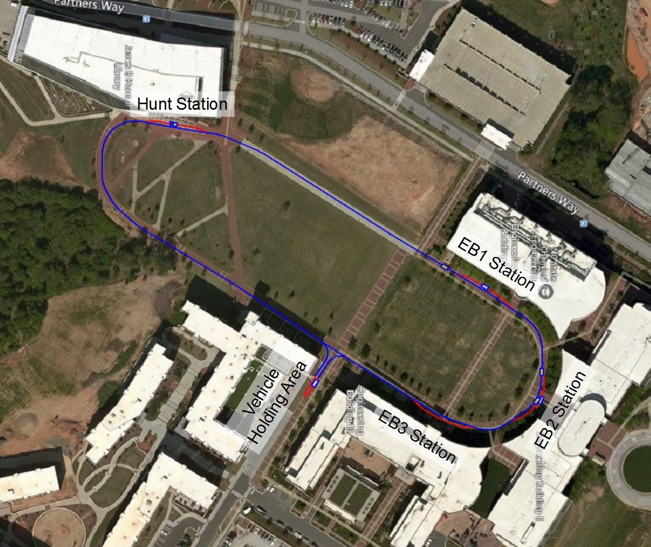

Oval Project

As a demonstration site, we are proposing running a fleet of 5 or more vehicles on the walkway connecting the engineering buildings to the Hunt library. (See figure). For each building there will be one station (5 overall) stopping near the entrances of EB1, EB2, EB3, the Hunt Library, and Oval Dining. Vehicles will be waiting at each location or can be called up through an app on a smartphone.

What is the specific problem or issue

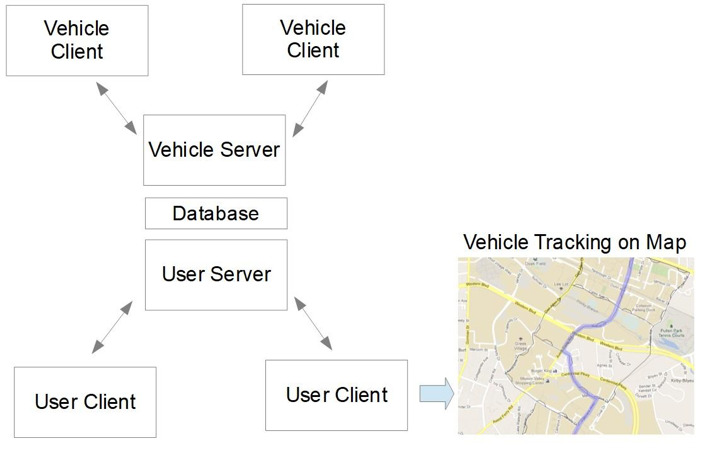

With the aim of running a multi-vehicle live pilot test on the Oval, the goal is to create a Vehicle Network Controller (VNC) to guide the vehicles and provide interaction to users. Currently, the architecture looks like the following where the vehicles communicate to a vehicle server, and vehicle data is saved into a database. On the user side, the user clients (web or mobile based) interact with a user server that shares the same database. The user server should provide some sort of tiered security access where nominal users would allowed to select destinations and view their vehicle on a map, but administrative users could see key vehicle and network statistics.

Authentication would require at the least “nominal users” and administrators. Some of the tasks the nominal users could do include calling a car to a certain location, finding out where available cars are currently located, or reserving a vehicle for sometime in the future. Administrative tasks could include: viewing all cars, overriding vehicle routes as needed, rerouting vehicles, viewing diagnostic data, receiving emergency statuses from the vehicles.

In later stages of development, algorithms could be developed that efficiently route vehicles from one location to another based on overall demand and vehicle locations of the network.

Simulation of the network will be accomplished by integrating an existing vehicle simulation client into the VNC network. This will be the main method of testing and verification before connecting to real vehicles.

Currently, a team of NCSU graduate students is working on all layers of this VNC. The team of students from CSC senior design will be integrated with the graduate students to work on all aspects as a whole. In effect, everyone will be working as a team on the project. Mentors will be meeting with the CSC Senior Design team weekly. Architecturally, there is a need to build out the user client and user server architecture along with demonstrating VNC with multiple (simulated) vehicles attached to it.

Project Team Specification

Desired Competencies:

Server/client programming experience. Interest in Angular and Node js framework, C++ socket programming as well.

Server architecture experience. Interest in database development. MySQL, PostgresSQL options

Interest in front-end user design

Interest in Autonomous Vehicles / Technologies.

Retirement Plan Fund Scorecard

Problem Statement

Plan Sponsors are individuals responsible for selecting the lineup of mutual funds offered by a retirement plan to participants of that plan. Sponsors have a fiduciary duty to select a balanced and diversified array of funds to make sure participants can effectively save for retirement. There are two common issues that Sponsors run up against which can expose their company to risk of litigation:

Plan Sponsors do not actively review fund lineups to insure that the funds are performing on par with specific benchmarks.

Plan Sponsors include mutual funds in the lineup because their company has an interest in the funds succeeding

- http://www.latimes.com/business/hiltzik/la-fi-hiltzik-ge-401k-20171017-story.html

Project Description

Build a web application that will display to Plan Sponsors the “Score” of their mutual fund line-ups. The app will:

Take as input an array of plans(mutual funds and investment options) offered by the plan and use current and historical performance data to compare the plans to specific benchmarks like the DJIA.

The application will “score” individual funds and roll up that information into a meaningful representation of the plan’s line up.

- NCSU team will be asked to develop scoring algorithm with help from Fidelity team.

The application will factor in fund fees, risk tolerance and potential Sponsor conflicts of interest.

The application will provide breakdowns based on asset class and sector.

Some info to get started:

Preferred Student Skills

- Angular / CSS / HTML

- Java / Spring

- Rest

- JSON

- Agile/Scrum

Elastic Stack Visualization and Analysis Application for StorageGRID Webscale

Background

StorageGRID Webscale is NetApp’s software-defined object storage solution for web applications and rich-content data repositories. It uses a distributed shared-nothing architecture, centered around the open-source Cassandra NoSQL database, to achieve massive scalability and geographic redundancy, while presenting a single global object namespace to all clients.

Supporting a scale-out distributed system like StorageGRID Webscale presents a unique set of challenges. If something goes wrong, the root cause analysis may require piecing together clues scattered amongst log files on dozens of individual nodes. Due to the complexity involved, a lot of information is recorded for every transaction, but this leads to an overwhelming amount of log data – often, identifying what information is relevant is the hardest part of solving a problem.

Project Goals

Implement a log ingest, indexing, and analysis tool for StorageGRID Webscale based on Elastic Stack (Elasticsearch/Logstash/Kibana).

The tool should have the ability to ingest and index StorageGRID Webscale log file bundles retrieved from multiple customer sites.

The tool should provide an StorageGRID-aware data analysis UI that makes it easy to answer questions such as the following:

Show me the transactional history of a specific object stored in the grid (when and where was it ingested, relocated, replicated, or redundancy-reduced, overwritten, et cetera)

I’ve found this curious-looking log message. How often do similar logs occur at other customer sites?

Oct 20 07:59:07 RZ2-S1 ADE: |21317532 80800 0000000000 ???? ???? 2017-10-20T07:59:07.026927| WARNING 0120 ????: Failed to execute cql: SELECT nameless_objects, s3_buckets FROM storagegrid.object_counts - Cassandra Driver Error(Read timeout):‘Operation timed out - received only 1 responses.’

Help me visualize the aggregate transactional history of objects in a specific customer grid along a variety of axes, so I can determine whether a few objects known to have suffered loss or damage are all members of a well-defined object family.

Stretch goal: These two support cases from different customer sites have similar symptoms. Are there any patterns common to the logs from those sites but not commonly found elsewhere that may point to root cause?

Deliverables

- Packaged Elastic Stack application suitable for installation into one or more Elastic Stack instances maintained by NetApp for StorageGRID support use.

Development Environment

On-premise or in-cloud Elastic Stack instance

Elastic Stack consists of Elasticsearch, Logstash, and Kibana

Elasticsearch is implemented in Java and provides a JSON-over-HTTP API; clients are available in multiple languages including Java and Python; it is the back-end fulltext search engine you will use for this project.

Logstash uses JSON-formatted configuration files to define how incoming logs should be processed and forwarded to Elasticsearch.

Kibana is a browser-based web interface for searching and visualizing logs, written in JavaScript.

NetApp will work with the student development team to determine whether a locally hosted or AWS-based Elastic Stack instance is the preferred development environment. Should AWS be selected, NetApp will cover reasonable costs associated with the development instance.

Exemplar StorageGRID log bundles provided by NetApp

Helping Hands Training Portal

About The Collaborative

The NC Collaborative for Children, Youth & Families (“The Collaborative”) is a non-profit group of cross-system agencies, families, and youth who educate communities about children’s issues, support the recruitment of family and youth leaders to provide input into policy and training, and organize support to local systems of care groups.

The Collaborative has been working to improve their website and use of social media to promote understanding of children’s issues. They need assistance with developing online training and ways to deliver their message to communities about children’s needs.

Social workers throughout the state come to this website to become trained and certified to be able to serve as a mental health consultant with children and families (they can also go to in-person workshops to be trained if they prefer). Employers pay for the training and, when training is completed, a box is checked off and the trainer is certified. Currently, there is no assessment or monitoring involved.

Project Description

The Collaborative would like to build more into their website that allows for assessment of a person who is trained (via something like a quiz) and then some sort of open-ended feedback on how participants use their trainings (this could be in the form of sharing of best practices, some sort of chat room, etc.). Eventually, the Collaborative would like to offer a pilot study to see if these additions improve the quality of service in the field.

The Collaborative would also like to offer additional trainings (other than the major one described above) via their website, with assessment, and perhaps a dashboard of some sort for participants to keep track of training hours and assessments (including the major one, above). Perhaps potential employers could also come to this site to view dashboards of potential employees or employees could point to dashboards from their resumes (requirements to be determined!).

Last semester, a Senior Design team created a framework for a training portal website where:

Users can register and log in/out.

Administrators can add/edit training courses, quiz pages, and timeline events.

More features are needed to complete this system.

Technologies used included: NodeJS, Sequelize, Express, Handlebars, MySQL, Bootstrap, and jQuery. This semester’s team will be given access to last semester’s codebase and documentation.

Vulnerability Management Residual Risk Calculator

BB&T Corporation, headquartered in Winston-Salem, North Carolina, and its subsidiaries offer full-service commercial and retail banking and additional financial services such as insurance, investments, retail brokerage, corporate finance, payment services, international banking, leasing and trust. BB&T Corporation is an S&P 500 Company listed on the New York Stock Exchange under the trade symbol BBT. More information about BB&T can be obtained at www.bbt.com.

BB&T has been enhancing its cyber security program and would like NC State students to help BB&T build a web application that calculates and reports inherent and residual risk for vulnerabilities in the environment.

The student team will create a web application that can be used to calculate a custom vulnerability risk score for BB&T utilizing industry frameworks and inputs as base criteria. BB&T would have custom risk inputs to calculate a residual vulnerability risk score modeled after CVSS (Common Vulnerability Scoring System). This project should incorporate the development of a web front- and back-end that utilizes a user definable risk ruleset. This will incorporate API development to automate collection of data from open and closed vulnerability frameworks such as NIST, CVSS, and Qualys.

Desired Outcomes

The development of an algorithm that can calculate and assign residual risk based on both programmatic and manual inputs.

The development of a platform-independent data store, which can be NoSQL or SQL-based.

A working proof of concept that can be leveraged by BB&T’s Vulnerability Management Team.

Implement scheduled pulls of vulnerability data from frameworks (i.e. https://nvd.nist.gov/vuln/data-feeds)

Platform Requests

- Python is the preferred development language

This independent project will provide BB&T with the ability to apply proactive protections on perimeter controls while helping refine prioritization efforts pertaining to new vulnerabilities.

Students will be required to sign over IP to Sponsor when team is formed.

PDF Review Tool

In 2014, the Developer Liberation Front began work on an open source tool called pdf-reviewer that made it easier for researchers to collaborate on providing feedback on each others’ writing. In short, the cloud-based tool takes a PDF file (example) with comments on it, then creates a GitHub issue for each comment in that PDF file (example). The tool ensures that the author can address each comment/issue systematically, that each comment/issue can be discussed by the author and reviewer, and that every issue can be linked to a change to the paper. In 2017, due to changes in the underlying cloud platform, it no longer became feasible to run the tool.

We are requesting a senior design team revamp our tool in our existing GitHub repository, reusing our existing codebase to the extent possible. For maintainability reasons, we request that development be done in Java. Completion of two basic development tasks are required:

Choose a new cloud platform that suits the needs of the tool. Criteria for the hosting environment include:

High likelihood of continued compatibility for the next decade

Ease of porting between multiple platforms, such as EC2, GCP, RHCloud, VCL, or even run as a program on desktop machine.

Free or low deployment and runtime cost, if only for educational environments

Enable the basic ability to turn a PDF’s comments into GitHub issues, as described above.

Furthermore, we request a variety of advanced features that the past tool also supported:

Archiving submitted PDFs into the reviews/ folder of the GitHub repository

Embedding of PDF snippets as images into issues, so that authors can see the context of the comment

Adding issue numbers into the PDF comments, and returning this modified PDF to the user

PDFs that are partially reviewed may be uploaded a second time once the reviews are complete, without overwriting old comments

Issue tags can be indicated in PDF comments using double square brackets (e.g. “[[must-fix]]”) and are automatically transferred to the associated issue. Shorthand is also supported (a “[[mf]]” comment tags the associated issue with “must-fix”, etc)

Workflow is very important- the process must be smooth and not be an added burden on the users (whether the writer or the reviewers)

Finally, more advanced features that the past tool did not support are also welcome. For instance:

Receive and process PDFs via email attachments, rather than a web interface

Archive submitted PDFs to any branch

Self-submitted reviews (as opposed to requesting a review from yourself, then uploading your own review)

Derived Personal Identity Verification (PIV) Authentication

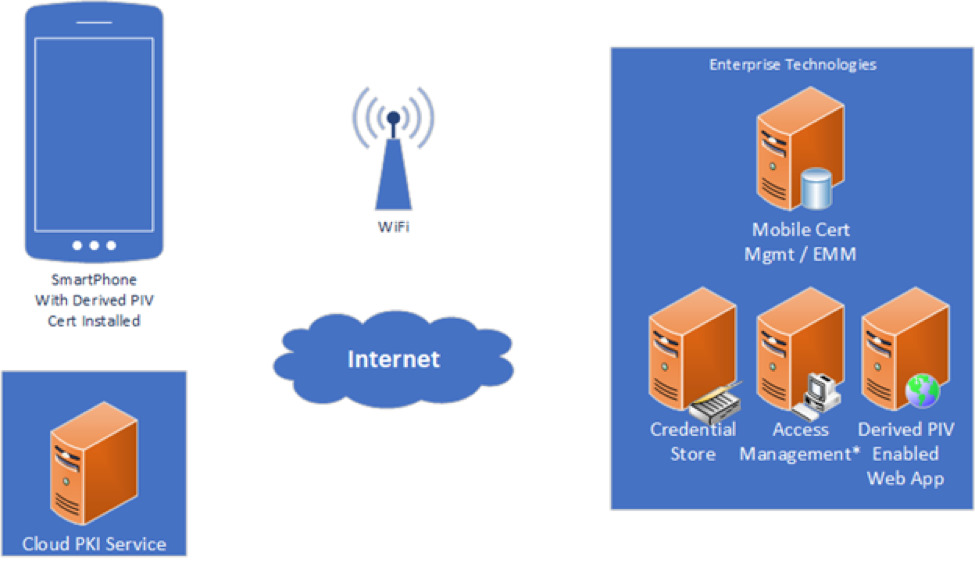

The goal of this project is to determine the feasibility of using a mobile device as a PKI (Public Key Infrastructure) Smart Card replacement. Merck is looking into leveraging PKI certificates as a multi-factor solution to provide a high-level assurance of identity. This PKI authentication could potentially eliminate the need for a known password as a method of authentication. Eliminating dependency on password for authentication will help reduce the risk of credential harvesting based attacks.

Rather than leveraging Smart Cards, which would require new investments in hardware and are not the most user-friendly experience, can we leverage the proliferation of mobile devices to improve the overall experience? The solution should attempt to answer this question with consideration for the following high-level requirements:

Cloud PKI Vendor - To reduce the overhead and maintenance of the solution, we would like to work with a PKI as a service vendor who issues cloud based certificates as part of the solution.

Credential Store - The solution should leverage a credential store for identity lifecycle management. Active Directory is Merck’s solution for this but others can be utilized if needed.

Certificate Management/EMM - The solution should have a mechanism for managing the certificates on the mobile device. Merck’s Enterprise Solution for this is MobileIron, but an alternative Enterprise Mobility Management (EMM) solution can be leveraged if more convenient. (Note: If the Cloud PKI Vendor in #1 can deliver these certificates to the target mobile device without EMM, that is an option for this project in lieu of this requirement.)

Derived PKI Authentication - The working prototype should authenticate a user, without a password, while leveraging a certificate stored on an android or iPhone device, to a single target web application. Given that Merck is largely an iOS shop, iOS is preferred but not required.

Web Application – The web application is unspecified, but ideally will be related to some aspect of life sciences. This will not be developed by the project team, but more likely an open source application.

(Optional) Access Management Platform – If needed, an access management platform can be leveraged to simplify the ability of the web application to accept the authenticated user. PingAccess would be our preferred choice here, but we’re open to alternatives.

Internet Access – Accessing this application from the internet is not a requirement. Local network access is sufficient for proving the concept.

Software Licensing - If any licensing is required for selected technologies, Merck will acquire and distribute them where appropriate.

The concept architecture (Figure 1) is merely for illustration and should not be construed as an attempt at system design.

The emphasis for this project is proving whether NIST’s 1800-12 proposed standard (link below) for derived PIV authentication is viable outside of a Federal regulatory environment. Evaluation of requirements, and their relative priority, should be weighed against this principle constraint. Success in answering this question will be with a working demo utilizing the agreed technologies.

Resources

NIST SP 1800-12 Draft - https://nccoe.nist.gov/publication/1800-12/VolB/index.html

- Cloud Identity Summit Presentation from NCCOE on the NIST Project

Preferred Technologies / Frameworks / Languages

- Credential Store: Active Directory

- Certificate Management/EMM: MobileIron

- Mobile Device: iOS

- Access Management Platform: PingAccess