Projects – Fall 2010


Visualization of Network Topology

Background

AT&T Business Services (ABS) uses a combination of vendor tools and AT&T Labs-developed tools to manage customer networks. One of the major components of the AT&T platform is EMC SMARTS InCharge. SMARTS is used in a fault management environment to detect outages on a customer’s network. SMARTS builds a topology of a customer’s network and then correlates outages based on that topology. So, if a large customer datacenter with hundreds of devices loses power, AT&T receives a network alarm on a single device, with the remaining devices listed as an “impact” of the outage. This reduces the number of events seen by the IT staff.
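To make the idea of topology-based correlation concrete, here is a minimal sketch. It is not how SMARTS works internally; it assumes a simple tree-shaped topology in which every device has at most one upstream device, and the function name and the device names are hypothetical.

```python
from typing import Dict, List, Optional, Set


def correlate_outage(upstream: Dict[str, Optional[str]],
                     down: Set[str]) -> Dict[str, List[str]]:
    """Group unreachable devices under the topmost failed device above them.

    `upstream` maps each device to the device it depends on (None for the root).
    Returns {root_cause_device: [impacted devices]}.
    Assumption: the topology is a tree, so the upstream walk always terminates.
    """
    result: Dict[str, List[str]] = {}
    for device in sorted(down):
        # Walk toward the root, remembering the highest device that is also down.
        cause = device
        parent = upstream.get(device)
        while parent is not None:
            if parent in down:
                cause = parent
            parent = upstream.get(parent)
        impacts = result.setdefault(cause, [])
        if device != cause:
            impacts.append(device)
    return result


# Hypothetical topology: two servers behind one datacenter switch.
topology = {
    "core-rtr": None,
    "dc-sw1": "core-rtr",
    "srv1": "dc-sw1",
    "srv2": "dc-sw1",
    "branch-rtr": "core-rtr",
}
alarms = correlate_outage(topology, {"dc-sw1", "srv1", "srv2"})
```

With this input, the three unreachable devices collapse into a single alarm on `dc-sw1`, with `srv1` and `srv2` listed as its impact.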

Project Description

SMARTS provides a topology display tool, but it does not meet the specific needs of the engineers working for AT&T Business Services. When working customer issues, engineers generally need a small snapshot of a specific location on a customer’s network, and they need to be able to retrieve and use it easily.

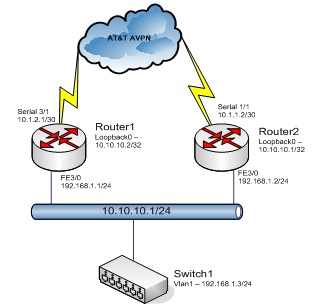

The project AT&T is requesting is to use the SMARTS topology to build a web-based network topology diagram. Input will be a sample topology of a Fortune 500 company, provided as a text file. This topology information should be stored in a database. A web front-end will then be created to allow users to input a device hostname and how many “hops” need to be displayed. The tool will then provide a network diagram based on the provided information. The diagram should contain device names, IP addresses, and other critical information about the devices being displayed. This information will all be provided in the topology data. The accompanying diagram, drawn in Visio, is a good example of the output of the tool.
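The hostname-plus-hops lookup described above can be read as a breadth-first search over the stored topology. The sketch below is only an illustration: it assumes the links have already been loaded from the database into an adjacency dictionary, and the function name and device names are hypothetical.

```python
from collections import deque
from typing import Dict, List


def devices_within_hops(links: Dict[str, List[str]],
                        start: str,
                        max_hops: int) -> Dict[str, int]:
    """Return {hostname: hop_count} for every device within max_hops of start."""
    hops = {start: 0}
    queue = deque([start])
    while queue:
        device = queue.popleft()
        if hops[device] == max_hops:
            continue  # do not expand beyond the requested radius
        for neighbor in links.get(device, []):
            if neighbor not in hops:
                hops[neighbor] = hops[device] + 1
                queue.append(neighbor)
    return hops


# Hypothetical chain of four devices: a - b - c - d
sample_links = {"a": ["b"], "b": ["a", "c"], "c": ["b", "d"], "d": ["c"]}
nearby = devices_within_hops(sample_links, "a", 2)
```

The returned hop counts could also drive the layout of the diagram, for example by placing devices on rings around the requested hostname.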

Additionally, the database that is built from the topology information will provide additional benefits to AT&T in the future, and could lead to other NC State projects. Easy to use topology information can be used to create network audits, design recommendations, and other services to customers.

Benefit to NC State Students

Many of the tools used by AT&T for network management are industry standard tools. EMC SMARTS InCharge is a widely used network management tool and will benefit students interested in the IT industry. Additionally, this project will need experience with MySQL (or another database system), Perl, PHP, graphical rendering tools (PHP drawing libraries as an example) and other web programming languages.

Benefit to AT&T

The tool would be used to assist IT engineers in troubleshooting customer issues. Currently, when engineers need a topology diagram, they use pen and paper to recreate a site diagram by hand. This tool will save valuable time and will provide a level of accuracy and detail that is currently not available.

Blackberry Server Interface

Duke Energy financial systems are critical to the company’s operation and require 24/7 maintenance. As a result, the support team must be able to handle issues remotely, at a moment’s notice. The students will create a mobile application that will be used to resolve support-related issues remotely. The platform for the mobile application will be a Blackberry. The initial version of the application was developed by an NCSU team in the Spring of 2010. This version supports Windows servers only and includes the following functionality: the ability to start/stop Windows services; the ability to retrieve server specifications (such as disk space, memory, CPU, uptime, etc.); and the ability to start/stop scheduled tasks. The Fall project team will enhance this functionality, fix defects, and extend the core functionality to cover the Unix environment as well. The Blackberry SDK, which provides emulators, can be used for UI development, negating the need for a physical device. Students are encouraged to reengineer the application as necessary to best incorporate the new functionality. Code and documentation from the Spring team will be provided.

iSER Performance Evaluation Project Continuation

NetApp is a leading vendor of hardware and software to help customers store, manage, and protect data. NetApp clients have very high performance needs. They typically deal with petabytes of data and high-load systems. NetApp is constantly looking for ways to improve the performance of its systems. Stated in simplest terms, this project is part of a continuing, year-long effort to evaluate a set of hardware and software technologies which promise to provide a more efficient mechanism to transfer data across a network. NetApp will use our analysis to determine the value added for different applications, including cloud computing and virtualization.

Below is a list of terms useful in understanding project goals:

SCSI: "Small Computer System Interface." This is an interface protocol used to access hard disk drives that has been around for many years.

iSCSI: SCSI over TCP/IP. This protocol is newer, and allows a computer to access SCSI devices located across a Storage Area Network (SAN). This type of application typically has high bandwidth and low latency performance requirements. iSCSI functions by opening a TCP connection over the network and exchanging commands and data. The computer that has the physical SCSI disk drives, and that accepts incoming iSCSI connections, is termed the "target". The computer that connects to the target in order to access SCSI devices is known as the "initiator".

iSCSI with TOE: iSCSI with "TCP Offload Engine". Typically TCP is implemented in the kernel, so the CPU carries its overhead. A home broadband connection might have a bandwidth of 30 Mbps, which is considered slow by industry standards. At such speeds, the kernel implementation of TCP does not noticeably affect network performance. This project, however, deals with 10 Gbps speeds, more than 300 times faster than home use. In this kind of environment, the CPU's protocol handling adds a significant burden and degrades throughput. A newer technology known as "TCP Offload" was developed to take the TCP protocol and implement it in the hardware of the network adapter, thus relieving the CPU of this load.

DMA: "Direct Memory Access." This is a hardware mechanism in modern computers that allows some I/O devices to directly transfer blocks of data into main memory without the intervention of the CPU, thus freeing CPU cycles. This technology has been around for many years.

RDMA: "Remote Direct Memory Access." This technology takes DMA a step further, and allows a remote device/computer to perform a DMA transfer across a network while avoiding constant CPU intervention on either end of the transfer. This is accomplished using certain protocols to negotiate DMA transfers, as well as hardware support to actually perform the data transfer using existing DMA hardware.

iSER: "iSCSI Extensions for RDMA." This technology allows an iSCSI target/initiator pair to use an RDMA protocol to transfer data over the network without involving the CPUs of the nodes. This relieves the CPU from copying large amounts of data from the network adapter's I/O buffers to main memory, saving even more CPU cycles than TOE alone. Also, since data does not remain in the network adapter's buffer for long, these buffers can be made significantly smaller than is necessary when using just TOE, decreasing costs and increasing scalability.

TOE: "TCP Offload Engine". See "iSCSI with TOE."

Fall 2010 Project Statement

- Using the configuration, setup information, and results documented by the Spring 2010 team, reproduce baseline performance runs to confirm that results are consistent with the previous team’s results.

- Incorporate multiple initiator target pairs into the configuration, and integrate the Cisco Nexus 5000 switch. Run the performance benchmark tests and compare to the baseline results, providing analysis of the results.

- Configure the system to leverage multiple cores and run baseline performance runs, providing analysis of the results.

Available project resources

Four Dell T3400 computers, each with:

- Quad Core Intel Processor with 1333 MHz FSB

- 8GB RAM

- Operating system: CentOS 5.3 (64-bit)

- Chelsio 10G optical network card, in a PCI-E x8 slot with Fiber Optic Cabling

Currently these four T3400s are assigned to two pairs, each pair containing a “target” and an “initiator”. The computers have been labeled as such. The Chelsio cards on each pair of computers are directly connected via fiber cable.

Two Dell PowerEdge 2950 servers, each with:

- Quad Core Intel Xeon E5405 with 2x6MB Cache, 2.0 GHz, 1333 MHz FSB

- 8GB RAM

- Operating system: Linux RHEL 5.3

One Cisco Nexus 5030 10Gbit Ethernet Switch

Two Dell D380 computers with NC State Realm Linux installations are available for team use. These are not intended as test bed machines.

Using Business Analytics and Social Media Data to Get Better Answers Faster

When you hear the words "Facebook," "MySpace," or "Twitter" in a conversation, the mental images that are conjured up are most likely vacation pictures, witty status updates, or weekend party planning. While it's true that social media networks began as rather unplanned, unstructured, and virally growing entities, many businesses are now seeing business value in the enormous volume of data that's collected, and more importantly, the true insights that can be gleaned from analyzing that data. If a theme park opens a new ride, a business can wait weeks, months, or even years for a newspaper review or a Fodor's Travel Guide to point out the pros and cons from the perspective of a single writer. As an alternative, a business could tap the social media datastream and analyze the daily social media traffic, which contains feedback from hundreds or thousands of real-world critics. The popularity of social media outlets has generated the raw data that feeds this process, but recent advances in unstructured text mining, sentiment analysis, and interactive node-link visualization indicate that the real and complete value still lies within that data. The goal of this project is to use SAS Business Analytics, coupled with publicly available social media APIs, to build a computer system that allows questions or problems to be framed and the results analyzed in an illuminating way. Will we come up with a better Amazon recommendation engine? Better suggestions for "Facebook friends you may know?" A better algorithm for filling your Netflix queue? We don't yet know, but we do know that the answers are in the data, and Business Analytics tools are well suited to unlocking those answers!

Secure Code Execution in a Compromised OS

Background

Modern computing systems are built with a large Trusted Computing Base (TCB) including hypervisors and operating systems. The TCB provides security and protection services for both data and processes within a computer system. As a result, if any portion of the TCB is compromised, then the security for a system is also compromised and secrets input to the machine are no longer protected. Given the size and complexity of the TCB in modern computers, it is very difficult to protect the TCB from attack and ultimately provide protections to the entire computer system.

The impact of any vulnerability found within the TCB affects the entire system and anything that depends upon it. Examples of the impacts can be as simple as disclosure of financial information or as complex as power outage due to failure of a control system. Much of the world we live in today is enabled by computer systems and those systems rely upon the TCB for protection of their critical processes and data.

Project Statement

This project will seek to create software that provides a critical function with high security assurances by leveraging the features of Intel's Trusted Execution Technology (TXT) and Trusted Platform Modules (TPMs). In particular, it involves developing a dynamic software environment with a very small TCB. While this software is not intended to replace conventional computing systems, it will need to independently implement some of the same functions. One such function is basic input from the user. The goal of this project is to develop a secure software environment capable of performing secure input from the user and encrypting the data for later use. The data input from the user will be protected even if the operating system or other software is compromised. Software will be developed in C under Ubuntu.

Specifically, the project will involve developing a system that does the following:

- Suspends execution of the computer’s operating system and turns control over to a trusted module

- Unseals a secret using the TPM only if the correct trusted module is loaded

- Blinks the keyboard LEDs with a specific pattern

- Writes a keyboard driver to read the keystrokes from the user directly with the trusted module

- Encrypts or hashes an input password and nonce

- Resumes execution of the operating system

- Integrates the secure system with a web service

- Develops a secure protocol to interact with the web service

Code for suspending and resuming the operating system and passing control to the module will be provided although it may require some small modification.
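The step of hashing a password together with a nonce can be illustrated with a short sketch. The project itself is to be written in C inside the trusted module; Python is used here only to show the idea. The choice of HMAC-SHA256 and the function names are assumptions for illustration, not part of the project specification.

```python
import hashlib
import hmac
import secrets


def new_nonce(num_bytes: int = 16) -> bytes:
    """Generate a fresh random nonce (in practice the web service would send it)."""
    return secrets.token_bytes(num_bytes)


def password_response(password: str, nonce: bytes) -> str:
    """HMAC-SHA256 keyed by the password over the nonce, hex-encoded."""
    return hmac.new(password.encode("utf-8"), nonce, hashlib.sha256).hexdigest()


def verify(password: str, nonce: bytes, response: str) -> bool:
    """Recompute the response and compare in constant time."""
    return hmac.compare_digest(password_response(password, nonce), response)


nonce = new_nonce()
response = password_response("correct horse", nonce)
```

Because the nonce changes on every exchange, a captured response cannot simply be replayed later, which is one reason to combine a nonce with the password before it leaves the trusted module.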

Project Deliverables

- Source code and demonstration

- Technical presentation

- Written paper (optional academic paper for submission to a workshop)

Benefit to Students

Students will learn a great deal about the details of modern x86 architectures, developing low-level code, computer software security concepts, and current research challenges in the field of security. Of particular benefit, students will learn how to use hardware-enabled security features and leverage the TPM. Both of these technologies are anticipated to provide the basis for many trusted computing environments and other security applications in the coming years.

Suggested Team Qualifications

- Interest in computer security (basic knowledge is beneficial)

- Basic background in operating systems

- Experience with Assembly and C programming

- Basic understanding of computer architectures

Suggested Background

- CSC 405 Introduction to Computer Security or CSC 474 Information Systems Security

- Courses or Interest in Operating Systems or Computer Architecture and Multiprocessors

About APL

The Applied Physics Laboratory (APL) is a not-for-profit center for engineering, research, and development. Located north of Washington, DC, APL is a division of one of the world's premier research universities, The Johns Hopkins University (JHU). APL solves complex research, engineering, and analytical problems that present critical challenges to our nation. That's how we decide what work we will pursue, and it's how we've chosen to benchmark our success. Our sponsors include most of the nation's pivotal government agencies. The expertise we bring includes highly qualified and technically diverse teams with hands-on operational knowledge of the military and security environments.

Project requires a Non-Disclosure Agreement to be signed

Bus Tracking Android App

Project Description

TransLoc was formed by NCSU students with the goal of revolutionizing campus transit. Today, universities all over the country use the technology first used here on the Wolfline.

Not content with the limitations inherent in the mobile web version of our system, last Spring we released a widely popular iPhone app to let students track their buses on the go. Android devices, for their part, keep proliferating at a blistering pace so naturally the top question we now get is "where is the Android app?" This is where you come in.

We need an intuitive, easy to use app that will allow riders to locate their bus anywhere from the convenience of their Android smart phone. Much like our iPhone app, the app will allow riders at any of our tracked transit agencies to

- track any number of routes they choose on a map that features live moving buses, stop markers, and our signature interleaving route patterns,

- click on stop markers to see their names and which routes serve them,

- see a list of all stops served by a route and which ones will be serviced next by a bus,

- locate themselves on the map, and

- keep up with the latest announcements that impact their trip.

In short, we'll give you the data feeds, and you take it from there. But to not just leave it at that, you will be asked to design and implement further intuitive and clever enhancing features that increase the utility of the app. Make this app a truly indispensable app; this is your chance to improve the daily lives of thousands of your peers.

We will be asking you to follow Android interface design paradigms to create an aesthetically pleasing and intuitive app that meets Android user experience expectations.

Finally, as you will come to know, the mobile app world is fast paced. To give you a feel for it, as part of this project you will release the app to the Android Market once a stable base version is reached, and you will solicit feedback from users to drive the direction of development. Not many projects let you get your work in front of real users while you're still working on it, so don't pass up this opportunity!

Instant Replay for Bus Tracking

Project Description

TransLoc was formed by NCSU students with the goal of revolutionizing campus transit. Today, universities all over the country use the technology first used here on the Wolfline.

Most riders are familiar with our live moving map at ncsu.transloc.com that lets riders spot the current location of their bus. What many don't realize is that we record and store the current location of all buses at all times. This data is used by those who run transit systems to look back and review any problems, such as addressing rider complaints and verifying why a bus may have departed late.

Therefore, we need a tool to replay past events much like you can with a DVD. In short, picture our live map that instead lets you go back and replay what happened at any given time in the past (including 10 seconds ago!) in real time, 2x, 5x, 10x, etc., forwards and back, with the ability to jump to any time. Furthermore, as with our live map, users will still have the ability to limit their view to just the routes they're interested in, or even just a bus.

In addition, the map will be augmented with extra information, such as how on time the buses were running (did they just leave a stop late or early), how far apart were buses on the same routes (e.g. 14 minutes apart), as well as many other helpful details.

Part of our success has been because we collect bus locations faster than anyone else (up to once a second per bus). This is powerful because it gives riders the most up-to-date information possible. However, this also means this tool will need to dig through massive amounts of data points. The challenge will be in creating an easy-to-use, intuitive interface that is both flexible and highly responsive despite the large quantity of data points to parse.
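One way to keep lookups responsive over a large number of recorded fixes is to keep each bus's fixes sorted by time and binary-search them. The sketch below is an illustration under that assumption; the `BusTrack` class, the tuple layout, and the sample coordinates are hypothetical and do not describe TransLoc's actual data feeds.

```python
from bisect import bisect_right
from typing import List, Optional, Tuple

Fix = Tuple[float, float, float]  # (unix_time, latitude, longitude)


class BusTrack:
    """Recorded fixes for one bus, kept sorted by time for fast lookups."""

    def __init__(self, fixes: List[Fix]) -> None:
        self.fixes = sorted(fixes)
        self.times = [fix[0] for fix in self.fixes]

    def position_at(self, when: float) -> Optional[Fix]:
        """Return the most recent fix at or before `when`, or None if there is none."""
        index = bisect_right(self.times, when)
        return self.fixes[index - 1] if index > 0 else None


def replay_time(start: float, wall_elapsed: float, speed: float) -> float:
    """Map wall-clock seconds since playback began to a time in the recording.

    A speed of 2.0 plays at 2x; a negative speed plays backwards.
    """
    return start + wall_elapsed * speed


track = BusTrack([(10.0, 35.0, -78.0), (11.0, 35.1, -78.1), (13.0, 35.2, -78.2)])
```

Each lookup costs a logarithmic number of comparisons in the number of fixes, so jumping to an arbitrary time stays cheap even with one fix per second per bus.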

With this project you can make a real impact on the operations of transit agencies across the country, including the Wolfline, by making it easier for transit administrators to review issues so that they can better understand their fleet and use that knowledge to improve service in the future.

This project will use the Google Maps API for the mapping component, with either JavaScript or Flash used on the client side with HTML and CSS, and Python (with Django) or PHP used on the Linux server side.

Serious Games: An Interactive Visualization of the Electrical Wiring Code

Project Description

The North Carolina State Board of Examiners of Electrical Contractors (NCBEEC) certifies electrical contractors by administering an examination to confirm that applicants possess the knowledge required by the National Electrical Code. Currently, test questions for this exam are randomly generated from a large examinations database and tailored for the level of certification being sought by the examinee. These questions are presented on a monitor. Examinee answers are input from a keyboard or from a paper copy of an answer sheet. All exam questions are multiple-choice, with a typical exam containing approximately forty questions.

The goal of this project is to develop an interactive 3-D graphic model of a one- or two-story single family dwelling (home) including a visualization of residential electrical wiring details. The intent is to develop this model as a proof-of-concept to determine if the electrical examinations administered by the NCBEEC can be given to potential electrical licensees based solely on the three dimensional model. The NCBEEC will provide questions and answers to be incorporated into the 3-D model. The model is to be developed so that visualizations of test questions relevant to individual room wiring can be randomly generated and swapped in or out at the discretion of NCBEEC or test administrator, without the need to redesign the home structure. For example, one question may ask about positioning of an electrical outlet near the vanity wash basin, while another may address the circuit breaker for a Jacuzzi tub in that same area. The 3-D housing model should have the capability to zoom in on a particular room or area in a room and then highlight a particular wall outlet, panel box, kitchen counter or special electrical appliance wiring, etc., that is the subject of the question.

The NCBEEC will form an advisory committee of electrical experts that will assist in guiding this project. This committee or designated representative will interact as often as needed with the project liaison to the board, project team advisor(s) and others associated with this project. NCBEEC is aware that at the beginning of the project, this interaction may be weekly.

Bridging Project Management Tools

A variety of on-line tools are available to assist tracking of project details such as task lists, resources required, deadlines, status, etc. These tools have general applicability in that all projects (e.g., build a bridge, fly a space mission, etc.) have steps, resources, time-lines, and other elements that must be tracked to facilitate project success. The types of projects of interest here, software development projects, are no exception. Most project management tools provide special components for, or can be adapted to, software development. One such system, Microsoft Project (MSP), is oriented to managers and provides features and spreadsheet-like computations important to overall project success. Another approach is illustrated by an issue-tracking tool, JIRA (a brief web search will turn up the curious genesis of the name “JIRA”). JIRA supports a subset of features that MSP supports, but presents features in a way that better suits developers in day-to-day project operations.

The overall goal of this project is to investigate the possibility of linking the best features of each of these systems. For example, if a developer reports some detail to JIRA, is it possible to reflect that detail in MSP? Similarly, if a manager changes some element of the project using MSP, is it possible to reflect that change in JIRA? Each of these systems is built around a database; each of these systems supports generation of XML files. Plug-ins are available to allow Microsoft Project files to be imported to JIRA. Can this process be automated? Is it possible to import JIRA-generated XML files and represent their content in MSP?

VPN access to local installations of MSP and JIRA is available for dedicated team use.

Objectives of this project are to investigate features of MSP that might have counterparts in JIRA, to investigate features of JIRA that might have counterparts in MSP, and to build an automated software bridge between the two systems. One important question to be answered concerns the update rate of information between the two systems. How fast can changes in one system be reflected in the other? These and other requirements are to be refined in consultation with the sponsors.
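As a rough illustration of one direction of such a bridge, the sketch below parses an XML export of issues and turns each issue into a task record that a scheduling tool could consume. The XML layout, the status-to-percent mapping, and all names are hypothetical; the real JIRA and MSP XML formats would need to be checked against the local installations.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List

# Hypothetical export format, invented for this sketch.
SAMPLE_EXPORT = """
<issues>
  <issue key="PROJ-1"><summary>Design schema</summary><status>Done</status></issue>
  <issue key="PROJ-2"><summary>Build importer</summary><status>In Progress</status></issue>
</issues>
"""

# Hypothetical mapping from an issue status to a percent-complete value.
STATUS_TO_PERCENT = {"Open": 0, "In Progress": 50, "Done": 100}


def issues_to_tasks(xml_text: str) -> List[Dict[str, object]]:
    """Convert each <issue> element into a task dictionary."""
    root = ET.fromstring(xml_text.strip())
    tasks = []
    for issue in root.iter("issue"):
        status = issue.findtext("status", default="Open")
        tasks.append({
            "id": issue.get("key"),
            "name": issue.findtext("summary", default=""),
            "percent_complete": STATUS_TO_PERCENT.get(status, 0),
        })
    return tasks


tasks = issues_to_tasks(SAMPLE_EXPORT)
```

Running a conversion like this on a schedule, and a matching one in the opposite direction, would give one way to measure how quickly a change in one system can appear in the other.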

TeraData Shopping Pal

Project Overview

The goal of this project is to develop an application targeted for the Android mobile phone that allows users to opt-in to receive offers from businesses based upon the user’s geographic location. The application, called Shopping Pal, will deliver location-based offers to consumers that have opted into this service. For example, a user driving within a pre-determined boundary around a Starbucks might receive an offer to purchase a Starbucks coffee at a discount.
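The "pre-determined boundary" check can be sketched as a great-circle distance test between the user and the business. This is a minimal illustration that assumes a circular boundary; the function names, coordinates, and radius are hypothetical, and an Android implementation would use the platform's own location services instead.

```python
import math
from typing import Tuple

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def distance_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, using the haversine formula."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def inside_boundary(user: Tuple[float, float],
                    store: Tuple[float, float],
                    radius_m: float) -> bool:
    """True when the user is within radius_m metres of the store."""
    return distance_m(user[0], user[1], store[0], store[1]) <= radius_m


# Hypothetical coordinates for a user standing at a store's location.
at_store = inside_boundary((35.78, -78.64), (35.78, -78.64), 100.0)
```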

Project Organization

The project will be run within the Agile Scrum framework. Agile development provides the benefits of early and consistent customer engagement. The Teradata representative will serve as the Product Owner for the Scrum team in order to provide application requirements and to assist with backlog grooming and acceptance criteria. Development will occur in two week Sprints. Planning for tasks will occur at the beginning of the Sprint, checkpoints and backlog grooming will be scheduled mid-Sprint, and demonstrations of progress will happen at the end of each Sprint in a Sprint review.

Sample code illustrating web service calls and interactions will be provided. Development will be done using the Android SDK and Eclipse IDE plug-in. Testing will be accomplished using the Android SDK emulator or Android phones, as appropriate.

Company Background

Teradata Corporation is the world’s largest company solely focused on raising intelligence and achieving enterprise agility through its database software, enterprise data warehousing, data warehouse appliances, consulting, and enterprise analytics. Teradata produces analytical solutions that leverage enterprise intelligence in order to solve customer business problems, including customer management. Customer management is the practice of building enduring and profitable relationships. Teradata Relationship Manager, which is developed here in Raleigh, is designed specifically to meet the needs of businesses in establishing and maintaining strong customer relationships. The Teradata Corporation has approximately 2400 employees worldwide. In the Raleigh office, Teradata has an organization of 100 employees with 50 software engineers.

Shopping Pal Requirements and User Stories

Technical Requirements

- The shopping pal customer user interface will be deployed as an Android mobile phone application.

- The shopping pal application will connect using a geo-location service to locate nearby businesses.

- The shopping pal application will connect to RESTful web services to retrieve application data including participating businesses and offers for stores.

- The shopping pal application will be able to run in the background to poll for new offers that have become available and display a visual indicator for the user.

Searching for offers

- As a user, I would like to view a list of nearby businesses that have offers available.

- As a user, I would like to view the offers available for a nearby business that I have selected.

- As a user, I would like to bookmark an offer from a nearby business so that I can access the offer information directly.

- As a user, I would like to get point-to-point directions to a nearby business that I have selected.

- As a user, I would like to subscribe to a service that will send me an SMS or text message when I am within range of a business with available offers.

Receiving automatic offers

- As a user, I would like to sign up to receive automatic offers for selected businesses when I am nearby.

- As a user, I would like to browse a list of all of the participating businesses.

- As a user, I would like to select businesses for which I would like to receive offers automatically.

- As a user, I would like to unsubscribe from the automatic offer service.

Publishing offers

- As a business, I would like to publish offers for customers.

- As a business, I would like to delete old offers that have expired.

- As a business, I would like to receive data on the customers that have signed up to receive offers.

Mondrian: Performance Test Harness

Problem Statement

Online Analytical Processing (OLAP) is an approach to providing access to business information for decision making that is summarized, multi-dimensional, and delivered at high speed. One capability offered by Thomson Reuters products is OLAP analysis against healthcare data, to better understand past history and project future needs and optimizations. Typical analyses would examine the cost of care, quality of care, access to care, or investigations of fraud, waste, and abuse. More detail is provided below about a product called Advantage Suite that allows sophisticated OLAP reports to be written and executed against a relational database.

One software technology used inside Advantage Suite is an open source tool called Mondrian (http://mondrian.pentaho.org/). Mondrian is a so-called measures engine that enables OLAP processing against a relational database by translating queries in the Multi-Dimensional Expressions (MDX) query language into standard SQL. Client tools submit MDX queries to Mondrian via the “XML for Analysis” (XMLA) web service interface. Thomson Reuters has made code contributions to improve the performance of Mondrian, and would like to see the Mondrian community adopt a performance test to guard against performance regressions as the code base continues to evolve.

The Mondrian code base already includes a sample “food mart” database that is used for functional testing. For performance testing, it would be desirable to have a database with a non-trivial amount of data, and a set of queries that constitute a representative workload. We believe that a draft standard benchmark TPC-DS from the Transaction Processing Performance Council (http://www.tpc.org/tpcds/default.asp) could provide such a foundation.

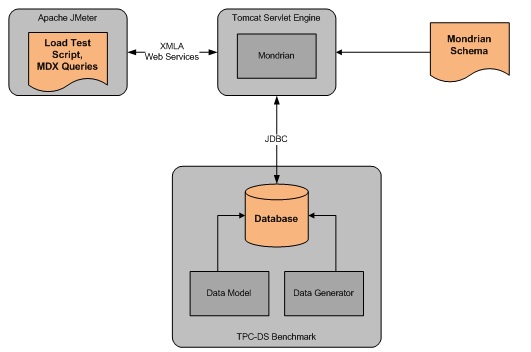

The goal of the senior class project is to create a performance test harness for Mondrian. Such a test should include:

- A sample relational database in a “snowflake” schema, with sample data, based on the TPC-DS draft standard or other suitable database.

- A Mondrian schema definition consisting of descriptive metadata for the measures and dimensions in the database.

- A set of test queries in the MDX language.

- A test script for the open source Apache JMeter load test tool (http://jakarta.apache.org/jmeter/) so that single user or multi-user workloads can be applied to Mondrian.

These components are depicted in the accompanying figure. The desired outcome is to end with throughput measurements for a multi-user query workload, and with recommendations for further refining the test harness.
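To show what a multi-user throughput measurement involves, here is a minimal sketch in which several concurrent workers each run the same list of queries and the harness reports queries per second. It is not a JMeter test script and it does not talk to Mondrian: the `run_query` callable is a stand-in for whatever submits an MDX query, and all names are hypothetical.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def run_workload(run_query: Callable[[str], None],
                 queries: List[str],
                 users: int) -> Dict[str, float]:
    """Have `users` concurrent workers each run every query once; report throughput."""

    def one_user(_: int) -> int:
        for query in queries:
            run_query(query)
        return len(queries)

    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=users) as pool:
        completed = sum(pool.map(one_user, range(users)))
    elapsed = time.perf_counter() - started
    rate = completed / elapsed if elapsed > 0 else float("inf")
    return {"queries": completed, "seconds": elapsed, "queries_per_second": rate}


def fake_query(mdx: str) -> None:
    """Stand-in for a real query submission; sleeps briefly to simulate work."""
    time.sleep(0.001)


result = run_workload(fake_query, ["q1", "q2", "q3"], users=4)
```

Repeating the run with one user and then with increasing user counts gives the single-user and multi-user comparison that the test harness is meant to produce.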

Student Insights Gained

Students will be exposed to business intelligence concepts such as data warehousing and OLAP while working on integration tasks associated with relational databases, Mondrian, and web services. All of this is in the context of performance engineering for a sophisticated open source project. At the same time, students will be working with Thomson Reuters staff members who are developing commercial software for one of the most dynamic areas in the U.S. economy – healthcare. Along the way students will be responsible for all aspects of technical design and implementation, interact with subject matter experts via conference calls, and present findings to company representatives.

Required Skills and Experience

Java programming and a basic level of familiarity with relational databases will be required. Thomson Reuters staff can provide samples and assistance in getting up to speed on Mondrian, MDX, and Apache JMeter.

Development and Test Environment

The software involved in this test is Java-based and works on all popular operating systems. A relational database such as MySQL will also be required. Student laptops or NCSU computers can be used for development activities. No access to Thomson Reuters servers will be required. All the required software is open source and can be downloaded from the web.

Background

Advantage Suite is the flagship product in the Payer Management Decision Support sector of the Healthcare business of Thomson Reuters. It consists of a suite of applications for healthcare analysts, and a component called Advantage Build, which is used to construct and regularly update a data repository that the applications query. Advantage Suite users can perform analyses of quality of care, access to care, and cost of care, conduct disease management and case management, initiate fraud, waste and abuse investigations, and much more.

Advantage Suite includes a powerful ad-hoc healthcare analytics tool, and belongs to the class of Domain Specific Business Intelligence (BI) software. Unlike other BI tools such as Cognos or Business Objects, Advantage Suite “speaks the language of the healthcare analysts,” allows the construction of sophisticated reports that resolve into a series of queries, and comes with a so-called Measures Catalog containing over 2,500 analytical metrics, including simple measures (“average length of stay of a hospital admission”), ratios/rates (“cost of healthcare expenditures per beneficiary per time period”), and definitions of populations (“people with Type 2 diabetes,” “asthmatics,” etc.), as well as more than 175 pre-defined report templates (“Prescription Drug Cost Monthly Trend Report,” “Top 300 Hospital Profiles”).

After the healthcare analyst has defined a report, she can immediately submit the report for execution, or schedule it via an agent. The report resolves into a series of queries that are run against the underlying healthcare analytics data mart. Reports produce on average between six and ten fact queries (i.e., queries that pull data from the fact tables), with some reports generating more than 100 queries.

Analysis and Reporting of Automated Testing Results

Tekelec is a Morrisville-based company with approximately 1,150 employees worldwide. Tekelec provides hardware and software that enable network operators (e.g. communications companies) to offer IP-based multimedia services while continuing to employ existing SS7-based infrastructure. Most of Tekelec’s solutions are required to be of five 9’s quality, so software and hardware verification is critical. Many of Tekelec’s software applications run on third-party equipment from vendors such as Hewlett-Packard. The operating system used on these servers is a Tekelec-created refinement of CentOS, which is in turn based on Red Hat Enterprise Linux. This OS is called the Tekelec Platform Distribution, or TPD.
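To put the five 9’s requirement in concrete terms, the sketch below assumes the common reading of the phrase as 99.999% availability (the description here does not define it) and converts that target into a yearly downtime budget. Under that assumption, five nines allows roughly 5.26 minutes of downtime per year.

```python
# Assumption: "five 9's" means 99.999% availability, measured over a 365-day year.
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def downtime_minutes_per_year(nines: int) -> float:
    """Yearly downtime budget for an availability of `nines` nines (5 -> 99.999%)."""
    unavailability = 10.0 ** -nines
    return MINUTES_PER_YEAR * unavailability


for n in (3, 4, 5):
    print(f"{n} nines: {downtime_minutes_per_year(n):.2f} minutes/year")
```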

Tekelec employs a small group of verification engineers whose primary assignment is to test new builds of TPD. There are often two active streams of TPD in development, and each stream is available in 32-bit and 64-bit builds. Each week’s software builds require testing on a variety of hardware platforms with multiple configurations. This results in a large verification matrix requiring the same or similar tests to be executed on different server types with different software builds.
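The growth of the verification matrix can be sketched as a Cartesian product of the dimensions described above. The stream and server labels below are hypothetical placeholders, and the count of three server types is an assumption made only for illustration.

```python
from itertools import product

# Hypothetical labels; the description gives only the number of streams
# (often two) and the two architectures.
streams = ["stream-A", "stream-B"]
architectures = ["32-bit", "64-bit"]
server_types = ["server-1", "server-2", "server-3"]  # assumed count


def verification_matrix(streams, architectures, server_types):
    """Every (stream, architecture, server type) combination that needs a test run."""
    return list(product(streams, architectures, server_types))


matrix = verification_matrix(streams, architectures, server_types)
print(len(matrix))  # 2 * 2 * 3 combinations
for cell in matrix[:3]:
    print(cell)
```

Each additional dimension (for example, multiple configurations per server type) multiplies the number of cells, which is why the same tests end up being repeated so many times each week.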

This Design Verification (DV) group must also verify several middleware components (such as a management GUI, a Tekelec-optimized database framework, and an installation service), which are released to application groups each week. The sum of these components is referred to as the platform, and it is essential that the platform released each week be stable across several server types.

The Platform DV group has employed automation over the years (for example, bash scripts to run repetitive tests), but in late 2009 the group began a large scale automation development effort. This effort is currently underway, and it employs technologies such as Apache, PHP, Perl, XML, jQuery, MySQL, Zend, Google Charts, Bash, and Selenium to provide the following capabilities to platform verification engineers:

- Creation and execution of test plans which are scoped automatically based on target hardware and software

- Collection of results of tests in a MySQL database

- Basic reporting of results on a real-time basis

- Basic management of users and servers

Because the system has been in place for months, and has been used on a daily basis, there are already over 500,000 individual test results in the database. At this time, the only analysis available shows the current execution status of a handful of test suites (that is, groups of test plans) on certain combinations of hardware, software, and architecture.
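The kind of status summary described above, grouped by combination of hardware, software, and architecture, can be sketched as follows. The row layout and the status values are hypothetical; the actual MySQL schema is not described here.

```python
from collections import Counter, defaultdict

# Hypothetical result rows: (hardware, software_build, architecture, status).
results = [
    ("server-1", "build-10", "64-bit", "pass"),
    ("server-1", "build-10", "64-bit", "fail"),
    ("server-1", "build-10", "64-bit", "pass"),
    ("server-2", "build-10", "32-bit", "pass"),
]


def status_by_combination(rows):
    """Count results per status for each (hardware, build, architecture) combination."""
    summary = defaultdict(Counter)
    for hardware, build, arch, status in rows:
        summary[(hardware, build, arch)][status] += 1
    return summary


summary = status_by_combination(results)
for combo, counts in sorted(summary.items()):
    total = sum(counts.values())
    print(combo, dict(counts), f"pass rate {counts['pass'] / total:.0%}")
```

With hundreds of thousands of rows, the same grouping would more likely be done in the database with a `GROUP BY` query; the in-memory version is shown only to make the shape of the summary concrete.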

The problem proposed for the Tekelec Senior Design Project is therefore to use the above-mentioned technologies (and others if necessary) to provide comprehensive analysis and reporting of the automation results for previous, current, and future Platform DV test activities. The goals of this analysis and reporting are as follows:

- Common resource for viewing records: A user of the automation system (not necessarily a verification engineer, but also a manager, project planner, executive, or other interested party) will be able to view results of test plans, related problem reports, and user comments. The user will also be able to view similar information for test suites.

- Graphical and visual displays: The user will be able to view the results using graphical methods such as offered by Google Charts or a similar utility. Interactive capabilities (such as click to drill down, drag and drop, etc.) could provide users with additional dynamic information.

- Trend analysis: The user will be able to track the progress of test plans and suites over time, which will enable more accurate supervision of ongoing tests and allow estimates of test execution for upcoming builds. Similarly, the user will be able to track problem reports and user comments associated with a test plan over time.

- Resource analysis: The user will be able to track the usage of test equipment on a historical basis, thereby allowing calculation of occupancy rate of available facilities, and estimates of future needs.

- Effort analysis: The user will be able to track the time required for engineers to execute various test plans, in order to improve estimates of future efforts.

- Customization: The user will be able to establish predefined common reports, allowing quick access to information needed on a regular basis.

- Publication: It will be possible to send reports to other consumers of this information, for example as a link in an e-mail.
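As one example of the analyses listed above, the occupancy rate from the resource analysis goal can be sketched as the fraction of a time window during which a piece of test equipment was in use. The interval representation is an assumption made for illustration; overlapping usage records are merged so that shared time is not counted twice.

```python
def occupancy_rate(busy_intervals, window_start, window_end):
    """Fraction of [window_start, window_end) during which the equipment was in use.

    `busy_intervals` is a list of (start, end) pairs in the same time unit
    as the window (hypothetical format, e.g. hours since the window opened).
    """
    window = window_end - window_start
    if window <= 0:
        raise ValueError("window must have positive length")

    # Clip each interval to the window and sort by start time.
    clipped = sorted(
        (max(start, window_start), min(end, window_end))
        for start, end in busy_intervals
        if end > window_start and start < window_end
    )

    # Merge overlapping intervals while summing their total length.
    busy = 0.0
    current_start = current_end = None
    for start, end in clipped:
        if current_end is None or start > current_end:
            if current_end is not None:
                busy += current_end - current_start
            current_start, current_end = start, end
        else:
            current_end = max(current_end, end)
    if current_end is not None:
        busy += current_end - current_start

    return busy / window


# Hours of use within one 24-hour day: (0, 4) and (2, 6) overlap and merge
# into (0, 6), plus (10, 12), for 8 busy hours in total.
rate = occupancy_rate([(0, 4), (2, 6), (10, 12)], 0, 24)
print(f"{rate:.1%}")
```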

This project will give the seniors the opportunity to apply knowledge and data modeling to an existing but dynamic collection of verification data. The resulting framework and user interface will become an important and regularly-exercised component of Tekelec platform verification.
