Projects – Spring 2021

Click on a project to read its description.

About Bandwidth, Inc.

Bandwidth is a software company focused on communications. Bandwidth’s platform is behind many of the communications you interact with every day. Calling mom on the way into work? Hopping on a conference call with your team from the beach? Booking a hair appointment via text? Our APIs, built on top of our nationwide network make it easy for our innovative customers to serve up the technology that powers your life.

Background and Problem Statement

Kari’s Law:

https://www.bandwidth.com/blog/karis-law-compliance-mlts-911-and-what-that-means-for-your-business/

Kari’s Law requires businesses using multi-line telephone systems (MLTS) to implement notifications to designated personnel when a 911 call has been made. These notifications can take the form of a phone call, email, SMS/text message, or conspicuous on-screen message.

Bandwidth currently has a solution for voice, email, and sms, but we don’t have a mobile-push-notification solution.

Project Description

We really need a Proof-of-Concept. We want to see if this is feasible to generate a mobile push notification with our API’s.

Adequate notification necessitates an awareness of end-point location. A simple notification without the location of the 9-1-1 call is rendered useless when the emergency takes place in a multi-floor building, large campus environment, warehouse, etc.

This means that your communication system will need to provide both notification of a 9-1-1 call, as well as alert someone as to where that call is coming from within your enterprise.

Possible Personas:

Hotel staff: Medical emergency happens on Hotel grounds and you need to direct staff to the location of the emergency.

Campus Security: Emergency happens and you need to direct campus security to an area of the campus that requires security keys.

On the Emergency-Voice-Services team, we save lives for a living. With larger organizations, like school campuses and giant hotels, you can’t expect emergency services to show up at the front desk and wait to figure out where a 911 call came from.

This feature/POC will assist organizations and emergency services with rendering help faster and directly to the location.

Technologies and Other Constraints

Super Flexible here.

Would encourage cloud technologies like AWS Amplify, Microsoft Azure Notifications Hub, or Google Firebase.

Super Flexible here. We would prefer Bandwidth API's to trigger the notification. We would expect at least 1 type of phone vendor as the end notification. This could also be a mobile simulator.

The cheaper the better.

About BCBSNC

BCBSNC is a healthcare insurance provider that needs to exchange data consistently and quickly with many other organizations.

Background and Problem Statement

BCBSNC is looking to expand use of private blockchain technology for sharing data with outside organizations. Configuration of the blockchain technology is very manual and we are looking for a way to simplify the administration.

Project Description

This project is a deep dive into the Hyperledger Fabric Blockchain technology and building graphical user interfaces to administrative command line tools. It will create a project that simplifies setting up a peer node and managing it using AWS ECS deployments.

Much of Hyperledger Fabric technologies are managed through cumbersome command line utilities and JSON / Yaml configuration files. This project aims to simplify the administration and reduce potential errors.

Technologies and Other Constraints

Required:

- Docker and AWS ECS

- Hyperledger Fabric

- YAML, JSON and Protocol Buffers

- Node.js and React

Flexible:

- Electron based client installed application

Desktop (preferred) or web-based

Background

Every semester, the Computer Science Department has to schedule all of the courses that will be offered in the next semester. Typically, this means finding an appropriate time for around 200 separate sections (lectures and labs).

The faculty members who teach these courses are available only on certain days and times, and classrooms are often in short supply. Furthermore, there are courses that should not be scheduled at the same time, because they are likely to be taken in the same semester by the same set of students.

Today, the course scheduling is done mostly manually, using Google Forms to collect faculty availability and (many) Google Sheets as simulated calendars into which courses can be placed and then moved around as needed.

Project Description

In this project, you will design and implement a web-based scheduling utility with a drag-and-drop calendar interface and the ability to enforce a configurable set of rules. The key features are as follows.

- The GUI will provide separate calendar-like views for scheduling tasks (e.g. one view will be "Monday-Wednesday courses on Centennial Campus"). One view will contain all the classes that have not yet been scheduled. This GUI will replace the current use of spreadsheets for placing courses and moving them around.

- The scheduling utility requires a database to store the contents of the scheduling views, the rules, and a few other tables of useful information, such as a list of the CSC faculty and a list of courses. In order to populate the database at the start of each semester, the utility shall read an input file (e.g. CSV or JSON) for each table. And to archive the data at the end of each semester, the utility shall be able to write its data to a set of files in the same format.

- A configurable set of simple rules will help the users to avoid scheduling mistakes. Some rules are generic, such as "an instructor can be in only one place at a time". Other rules are very specific, such as "CSC 316 and CSC 326 should not be scheduled at the same time". A key design challenge is to design the representation for rules and their user experience. (How are the rules viewed and edited? What happens when the user tries to schedule a course that would break a rule?)

Technologies

The scheduling utility should use Shibboleth authentication, and authorization can simply be part of the application configuration.

No other specific technologies are required. The platform, implementation language, front-end framework, and database choices should be made with two constraints in mind. First, the CSC IT staff will be hosting the scheduling utility when it is done. Second, we hope to use the scheduling utility for many years, so we value stable technologies that rarely introduce breaking changes.

About Laboratory for Analytic Sciences

The LAS is a mission-oriented translational research lab focused on the development of new analytic technology and intelligence analysis tradecraft. Researchers, technologists, and operations personnel from across government, academia and industry work in collaboration to develop innovations which transform analysis workflows.

Background and Problem Statement

Big data is complicated and hard to monitor. To adjust to large volumes of data from multiple sources, companies have built data pipelines to normalize and standardize data before ingesting into corporate data repositories (CDR). To speed up processing, each pipeline typically executes tasks on batches of files. Some examples of processing include:

- Transforming the data into a new format (e.g. JSON to csv)

- Removing duplicate data within a batch (e.g. after removing a column or two you may have the same information repeated)

- Data validation - confirming field values are non-empty and conform to standards

To monitor the data pipeline, CDR managers are often interested in summarized information such as:

- Total amount of data / batches processed

- Specific data batches that have errored during processing

- Validation of data distributions for a longer time period than a batch (e.g. daily, weekly, monthly, yearly, etc)

- Data throughput (e.g. are we processing at the same rate as last week?)

In large repositories fed by numerous pipelines of inhomogeneous data, CDR managers have need for even more complex metrics:

- Overall distribution of data, to align with corporate priorities (e.g. keeping a ton of log data when it’s not being used / low return on investment)

- Overall data rates - where do you experience processing bottlenecks?

Can a user-friendly UI be developed to enable pipeline owners/CDR managers to centrally manage monitoring and data quality information?

Project Description

The student team will need to create a web application with an API, database, and UI, having the following functionalities:

- Ingest, process, store, and export data collected from a hosted data pipeline. The data will mainly be used to monitor the system health of data pipelines.

- Control access to information associated with individual data pipelines (e.g. very simple database table that has users and permissions -- no need to create a complex user management features)

- Minimal effort: SQL scripts to update a database table

- A little effort: Python CLI to update the tables

- More effort: UI to manage

- Display the ingested data within a tabular grid (e.g. ag-grid)

- Create an API endpoint with associated script

- The owner should be able to copy & paste and save the script in the UI (e.g. ace editor)

- Sending data to this particular API endpoint will run the script with the data as input and return the result as the response. Example usage include:

- Have we seen this particular piece of data before? (hashed info) Run this data through a machine learning algorithm

- View/log the last N number of requests and the responses

- Create monitoring scripts for an associated data pipeline

- Ability to copy & paste and save the script in the UI (see above). Notification API used within the script will allow emails sent to the user

- Set scheduled times for the script to run

- For specifically generated output, allow the data pipeline owner to view the information in an interactive graph (e.g. plot.ly)

The UI/API developed will enable CDR managers and data pipeline owners to monitor and manage their systems with greater effectiveness. Specific examples include:

- Automatically detect processing errors within a data pipeline

- Automatically get notified when data quality has changed

- Capability to add machine learning and custom API responses to dataflowse.g. De-duplicate data across batch processing

Technologies and Other Constraints

Below are acceptable options for various components.

- Database - SQL based (e.g. MariaDB, Postgres, MySQL)

- Backend - Python (Flask or Django), Node.js (Adonis), or PHP (Laravel)

- Frontend - Vue.js, Angular.js, Angular, or React

- End result should be using Docker

- To bundle frontend - webpack (suggested not required)

Web application targeting the latest Chrome or Firefox version.

Public distributions of research performed in conjunction with USG persons or groups are subject to pre-publication review by the USG. In the case of the LAS, typically this review process is performed with great expediency, is transparent to research partners, and is of little to no consequence to the students.

About Laboratory for Analytic Sciences

The LAS is a mission-oriented translational research lab focused on the development of new analytic technology and intelligence analysis tradecraft. Researchers, technologists, and operations personnel from across government, academia and industry work in collaboration to develop innovations which transform analysis workflows.

Problem Statement

Imagine overhearing the following conversation:

GEORGE: Jerry! Jerry, my hair-dryer ruined the picture! And I need another one or I can't get back into the forbidden city!

JERRY: Who is this…

GEORGE: Jerry! I need you to get another picture of Man-Hands. I'm begging you!

JERRY: If I get it for you, will you take me to that club and show me a good time?

GEORGE: Yes! Yes, all right--anything!

Now, imagine being asked to explain what they are talking about.

Finally, imagine that this conversation is not taking place in your native language.

Language Analysts frequently encounter this situation in their work. Trying to understand verbal communication is like trying to understand a random scene from a random season of a never-before-seen TV show--in a foreign language. Not only does a language analyst have to translate the foreign language into English, they need to add meaningful context to their transcripts so others will understand what is happening. Who is related to whom? What is the overall context? What code words do they use? Who lies to whom? Etc Depending on an analyst's level of expertise and the obscurity of the relevant information, adding this type of contextual information to transcripts can be very time-consuming, requiring research across various tools and resources.

By automatically extracting and organizing important contextual information from previous conversations or communications, relevant information can be suggested to analysts as they do their work. Ideally, this complex, interrelated data will be stored as a Knowledge Graph rather than a more traditional, relational database. Knowledge Graphs are a collection of interlinked relationships of entities, such as people, events, products, facilities, and religious or political groups. Most of the current open-source packages and models in the space of Natural Language Processing (NLP) and Knowledge Graph formation focus on extracting structured knowledge from narrative text such as news articles or Wikipedia. Extracting knowledge from dialogue is a more challenging problem, and the proposed senior design project addresses one challenge in this space.

Project Description

Typically, a Knowledge Graph dataset is comprised of sets of triplets consisting of subject, predicate, and object parts of speech. For example, such triplets might include (Elaine, works at, Pendent Publishing) or (Jerry, is neighbors with, Kramer). Visually, you could represent this knowledge and these relationships with a graph, where the subject and object of triplets are nodes and the predicate is the edge connecting the nodes.

Automatically collecting all subject-predicate-object triplets using NLP techniques in dialogue yields many "uninteresting" triplets. This process is relatively successful on wiki articles or news stories since most sentences in such sources contain important long-term knowledge--not a lot of filler. In dialogue, however, many triplets such as (George, ran into, Tim) have only short-term relevance; this triplet might be important in that moment, but is likely not something we need/want to store long-term in a Knowledge Graph.

Students working on this project are asked to develop techniques using rule-based or AI models to extract only the triplets with long-term relevance from dialogue data. Sponsors will provide some basic suggestions regarding how to assign values to triplets, though students will be encouraged to follow their intuitions to design their own method(s). Students will be given access to a complete set of Seinfeld episode transcripts with which to work. The prevalence of inside jokes and nicknames in the show is a common feature of dialogue; understanding these types of references is one of the key challenges for language analysts.

Students working on this project will likely want to begin by learning how to use some of the popular, open-source NLP packages currently available. By executing these state-of-the-art models and techniques on both narrative text such as news articles as well as on Seinfeld dialogue, students will be exposed to the current NLP capabilities as well as limitations.

Processing the text in order to automatically extract subject-predicate-object triplets will likely require named entity recognition as well as some coreference resolution, both of which are non-trivial tasks especially when dealing with dialogue data. If time permits and there is interest, students are encouraged to explore these topics as well. To facilitate this project, however, students can be provided with a NER model that has been specifically trained to perform well on dialogue data and works with the Python spaCy NLP package.

Students will need to develop criteria to determine which triplets are worth storing in a knowledge graph, and which are not. As mentioned above, sponsors will suggest several basic rule-based approaches but students will be encouraged to formulate their own methodology if intuition strikes. If the team desires, and time-permitting, the sponsors can request that language analysts label a set of triplets created by the students to enable more complex supervised ML approaches for triplet filtration, perhaps employing word embedding techniques to determine semantic similarity.

If time remains, stretch goals are plentiful for this project. Application of graph/network algorithms (clustering, community detection, centrality, distance, etc) could be used to enable a variety of features and to help measure the performance of the graph filtration methods developed by the students. Creating data visualizations to display results of this work would also be desirable, though development of a UI is not a current goal.

To test KG triplet filtration techniques, we will be using transcripts from the television show Seinfeld. The data set includes:

- 9 seasons (180 episodes) of dialogue from Seinfeld, most of which have been manually cleaned to ensure consistent formatting

- 4 of these seasons have also been broken into scenes (455 scenes) and manually annotated with contextual comments of the type that an analyst might be required to insert into a transcript

- episode synopses scraped from Wikipedia, with information about the "real world" (ie, actors who played characters) removed, so that the synopses focus only on the Seinfeld Universe

Benefits to End Users

The capability to automatically generate a relevant knowledge graph from transcription data could support any number of subsequent applications. The application focus of this project is to enable language analysts to efficiently annotate transcriptions with supplementary contextual information that may be critical to a reader in understanding the true meaning of the dialogue.

Technologies & Other Constraints

- General Development – Prefer Python, but others are fine (C/C++/Java/etc)

- KG Database – Many options exist (Grakn, Neo4j, GraphDB, KGTK, even a basic triple store, etc). We anticipate exploring and discussing the pros/cons of these options

- Containerization – Docker - requirement

- Cloud storage – Not a requirement, but an option if students desire

- Interface – Linux command-line interface is acceptable though an extremely elementary GUI could be developed for demonstrative purposes

- Preferred paradigm: Desktop, command-line interface is acceptable.

Public distributions of research performed in conjunction with USG persons or groups are subject to pre-publication review by the USG. In the case of the LAS, typically this review process is performed with great expediency, is transparent to research partners, and is of little consequence to the students.

Background

Surprisingly, perhaps, the most common format for data sharing among scientists, economists, and others may be the lowly CSV (Comma Separated Values) file format. And the most commonly used tool for basic data science seems to be the spreadsheet, despite the fact that Microsoft Excel is known to misinterpret certain kinds of data read from CSV files. Even worse, the data is mangled silently by Excel, which often results in the corrupted data being saved by person A, who then exports a CSV file with some bad data to share with person B. The genetics community has been hit hard by this, with many data files shared online, e.g. through conference web sites, containing bad data.

Some of the most common issues when spreadsheet users import CSV files are:

- Gene names are misinterpreted as dates by Excel. (Source: https://www.biostat.wisc.edu/~kbroman/publications/dataorg.pdf)

- Large integers can cause a variety of problems, such as automatic conversion to floating point with a loss of precision. Also, many long integers are actually identifiers (e.g. serial numbers, product codes, account numbers) in which leading zeros are important. Excel will remove leading zeros, resulting in data loss. (Source: https://www.onlinesurveys.ac.uk/help-support/common-csv-problems/)

- Different locales having different formats (e.g. for dates and currency) and separators (e.g. the "thousands separator" may not be a comma), which can confuse Excel. (Source: https://news.ycombinator.com/item?id=12041655)

- IT security is also an issue when spreadsheets like Excel read data from CSV files. A "CSV Injection Attack" occurs when an Excel formula is present in the data file. (Source: http://georgemauer.net/2017/10/07/csv-injection.html)

You would think that anyone working with data would simply avoid Excel. However, most other spreadsheets have reproduced the same bad behavior in order to work the way people expect a spreadsheet to work, which is the way the Excel works.

We can help economists, scientists, and many other people avoid spreadsheet-induced data corruption by building a tool that processes a CSV file to change the way data is represented such that it can be safely imported into Excel and other spreadsheets, like Google Sheets. We can also detect and mitigate CSV Injection Attacks.

A prototype of such a data pre-processing tool has been written in Python (by a former NCSU student) using the Rosie Pattern Language to detect a variety of data formats. This project can leverage that prototype to whatever degree it proves useful.

Project Description

In this project, you will design and build a solution for the pre-processing of CSV files for data science. Your solution will handle the problems listed above and others, as demonstrated by the prototype that you will be provided. The functionality should surpass that of the prototype in several ways. First, it must be transparent and configurable, meaning that statistical inferences and thresholds will be visible and changeable. Second, it should be extensible (by a sufficiently knowledgeable user). Third, the functionality should be accessible from the command line, from an Excel plug-in, and via a web page where users can upload files for analysis and processing, with a cleaned-up file available for visual inspection or download.

Technologies

The only required programming technology is the Rosie Pattern Language (https://rosie-lang.org), which has a library of patterns to recognize common date and time formats, as well as many others. You may need to write new patterns (which are similar to regular expressions) in your work.

Both Excel and Google Sheets should be used for testing.

Python is a candidate implementation language, due to its ubiquity and popularity in the data science community, although this is not a hard requirement.

About Siemens Healthineers

Siemens Healthineers develops innovations that support better patient outcomes with greater efficiencies, giving providers the confidence they need to meet the clinical, operational and financial challenges of a changing healthcare landscape. As a global leader in medical imaging, laboratory diagnostics, and healthcare information technology, we have a keen understanding of the entire patient care continuum—from prevention and early detection to diagnosis and treatment.

At Siemens Healthineers, our purpose is to enable healthcare providers to increase value by empowering them on their journey towards expanding precision medicine, transforming care delivery, and improving patient experience, all enabled by digitalizing healthcare.

Background and Problem Statement

Our service engineers perform planned and unplanned maintenance on our imaging and diagnostic machines at hospitals and other facilities around the world. Frequently, the engineers order replacement parts. The job of our department, Managed Logistics, is to make the process of sending these parts to the engineer as efficient as possible. We help to deliver confidence by getting the right part to the right place at the right time.

In order to provide the quickest and best service possible, we have a network of smaller warehouses around the country, keeping frequently needed parts closer to our customers than they would be in one central warehouse. Our goals are to verify whether our current warehouses are in a location that minimizes wait for our customers and to determine the best possible locations for additional warehouses.

Project Description

We are looking for software that can make use of our recent order history along with traffic data in the contiguous 48 states to show optimal depot locations. The software will have a user interface that allows users to input constraints and variables, and displays potential locations on a map.

One great way this will help us is to solve the problem mentioned in the problem statement. The more efficiently we can deliver spare parts, the quicker our engineers can have medical machines back online for hospitals.

Technologies and Other Constraints

We hope the students will be able to determine the right technologies to use.

Web-based using a maps/traffic API

Non-disclosure Agreement will be provided

Skyward Federal

Skyward Federal leads development of secure cloud-fusion technologies supporting sensitive data processing. We develop modern & secure infrastructure along with privacy-centric applications to help our clients operate securely and efficiently.

The NCSU team project will focus on developing and enhancing the next generation of secure multi-tenant cloud compute. In particular, the NCSU team will help develop a platform and pipeline to facilitate rapid application development and deployment for security-minded clients and facilitate secure data transportation and separation.

Problem Statement

SELinux is used to provide secure mandatory access control (MAC) in industries that rely heavily on confidentiality and integrity of data. SELinux assumes that multiple nodes use the same security policy when transferring data from one node to another. There is no mechanism in SELinux to coordinate security policies between multiple machines. This lack of coordination presents a challenge to both security and performance of any horizontally scalable system using SELinux; thus there must be a method to ensure SELinux security policies are applied uniformly across all nodes in a system.

Project Overview

To coordinate across multiple nodes, the development of an SELinux policy server with the Linux kernel module is needed. This policy server will operate similar to the Windows Network Policy Server such that security policies are immutably enforced across nodes.

NCSU students will first familiarize themselves with the process of developing Linux kernel modules as well as developing SELinux security policy modules. Skyward will provide resources to assist in this process.

The Policy Server will consist of two components: a server and client. The server will be deployed on a single machine which will also contain the source code for an SELinux policy module. The server will have the capability to compile and push a .pp file (SELinux policy package) to multiple machines running the client. The client will be notified of this change and build the policy module to the local node.

Project Benefit and Utility

The successful project will serve as a prototype for further policy server development with production multi-node applications that rely on mandatory access control to enforce data separation among multiple types of users through SELinux. The knowledge and experience during development will be invaluable as students continue in the field of secure software development.

Constraints

- The senior design team shall develop the SELinux policy server and clients as Linux kernel modules on virtual machines running either CentOS 7 or CentOS 8 unless another OS is agreed upon.

- The policy server and client shall be developed as Linux kernel modules. C or Rust is recommended as the language to use for writing these modules.

- SELinux policy modules shall be written using the SELinux Kernel Policy Language.

Background

Bank of America provides banking services to millions of customers. Within the security division, we are responsible for protecting the assets for those customers against all possible forms of cyberattack.

Problem Statement

Search the GitHub repository on a regular cadence for code that can potentially harm the bank.

Project

Products exist to analyse code downloaded from GitHub for malicious content. What we are interested in is going a step further while also being more specific to interests of the bank. We would like to search GitHub for code that contains content that might be harmful to the bank. Examples of this include: the development of an app that looks similar to Bank of America’s app, the use of BofA’s logo or name in any code, the use of credentials related to BofA (e.g., hardcoded username/passwords that are used to access bank assets, or that could belong to BofA employees or clients), or any code that looks like it is intended to access bank assets or attack the bank.

Basic functionality:

- Code will need to be developed that searches through GitHub for possible bank references, and so will need to operate at this scale

- Students will need to develop code that can alert (via screen and/or email) when questionable code has been identified. Alert should indicate why the code has triggered an alert.

- Needs to be able to run continuously.

- Initial target: identification via regular expression of bank references (e.g., BofA, Bank of America, etc.) in code comments or even code (e.g., BofA as a variable name, or references to bankofamerica.com)

- Code that might not reference BofA directly, but might contain comments that demonstrate that they are generic to attacking banks, or might be aimed initially at other banks but could also be turned against BofA

- Needs to be modular so that it can be extended easily with other types of code analysis

Front-end interface:

- Display as a web page that accesses historical data

- Provide a dashboard showing the types of trigger code that has been identified, the numbers of repositories in each category, the date for the most recent addition to that category, etc.

- Provide ability to drill down into the details for any individual repository that has been identified

Testing:

- Testing can be performed against, for example, a generic GitHub repository set up by the students with different types of trigger code, and against a subset of the GitHub repository

Stretch Goals/Functionality:

- Code that includes the bank’s logo

- Code for mobile apps that look like it might be emulating BofA’s mobile app

- Code that is being directed towards the bank (e.g., password guessing tools developed to specifically target the bank)

Technologies and Other Constraints

- Technologies: Python, GitHub API, potentially others as recommended by students.

- Constraints: results are easier to import into the bank if they are not containerized.

- Other limitations: the students will need to sign an NDA and assign IP rights to the bank.

Problem Statement

Timely, meaningful assignment feedback is critical for students enrolled in introductory programming courses. At NCSU, some CSC116 instructors have begun incorporating automated feedback for students through NCSU GitHub repositories. Feedback often includes descriptions of Checkstyle issues, messages that indicate whether the correct project structure is being used, and whether the student code meets the teaching staff design. A Jenkins continuous integration server is used behind-the-scenes (students know there is automation, but are not aware of the continuous integration server) to help facilitate the generation of feedback. Feedback is currently provided in plaintext format through a ‘feedback’ branch of each student repository, but more structured/detailed feedback reports could be beneficial for both students and teaching staff members.

Project

For this project, the senior design team should create a web application to facilitate automated, meaningful feedback for students in introductory programming courses. The system should allow teaching staff members to:

- Upload a course roster

- Create and configure assignment details

- View reports of student performance/progress

The system should allow students to:

- Review feedback about any compiler errors

- Review feedback about GitHub directory structure/contents

- Review JUnit test case statistics

- Review Checkstyle violations

Technologies

For this project, Java is the required primary language.

Problem Statement

Continuous integration tools are often used in industry to facilitate software development. At NCSU, several undergraduate courses incorporate the use of version control, continuous integration, and various other tools to promote software engineering best practices. However, adapting these tools for use in academia and configuring the tools for different courses can be time consuming and challenging. For example, in a course with 250 students, each coding assignment might require using multiple scripts to manage tasks such as creating 250 different repositories, creating 250 unique Jenkins jobs, closing repositories after a deadline, extracting information necessary for grading, and generating feedback PDF files for students. If any student requires individual extensions on an assignment, a member of the teaching staff must manually execute the steps required to reopen/close repositories, retrieve grading information, and generate PDF feedback files.

Project

For this project, the senior design team should create a web application to facilitate assignment management for courses that use GitHub version control and Jenkins continuous integration servers. In particular, the software should allow teaching staff members to:

- Upload a course roster

- Create repositories in NCSU GitHub for each student

- Close repositories after deadlines pass

- Create Jenkins jobs for each student repository

- Enable individual student extensions

- Retrieve data necessary for evaluation, grading, and similarity detection

Technologies

For this project, Java is the required primary language.

About EcoPRT @ NCSU

Autonomous vehicles technology is maturing and could offer an alternative to traditional transit systems like bus and rail. EcoPRT (economical Personal Rapid Transit) is an ultra-light-weight and low-cost transit system with autonomous vehicles that carry one or two passengers at a time. The system can have dedicated guideways or alternatively navigate on existing roadways where the vehicles are routed directly to their destination without stops. The advantages include:

- Dual mode – existing roadways and pathways can be used for low install cost in addition to elevated roadways at a lower infrastructure cost than existing transit solutions

- A smaller overall footprint and less impact on the surrounding environment so guideway can go almost anywhere.

The research endeavor, EcoPRT, is investigating the use of small, ultra-light-weight, automated vehicles as a low-cost, energy-efficient system for moving people around a city. To date, a full-sized prototype vehicle and associated test track have been built. For a demonstration project, we are aiming to run a fleet of 5 or more vehicles on a section of Centennial campus. The Vehicle Network server will serve as the centralized communications and vehicle routing solution for all the vehicles.

Background and Problem Statement

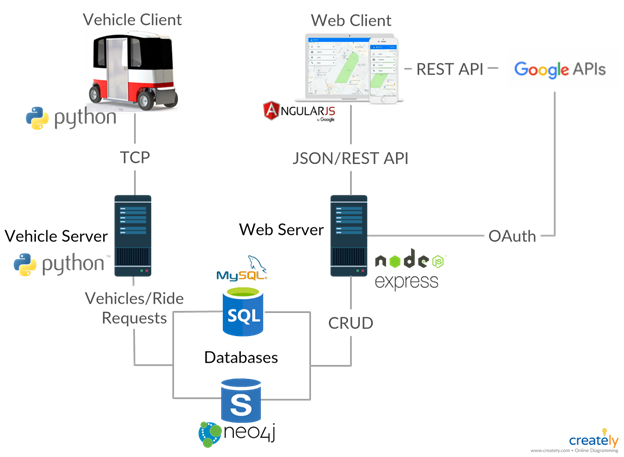

With the aim of running a multi-vehicle live pilot test on Centennial Campus, the overarching goal is to create a Vehicle Network Controller (VNC) and the associated Robot Operating System (ROS) vehicle client software to guide the vehicles and provide interaction to users. Please refer to the architectural diagram below showing the server architecture of the solution. The VNC will manage a fleet of cars, dispatching them as needed for ride requests and to recharge when necessary. It will also provide interaction to users to both make these requests and manage the network itself.

The work on the VNC would be a continuation of the work from another senior design team from Spring 2020. The current VNC solution provides a means for administrators/users and vehicle clients to interact with the server in different ways. Though still considered an alpha stage at this point, there is a need to further develop the VNC to make it ready to be used with physical vehicles.

Project Description

The goals of the project involve further enhancing, improving, and testing the system as a whole. Specific goals are outlined below:

- build out logic to assign vehicles upon demand.

- build out database to keep updated vehicle status current

- build instances of emulated vehicles. Consider how this can be done either through either a docker, a virtual OS, or running multiple instances on Linux

- simulate multiple vehicles on network. There are a number of smaller details that need to be worked out to full simulate:

- emulate ride requests

- generate multiple vehicle instances

- develop KPI’s for success (i.e. dwell time, ride time)

- explore the vehicle routing algorithm

Much like a smaller version of an autonomous Uber network, the vehicles are confined to a certain area or fixed route and need to be routed according to rider requests. Further building out and simulating the solution would be the next step with an overall goal of doing a pilot of multiple EcoPRT vehicles on campus.

Technologies and other constraints

NodeJS, Python, NPM, MySQL, Neo4j, Bootstrap, AngularJS, Express, HTML5, REST, Socket.io ,CasperJS, Mocha, C++, ROS

Linux server, web-based mobile, virtualization

Background

LexisNexis® InterAction® is a flexible and uniquely designed CRM platform that drives business development, marketing, and increased client satisfaction for legal and professional services firms. InterAction provides features and functionality that dramatically improve the tracking and mapping of the firm’s key relationships – who knows whom, areas of expertise, up-to-date case work and litigation – and makes this information actionable through marketing automation, opportunity management, client meeting and activity management, matter and engagement tracking, referral management, and relationship-based business development.

The key to successful business development is the strength of your engagements with prospective clients and how that changes over time.

LexisNexis InterAction® has an algorithm that, on the basis of meetings, phone calls and email exchange, calculates the strength of an engagement between two individuals.

Whilst working at a large law firm, however, what is more significant in the overall health of the engagement (for example: robust, fragile, etc.) between all members of the firm and all employees of a company.

There may be gaps in the engagement between different legal practice teams, or excessive dependency upon the engagement of a few key players.

Project

After taking an anonymized set of engagement data from a large law firm and showing the individual connections with employees at different companies, a solution would then identify and classify repeating patterns in the engagement graphs between the firm and a company, looking for risks (e.g. should a key node be removed) or opportunities (e.g. where the engagement or experience in one area could be used to strengthen another). An existing system visualizes small networks of relationships, which could be reused for the current project.

It is anticipated that some element of Machine Learning may be necessary as part of this project.

Support will be provided by Product Development and Data Science teams at LexisNexis.

Data Sources

An anonymized engagement data set will be provided, together with metadata to identify practice area and company.

Technology

The team may choose their technology stack with any mix of Javascript, Python, and C#.

Angular 9 should be used for any web-based front end.

Ngx-charts and D3 should be used for any visualizations necessary.

About Merck

Merck's Vision is to discover, develop and provide innovative products and services that save and improve lives around the world. Merck's IT cyber organization is charged with protecting Merck's resources, processes, and patients by ensuring our technology is protected from unauthorized outside entities.

Project

Merck would like to investigate developing a web based application for entitlement management (managing access to software, tools, resources, etc.). The senior design team will create a component architecture for the entitlement management system, then begin implementing the components in collaboration with the sponsor.

The idea is based on a few basic premises.

- Both business users and partner delegates must be able to manage the provisioning of users to entitlements

- The entitlement management system must be able to identify who is provisioned to what resource at any point in time, at any point in time.

- Rules for the engagement determine if changes to the metadata about provisioning events have downstream implications to historical provisioning (for example, a resource upgrade might restrict access to the resource until training requirements have been met)

- Provisioning events live within an active workflow, and that workflow remains open until the deprovision of the entitlement is closed (for example, a chemist’s access/entitlement to specific resources is removed at the end of the chemist’s scheduled 6 month engagement with Merck)

- The system must be able to handle user-initiated provisioning (e.g., a user requests access to a particular system) as well as event based provisioning (e.g., a new employee or company is contracted and automatically given access to the relevant system resources).

The Building Blocks

More detailed examples will be provided during the initial sponsor meeting about the following components of an entitlement management system:

- Kit – The kit is the layer of the architecture that executes the actual provisioning to the entitlement.

- Enterprise – enterprise business rules to provision to this entitlement (for example, a user cannot be provisioned to a system resource until a related training course is completed; a user cannot be provisioned to any system resource until a non-disclosure agreement is signed by their company)

- Application – application business rules to provision to this entitlement

- API – API or workflow to provision to this entitlement

- Package – The package is a collection of kits created by a business user for a specific role on an engagement. The package becomes the assignment to a given role on a project (for example: project manager, bench chemist, biologist, manager, etc.). The Package becomes the container for the metadata necessary to provision to the kits.

- Business Engagement – The Business engagement is the collection of the packages and metadata about the engagement pertinent to both provisioning and compliance.

- Engagement details (Business Area, Business Owner, Authorized provisioners both employee and delegate, duration, etc..)

- Engagement roles (roles are assigned to package templates and adjusted based on need)

- Engagement rules (things like do not adjust history, or adjust going forward, or do not adjust provisioning based on metadata changes. Or a flag for don’t provision until engagement start date, what to do on engagement end date)

- Entitlements data lake – This is the authoritative source of all provision activity

- Workflow engine – This is a comprehensive workflow engine that will manage entitlement events to their conclusion.

- Rules engine – a way to easily build rules that can be executed as code

We envision a few interfaces to this application:

- Application Owners would build the kits for their entitlements

- Enterprise governance would own the enterprise rules creation for the kits

- Engagement leads would assemble the kits into packages as the role of the engagement became clear

- Engagement Owners would build the engagements

- Provisioning Delegates would provision users to the engagement and assign them to

Background and Problem Statement

Have you been finding yourself putting together lots of jigsaw puzzles lately?

A common strategy applied to put together jigsaw puzzles is to form the edges of the puzzle first. Finding all the 'straight edge' pieces just to create the border can be challenging! They can really get lost in those large 1000+ puzzles. Even after touching every piece, it’s easy to struggle to find edge pieces.

Project Description

We would like students to create an augmented reality mobile app that can help users find straight edges in puzzle pieces through the mobile device’s camera. Once a straight edge piece is found, the camera view can highlight identified pieces with some visual indication, such as by lighting up the pieces’ outline.

Beyond finding edge pieces, perhaps this app could also capture a scan of an open piece on the board that has all four sides in place (easy to find) to maybe just one open side (difficult). These open slots could be scanned and locked in and then compared to the available pieces to find possible fits. This could be based on the unique outline of the piece, but might also include visual features to increase the probability of a match.

Technologies and Other Constraints

Students are welcomed and encouraged to find and use the appropriate technologies to solve this problem!

STUDENTS WILL BE REQUIRED TO SIGN OVER IP TO SPONSOR WHEN THE TEAM IS FORMED.

Background

XVIII Airborne Corps rapidly deploys ready Army forces in response to crisis (manmade or natural) or contingency operations anywhere in the world by air, land or sea, entering forcibly if necessary, to shape, deter, fight, win, and successfully resolve crisis. The Corps headquarters provides mission command as an Army, Joint or Combined Task Force headquarters.

The XVIII Airborne Corps is “America’s Contingency Corps” and Fort Bragg is the home of the Army’s Airborne and Special Operations Forces. When America needs her armed forces in a hurry, the first phone calls come to us. The Corps, the Army’s strategic response force, is skilled, tough and ready around the clock to deploy, fight, win and successfully resolve crisis. Fort Bragg is the Army’s flagship power projection platform that provides world-class support to America’s Airborne and Special Operations warriors and their Families.

Problem Statement

How does the XVIII Airborne Corps deploy at a moment’s notice anywhere in the world with enough relevant social context to rapidly gain and maintain positive and effective influence that shapes perceptions on the ground and ensures mission success?

The XVIII Airborne Corps’ mission is to deploy troops to unstable and hazardous areas within hours of notice. Because of the quick response nature of this mission, it is critically important for the division to be able to have as in-depth of an understanding of the deployment zones. Such understanding is multifaceted including: weather and climate, geographical, political, economic, and social considerations. While well-established systems abound for most of these issue areas, determining the social climate is increasingly complex in a world dominated by the relentless pace of social media. For small conflicts, this can even break down to the microscopic level of determining the beliefs, positions, and attitudes toward certain topics in specific social circles. Additionally, unlike the geography of the area which mostly changes at a glacial cadence, social mood and structures are extremely dynamic. Capturing this social climate is incredibly powerful information for administrators and soldiers to have as they respond to crisis.

Project

Develop a web-based application to provide both a list-based and geographical view of prevailing social sentiment/concerns, influencers, conduits, and mediums (i.e. social media posts) within a given geographic region. Such tweets and markers should be annotated with emotional and sentiment text analysis. Initially, the project shall take in a list of accounts to follow, the system should search for additional accounts based upon social networks and geographic regions. The system should utilize automated text translation services to convert foreign languages into English.

Requirements:

- Sentiment analysis

- Local concerns

- Key influencers (these may shift from peacetime to crisis)

- government and non-government

- segmented by topic/issue

- segmented by demographic

- Conduits

- Mediums (Apps, etc)

About ISE

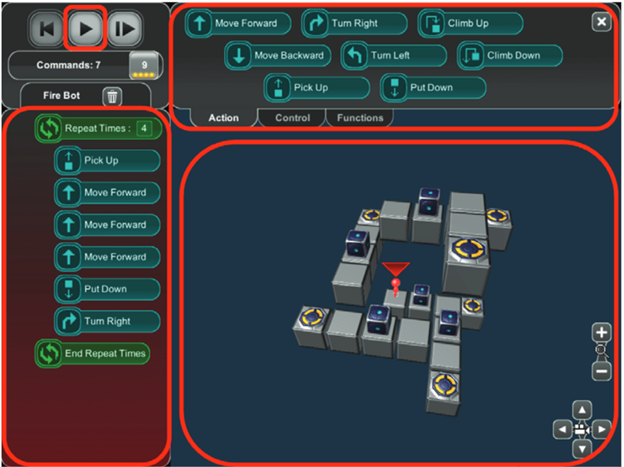

Our current project which is in collaboration with Intel, and sponsored by the National Science Foundation, seeks to develop tools to support the management of new product introduction in high-tech industries. This involves the collaboration of multiple autonomous engineering and manufacturing groups. The project seeks to construct a role-playing game environment to help both researchers and practitioners understand the complexities of the problem, and the importance of collaboration to an effective solution.

Background and Problem Statement

The two principal organizational units involved in new product introductions in high tech firms, such as semiconductor manufacturers, are the Manufacturing (MFG) unit and a number of Product Engineering (ENG) units. Each Product Engineering unit is charged with developing new products for a different market segment, such as microprocessors, memory, mobile etc. The Manufacturing unit receives demand forecasts from the Sales organization, and is charged with producing devices to meet demand in a timely manner. The primary constraint on the Manufacturing unit is limited production capacity; no more than a specified number of devices of all sorts can be manufactured in a given month. The Product Engineering units have limited development resources in the form of computing capability (for circuit simulation) and number of skilled engineers to carry out design work. Each of these constraints can, to a first approximation, be expressed as a limited number of hours of each resource available in a given month.

The Product Engineering groups design new products based on requests from their Sales group. The first phase of this process takes place in design space, beginning with transistor layout and culminating in full product simulation. The second phase, post-silicon validation, is initiated by a request to Manufacturing to build a number of hardware prototypes. Once Manufacturing delivers these prototypes, the Engineering group can begin testing. This usually results in bug detection and design repair, followed by a second request to Manufacturing for prototypes of the improved design. Two cycles of prototype testing, bug detection and design repair are usually enough to initiate high-volume production of the new product. Especially complex products or those containing new technology may require more than two cycles.

The Manufacturing and Product Engineering groups are thus mutually dependent. capacity allocated by Manufacturing to prototypes for the Product Engineering groups consumes capacity that could be used for revenue-generating products, reducing short-term revenue. On the other hand, if the development of new products is delayed by lack of access to capacity for prototype fabrication, new products will not complete development on time, leaving the firm without saleable products and vulnerable to competition.

Project Description

We seek the development of an educational computer game where students assume the roles of MFG or ENG managers to make resource allocation decisions. The initial module of the game would focus on a single MFG and ENG units. Resource allocation decisions will be made manually, giving the players of the game a feel for the unanticipated effects of seemingly obvious decisions.

The game will have one MFG player, and can have multiple ENG players, with each player trying to maximize their own objective function. We shall assume for sake of exposition one player of each type, and a given number of time periods T in which each player must make its resource allocation decisions.

Lots of variations are possible, and we would like to leave the maximum flexibility to enhance the game by adding more sophisticated procedures. Our NSF research is looking at auction procedures that use resource prices obtained from approximate solutions to optimization models by each player - a price-based coordination solution. So, we would ideally like to be able to use this game engine to simulate the solutions we get from our auction procedures and compare them to the solutions obtained by players making decisions manually.

Technologies and other constraints

We have had two previous groups working on this, and they have both elected to use web-based platforms which is fine with us; we would prefer the current group build on what the previous groups have done so the base product can be enhanced and developed to add new functionality, especially unpredictable events such as unforeseen development or manufacturing problems.

Web-based to build on previous work, and provide maximum flexibility to users.

About Katabasis

Katabasis, Inc. is a non-profit organization that specializes in developing educational software for children ages 8-15. Our mission is to facilitate learning, inspire curiosity, and catalyze growth in every member of our community by building a digital learning ecosystem that adapts to the individual, fosters collaboration, and cultivates a mindset of growth and reflection.

Background and Problem Statement

The modern education system trains us to break down problems into small pieces and form cause and effect relationships. If we rely exclusively on this approach we risk losing the insights from considering the whole system (an approach called systems thinking). The focus of system thinking is that “the whole is greater than the sum of its parts” and that a system’s structure can provide additional insights about its behavior over time.

Katabasis would like to introduce middle school-aged students to concepts in systems thinking via an educational game that simulates the spread of an infectious disease through a population. By exploring a topic that’s relevant to the students' lives, we hope to increase engagement with the material and promote healthy behaviors.

Last semester, an initial version of this game was created using the Unity game engine. It includes a basic city layout, a government policy system, and intricate citizen behaviour (responding to personal needs and deciding on tasks). While these systems have laid the foundation of the game, it is still missing a few core features we would like to see added this semester by Senior Design. In particular, we would like to see logging of player actions and the game state, and the ability for players and potential modders to create their own city layouts. Additionally, we would like to have the ability to generate new levels in the game using procedural content generation, giving students more cities to reflect over and deepen their understanding of the complex systems at play.

The game, though it deals with infectious diseases, is most similar to turn based and real-time strategy games that have a heavy emphasis on managing large groups of people. The Civilization game series and the Tycoon game series are well-known series that have similar mechanics. Building off of work completed by the fall 2020 Senior Design team, this project will be focused on the refinement of the existing game and the addition of a logging system, a city builder function, and procedural generation of levels.

This product will be serving many audiences, in particular, middle school students and education researchers. For the middle schoolers, it will provide them with an engaging learning opportunity and promote healthy behaviors. They will be able to use trial and error to experiment with the learning concepts and see for themselves what does and does not slow the spread of the disease. This will help them understand all the factors that create the complexities of the system of public health, and apply it to their lives.

For education researchers, this system will serve as a platform to create levels which can help them verify hypotheses which could be answered using this simulation. One such hypothesis could be that students will use personal protective equipment (PPE, like masks) more readily in their lives if they first see the effects of quarantining and then do levels that allow less drastic protective measures (like using PPE).

Project Description

This semester, the project team will focus on creating an action logging system and a city building feature to allow for students and academic researchers to create custom scenarios within the game. The action logging system would keep track of all the actions players take during the course of the game, to better facilitate the learning process after the fact, as well as provide some useful post-play data to the player and any other interested parties (teacher, researcher, etc.). The city building feature will allow users to add or remove various buildings on an isometric grid.

To enhance the replayability of the game, the Senior Design team will also be asked to create procedurally generated cities so that middle schoolers have virtually unlimited environments to test their containment strategies. It would also be desirable that new levels attempt to elicit new strategies from the students, to help students experiment with new policies and think more deeply about how different cities necessitate different approaches. We would want this to be the last feature worked on (after logging and city building) to make sure that the project stays within scope and time constraints.

User Experience Focus. We would like students on this team to conduct two think-aloud sessions online with middle school students. Katabasis will supply the middle school testers. The first think-aloud session would ideally be the midpoint of the course (upon completion of the logging and city building features), with the second think-aloud session happening towards the end of the course (once procedural content generation has been implemented). The think-aloud session is intended to solicit player feedback that will be used to inform improvements to the game, UI, and generate ideas for policies and buildings. We value this feedback very highly, and as such would like to see that the team takes it into account in their development of the game. Senior Design students will work with Katabasis team members to select additional features to incorporate over the second half of the project, in response to personal impressions and feedback from the sessions. The second user feedback event will be held at the end of the project (same format as first event) and the Senior Design team will include, as part of their final deliverables, documentation of suggested next steps in the development of the game.

Accuracy to real life data is not the goal but high level trends over time should be representative of what infectious diseases could do in the simulated environment. Our goal is to keep it similar enough to real-life to be identifiable and relatable, but dissimilar enough when needed to facilitate the gameplay or learning experience. Katabasis welcomes any input from the Senior Design students in the development of educational content, user feedback surveys, user agreement forms, etc. but to keep the project within scope we will head up this work ourselves.

Technologies and other constraints

The game is implemented using the Unity Game Engine in C#. The target platform is the web browser (using Unity’s WebGL Export), but would like to make it mobile-accessible if time permits. We will likely be asking our team to conform to a premade testing environment and to develop some robust tests for the new features they add.

Upon the completion of the project, the system will be hosted on Katabasis.org, and accessible to middle school students and education researchers. The associated Senior Design students' efforts will be acknowledged in the game credits, if desired.

STUDENTS WILL BE REQUIRED TO SIGN OVER IP TO SPONSOR WHEN THE TEAM IS FORMED.

LexisNexis® InterAction® is a flexible and uniquely designed CRM platform that drives business development, marketing, and increased client satisfaction for legal and professional services firms. InterAction provides features and functionality that dramatically improve the tracking and mapping of the firm’s key relationships – who knows whom, areas of expertise, up-to-date case work and litigation – and makes this information actionable through marketing automation, opportunity management, client meeting and activity management, matter and engagement tracking, referral management, and relationship-based business development.

One of the main value proposition of Customer Relationship Management (CRM) systems is giving insights into relationships a company has with its customers. Often, when employees are looking to make new inroads with a customer, they need to know anyone at their company that knows anyone at the customer's company. There are also other constraints in play, like looking for the strongest relationship or relationships to specific personnel. If no one is known at the customer's company then the query may expand to include alumni of the customer who could make introductions.

InterAction is currently built on a relational database which is not well suited to queries that more akin to graph queries in nature. As such, we are looking to investigate embedding a graph database (or something similar) into one of our components. We would vet the feasibility by testing the performance of queries, such as the who knows whom query mentioned above.

About Outer Banks Blue Realty Services

Outer Banks Blue is a vacation rental management company representing approximately 300 properties located on the northern outer banks of North Carolina that has been in business since 2005. Sandbridge Blue, established in 2010, manages the rentals for approximately 80 properties in the Sandbridge, Virginia area.

Background and Problem Statement

In 2018, a laundry operation was started in Elizabeth City, NC to wash the sheets and towels that are provided with each rental property. The laundry plant puts together packout bags, which contain clean sets of sheets and towels customized for each property’s needs, to be delivered to each property every time there is a departure. Each bag typically contains a mix of sheet sets and towels such that the weight of a single bag is no more than 40 lbs. Properties may require anywhere from 2 to 8 bags depending on the size of the property. Different towel types included are bath towels, hand towels, bathmats, and washcloths.

Packout bags are put together at the laundry plant based on the information provided by each of the delivery locations (Sandbridge and Kitty Hawk) in sticker sheets. Employees in the laundry plant print out these stickers (Avery 5160 size) to label each packout bag indicating its contents and the property to which it should be delivered. During the summer, one delivery day may involve 400+ packout bags. To organize these, a set of tags is created to label laundry bins (5x7 cardstock), where bags are grouped by cleaner (cleaning crews that have less than 10 bags are grouped together). The laundry bin stickers include information about all the properties that packout bags in it are for.

Currently, sticker sheets are created weekly through a manual and error-prone process that involves pulling information from several places (spreadsheets, our reservation system, emails, and others) into a Word document. If any last minute arrivals are scheduled, an email or text is sent to the laundry facility and stickers are manually made up. Another large opportunity for error comes by property owners changing their bedding configuration (for example, changing out a queen bed for bunk beds) and sticker sheets not being updated accordingly. When mistakes are made, packout bags may be delivered with the wrong contents, causing delays and extra work for our employees.

In addition, if any ancillary materials are needed (welcome bags, paper towels, soap, etc.) the individual delivery locations (Sandbridge and Kitty Hawk) would request these be added to packout bag delivery via email to the laundry plant, which makes it hard to track inventory and do proper billing.

Project Description

We have a property management system that we use to manage reservations. For this project, we would like to create a new and separate system for personnel of the laundry operation and the housekeeping teams in Kitty Hawk and Sandbridge to manage the creation of packout bags and their corresponding bag and bin labels. This includes the ability to configure the items in packouts (sheet sets and towels) for each property as well as the list of ancillary items. The system should allow employees of the laundry operation to print out labels for bags and bins.

To support this process, the system should also keep track of the properties at each location and their needs (types of sheets and towels used). More specifically, we are requesting the following features:

- Support for multiple locations (Outer Banks and Sandbridge) containing different properties

- Support for different types of users: overall administrators, employees of the laundry plant, and employees of the housekeeping teams at each location. This includes having different levels of access to the features described here.

- Ability for housekeeping staff to order packout bags on a particular delivery date for a property

- Ability to update properties by importing a file out of our property management system and/or manually.

- Ability to configure a property to specify the number of floors/levels and sheet and towel requirements for each floor in the property.

- Ability to add on extra items (Bath Towels, Hand Towels, or Pool Towels) to individual properties on a specific delivery without altering the configured sets of items for that property.

- Ability for the locations to order ancillary items (with quantities).

- Ability to configure the types of sheets and towels available from the laundry facility, including the expected weight of each item.

- Ability for the laundry facility to keep track of an inventory of ancillary items available

- Ability to assign a local cleaner to a property

- Ability to produce the packout and bin stickers into an easily understood format (Avery labels 5160) grouped by cleaner assigned. Target weight of each bag is 30 lbs, so if a property’s configuration exceeds this weight multiple bags should be created. Separate bags, and their corresponding stickers, should be created for different floors on a property.

- Email notification/confirmation to appropriate personnel (with the ability to change who those are) when ancillary items are ordered for a property.

Although this should be a standalone system, we invite students to explore the APIs exposed by our property management system to see if there are opportunities for automation, such as triggering an update of a property when it is modified in the property management system, or placing an order for a packout on a property based on a reservation being made.

Technologies and other constraints

The system should be web-based so that it can be accessible from multiple locations simultaneously with a centralized database.

We are flexible on the specific technology stack used for this project as long as it’s compatible with our infrastructure.

STUDENTS WILL BE REQUIRED TO SIGN OVER IP TO SPONSOR WHEN THE TEAM IS FORMED.

About PRA Health Sciences

PRA Health Sciences provides innovative drug development solutions across all phases and therapeutic areas. PRA Health Sciences Cybersecurity Team is a small group of professionals that share a passion for the topic. Our team is excited to cooperate with NCSU students on the fourth project to further develop the RePlay Honeypots.

Background and Problem Statement

Honeypots are systems that behave like production systems but have less attack surface, less resource requirements, and are designed to capture information about how potential attackers interact with it. Their intent is to trick an adversary with network access to believe that they are a real system with potentially valuable information on it. The adversary’s effort to break into the honeypot can reveal a lot of information about the adversary and enable defenders to detect and gain an advantage on this threat, so the honeypot will log the attacker’s behavior.

Previous Senior Design teams have developed Replay Honeypots--a low-interaction production honeypot system that can be configured and deployed easily, remaining cost effective in large numbers. The system is architected around three main components: 1) a central management system that provides a way to monitor and control honeypots and serves as a central repository of data collected by each honeypot it controls; 2) a series of lightweight, secured honeypot that supports various methods of deployment and will simulate network behavior of other systems; 3) a configuration generation tool that will ingest packet capture (PCAP) and Nmap scan outputs, and generates configuration files that honeypots use to determine how they should behave. The Project has recently been released as an Open Source Project on GitHub and the team will actively contribute.

While this system has come a long way, there are still some areas for improvement:

- The current implementation of the Config Generations Tool requires the user to copy the output JSON and paste it into a frontend page. This process would be more streamlined if tools could connect and insert into a database directly.

- The current implementation of the honeypots gets a request, checks it against the request in the configuration and determines if it passes the similarity threshold, and if it does, it then responds with a predetermined byte sequence from the config. However, some packets might contain information such as timestamps or random values that would give the honeypot away on closer inspection.

- The current system doesn’t have the ability to mimic Telnet, SSH, SMTP and RDP

Project Description

For this project, we would like the following enhancements to be implemented.

Central Management System

Here is where users create, view/monitor, and modify honeypot configurations. In Phase 4 the following features are requested:

Device Management / Monitoring:

- Remediate Vulnerability: A recent Server-Side Request Forgery vulnerability was released regarding the Axios package (CVE-2020-28168). In order to remediate this vulnerability, the system shall upgrade the axios package to a version >= 0.21.1.

- Device Grouping: The system shall allow for the “summary & details” views to only present data from a given device group

- Health Metrics: The system shall allow for displaying supported health metrics on the frontend for each device & device groups.

Honeypots

The initial configuration of a honeypot is based on an Nmap scan of another system that the honeypot must attempt to replicate. With this input, the honeypot configures its simulated services so that an Nmap scan to the honeypot produces no detectable differences from the original system, while having as little attack surfaces as possible. In Phase 4 we would like to make our honeypots more believable as follows:

- The system shall provide functionalities to mimic popular communications protocols if enabled in the configuration (Telnet, SSH, SMTP, RDP).

- The system shall have the ability to use a conventional device fingerprint to completely copy the network/interface of a target

- The system shall provide the ability to use the captured data to modify the way the device builds packets, calculate fields in headers, and responds to specific traffic.

- Fix any issues/bugs that are found

- STRETCH GOAL: The system shall provide full SSH honeypot functionality

- The system shall provide the ability to harvest credentials

- The new functionality shall integrate with current Replay functionality

- The system shall fake SSH and telnet connection to another system

- The system shall log sessions and store in a UML compatible format for easy replay

- The system shall provide SFTP and SCP support for file upload

- The system shall save files downloaded with wget/curl or uploaded with SFTP and SCP for later inspection

- The system should allow for JSON logging

- The system may use cowrie as a basis

- STRETCH GOAL: The system shall have the ability to capture malware for future analysis

- The system shall trap malware exploiting vulnerabilities in a format that the malware cannot execute

Config Generation Tool

The Config Generation Tool is a standalone tool that will ingest packet capture of a system that has undergone a Nmap scan and the respective scan results and generate a configuration file for the Honeypots that can be imported. In Phase 4 the following features are requested:

- The config generation tool shall connect to and insert configs into the database directly, rather than the user having to copy the output JSON and paste it into a frontend.

- The system shall provide the ability to mimic operating systems and networking equipment using the newly requested fingerprint functionality

Technologies and other constraints

Python3, NodeJS and VueJS are preferred development languages, the TRIO library is recommended for multi-threading. Docker is currently used to easily manage and deploy honeypots and will remain the preferred solution for this phase. Nevertheless, all functionality of the Honeypot itself needs to be supported and tested on Linux without Dockers. CouchDB is the currently supported Database. Technology pivots are possible but need discussion with the Sponsors.

Each component of the system will be designed with large scale deployments (>100) in mind. Students should use best practices for the development of secure software and document in great detail. Students should also strive to design honeypots to be modular so that additional protocols can be introduced, and additional functionality can be created, e.g. RDP, SSH, SMB Honeypots, Honey Websites and active beaconing. This Project is published on GitHub and the Students will be required to follow GitHub processes and best practices.

Students working on this project will be most successful if they have a strong understanding of network principles such as the OSI Model. Prior successful participation in “CSC 405 – Computer Security” and “CSC 474 – Network Security” is recommended for this project. Previous experience with Nmap and OS configuration is an advantage.

STUDENTS WILL BE REQUIRED TO SIGN OVER IP TO SPONSOR WHEN THE TEAM IS FORMED.

Background and Problem Statement

PuzzleScript is both a language and an open-source game engine to create 2-D puzzle games. A single PuzzleScript file defines a whole game, including assets, rules, and levels. PuzzleScript games are written and saved in a browser-based editor (https://www.puzzlescript.net/editor.html) that includes features such as syntax highlighting, saving and loading from libraries, running the game, a level editor, a sound library, an output debug console, and the ability to export the current game as a standalone html file.

Although the PuzzleScript editor works well enough, it lacks more advanced editing features that are now standard in modern code editors like Visual Studio Code, such as code completion, version control integration, find and replace, and the ability to open/save local files.

Project Description

In this project you will create a PuzzleScript extension for Visual Studio Code that should provide and enhance most of the features of the web-based editor. Leveraging the existing PuzzleScript runtime is welcome. At a minimum, this extension should support:

- Creating new PuzzleScript files with a blank/template structure. This is similar to what the web editor does when creating a new game.

- Syntax highlighting. The extension “.pzls” is suggested for PuzzleScript files. You can get inspiration from the current color scheme in the PuzzleScript editor, but creating a new color scheme is okay, especially to support dark/light modes and other VS Code themes.

- Code hinting and completion

- Game preview (similar to how VS Code does Markdown preview)

- Level editor. As the web editor does, placing the cursor on a level and opening the editor will edit that level.

- PuzzleScript console output

- Exporting a game as a standalone HTML

Many of these features are already provided and implemented in the PuzzleScript codebase and will need just integration into an extension. Although this normally works by default, you should verify that these features work well with VS Code’s standard functionality, such as Git integration and common extensions such as Live Share.