Projects – Spring 2012


Device Outage Aggregator

Overview

AT&T Business Solutions manages large and small customer networks. Customers purchase many different services based on their needs, pricing, and availability. Some customers outsource their entire IT networks to AT&T, while others outsource only portions of the network, such as VoIP management or server management.

AT&T has a wide catalog of potential services to sell to customers to meet these needs. Each service may take advantage of different tools and systems to satisfy customer requirements. Depending on the choice of services a customer requires and how these are managed, AT&T’s network management tools might not perform as needed or desired.

The goal of this project is to glue together the network management tools for customers who have bought multiple services from AT&T. This will improve customer satisfaction and will help AT&T operations teams with day-to-day support of our customers’ networks.

Technical Details

AT&T uses SMARTS In-Charge for much of our network management tooling. This is an excellent tool for customers who are supported from a single management server. Unfortunately, when customers buy services that must be managed from multiple servers, we lose network correlation. Correlation means that the tool knows a customer’s network topology and can therefore determine the root cause of an outage. For example, suppose a customer loses power at a site where AT&T manages five network devices. Because none of the five devices would be operational during the power outage, AT&T needs only a SINGLE alert about the outage, with a list of the devices impacted. This works perfectly when all of a customer’s devices are managed by a single server, but AT&T may receive multiple alerts when they are not.

The goal of this project is to build Layer 2 and Layer 3 topology correlation tables for devices on multiple servers. AT&T will provide sample data from multiple customers for this project. The data will include interfaces with IP addresses and subnet masks, routing tables, MAC address tables, and ARP tables. Additional data may also be needed and will be provided.

The NC State team will need to design Layer 2 and Layer 3 correlation algorithms to build a database of connections between the devices. The team will use this database to aggregate multiple outage alerts into a single alert for all affected devices.
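As a rough illustration of the Layer 3 half of this idea, the sketch below merges interface records from several management servers, treats two devices as neighbors when they have interfaces in the same IP subnet, and then collapses alerts for connected down devices into one alert per group. It is a minimal sketch under stated assumptions: the function names and sample devices are hypothetical, only shared subnets are used (no routing, MAC, or ARP tables), and no root-cause selection is attempted.

```python
import ipaddress
from collections import defaultdict


def build_l3_links(interfaces):
    """Build a Layer 3 adjacency map from (device, "ip/mask") interface records.

    Two devices are treated as neighbors when they have interfaces in the same
    IP subnet. Records may come from any number of management servers.
    """
    by_subnet = defaultdict(set)
    for device, address in interfaces:
        # ip_interface accepts prefix ("/24") or dotted-netmask ("/255.255.255.0") notation
        subnet = ipaddress.ip_interface(address).network
        by_subnet[subnet].add(device)

    links = defaultdict(set)
    for members in by_subnet.values():
        for device in members:
            links[device] |= members - {device}
    return links


def aggregate_alerts(down_devices, links):
    """Collapse alerts for connected down devices into one alert per group.

    Returns a list of sorted device lists; each list stands for a single alert.
    """
    down = set(down_devices)
    seen = set()
    alerts = []
    for start in sorted(down):
        if start in seen:
            continue
        seen.add(start)
        group, stack = [], [start]
        while stack:  # depth-first walk restricted to devices that are down
            device = stack.pop()
            group.append(device)
            for neighbor in links.get(device, ()):
                if neighbor in down and neighbor not in seen:
                    seen.add(neighbor)
                    stack.append(neighbor)
        alerts.append(sorted(group))
    return alerts


# Hypothetical sample: five devices at one site, split across two
# management servers, plus one unrelated router at another site.
server_a = [("rtr1", "10.1.0.1/255.255.255.0"), ("sw1", "10.1.0.2/255.255.255.0"),
            ("sw2", "10.1.0.3/24")]
server_b = [("ap1", "10.1.0.4/24"), ("fw1", "10.1.0.5/24"), ("rtr2", "10.2.0.1/24")]
links = build_l3_links(server_a + server_b)
print(aggregate_alerts(["rtr1", "sw1", "sw2", "ap1", "fw1", "rtr2"], links))
# [['ap1', 'fw1', 'rtr1', 'sw1', 'sw2'], ['rtr2']]
```

The power-outage example then yields one alert for the five-device site even though its devices live on two servers. A real design would add Layer 2 adjacency from MAC and ARP tables and would need to handle WAN transit subnets, which this sketch would treat as ordinary shared subnets.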

Programming Languages and Software

AT&T uses Perl as its primary programming language for this type of software development. Our software runs on Red Hat Linux, and we use MySQL for all databases.

Customer Value Geographic Visualization Tool

Students will work together to build a multi-tier service that provides a customer ZIP code analysis and visualization tool. Bronto has geolocation information on many customers. Using Hadoop, students will apply map-reduce algorithms to determine which customers reside within a given distance of a ZIP code or set of ZIP codes. The resulting customer sets will be returned to a web application to be listed or viewed on a map. Additionally, where customer conversion (value/spending) data are available, those data will be used to visualize ZIP codes as a relative heatmap depicting both the size of the customer base and its relative value.
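The map-reduce flow described above can be sketched in plain Python: the map phase emits a record for every query ZIP code whose centroid lies within the radius of a customer (using the haversine great-circle distance), a shuffle groups records by ZIP code, and the reduce phase produces the customer list, count, and total value that a heatmap would need. This is a single-process sketch, not Hadoop or Pig code; the function names, record layout, and the rough coordinates used as ZIP centroids are illustrative assumptions, not official centroid data.

```python
import math
from collections import defaultdict
from itertools import chain

EARTH_RADIUS_MILES = 3958.8


def haversine_miles(lat1, lon1, lat2, lon2):
    """Great-circle distance in miles between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(a))


def map_customer(customer, centroids, radius_miles):
    """Map phase: emit (zip_code, (customer_id, value)) for each nearby query ZIP."""
    customer_id, lat, lon, value = customer
    for zip_code, (clat, clon) in centroids.items():
        if haversine_miles(lat, lon, clat, clon) <= radius_miles:
            yield zip_code, (customer_id, value)


def reduce_zip(zip_code, records):
    """Reduce phase: summarize all customers emitted for one query ZIP."""
    ids = sorted(customer_id for customer_id, _ in records)
    total = sum(value for _, value in records)
    return zip_code, {"customers": ids, "count": len(ids), "total_value": total}


def run_job(customers, centroids, radius_miles):
    grouped = defaultdict(list)  # shuffle: group mapper output by key
    mapped = chain.from_iterable(map_customer(c, centroids, radius_miles) for c in customers)
    for zip_code, record in mapped:
        grouped[zip_code].append(record)
    return dict(reduce_zip(z, records) for z, records in grouped.items())


# Rough, illustrative coordinates only; a real job would load a centroid table.
zip_centroids = {"27601": (35.78, -78.64), "27701": (35.99, -78.90)}
sample_customers = [  # (customer_id, latitude, longitude, conversion value)
    ("c1", 35.78, -78.64, 100.0),
    ("c2", 35.99, -78.90, 50.0),
    ("c3", 35.79, -78.65, 25.0),
]
print(run_job(sample_customers, zip_centroids, radius_miles=5.0))
```

In Hadoop the mappers and reducers would run on many nodes, but the key design choice carries over: keying intermediate records by ZIP code lets the framework do the grouping. For the heatmap, the same job could instead key on each customer's own ZIP code.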

Technologies

Software, programming languages and constructs will likely include Hadoop, Pig, Java, JSON, a REST service, PHP, Zend, MySQL, HTML, CSS, and jQuery/JavaScript.

Company Background

Bronto Software provides the leading marketing platform for online and multi-channel retailers to drive revenue through email, mobile and social campaigns. Over 1000 organizations including Party City, Etsy, Gander Mountain, Dean & Deluca, and Trek Bikes rely on Bronto to increase revenue through interactive marketing.

Bronto has recently won several awards:

- NCTA 21 Award for Software Company of the Year 2011

- Stevie Award for Best Customer Service Department in 2009 and 2010

- CODIE Finalist for Best Marketing Software in 2011

- Best Place to Work by Triangle Business Journal in 2010 and 2011

- CED Companies to Watch in 2010

In 2002, Bronto was co-founded by Joe Colopy and Chaz Felix out of Joe's house in Durham, North Carolina. Since its humble beginnings, Bronto has emerged as a leader with a robust yet intuitive marketing platform for commerce-driven marketers.

Bronto's long-term focus on its customers, products, and employees is now producing accelerated growth: its 60% growth in 2010 contributed to its being listed as one of Inc. Magazine's Top 100 fastest-growing software companies.

Using a NoSQL/DHT-based platform for an OpenFlow/SDN Controller

Software Defined Networking (SDN) replaces the per-device intelligence of today's geographically diverse networks with a central, or at least apparently central, controller. Once flows have been set up, data forwarding is still carried out autonomously by the individual devices, but defining those paths and implementing that policy on each device in the data path becomes the responsibility of the central control function.

As such, the role of the controller is to maintain a database of endpoints, services, and capabilities, and to select the correct flow path. It is therefore essential that flows are inserted both accurately and promptly, so that the operation of the controller has no appreciable impact on flow setup time. This becomes especially difficult when the flow is set up over a large geographical distance, across networks of varying quality, or between different spheres of control, such as across two or more service providers.

Traditional database methods, such as SQL with replication and publisher/subscriber contexts, all struggle to maintain consistency over the WAN. They also make federations of information repositories difficult to achieve, because they require a tight relationship between the datastores. A NoSQL approach seeks to alleviate these issues by using a Distributed Hash Table (DHT).

The overall goal of this project is to investigate alternative approaches to the distribution and management of data in an OpenFlow/SDN controller by using a “NoSQL” approach. This would replace the SQL-based table system in an open-source OF/SDN controller, such as Floodlight from Big Switch Networks, with a DHT-based NoSQL database, such as Apache Cassandra or similar. Once this is accomplished, the impact on both data-plane forwarding setup delay and WAN utilization for database updates/control-plane traffic can be investigated. Programmability of the network from a single "centralized" controller is the goal of SDN. None of the current controller implementations, either closed or open-source, use NoSQL, and only two have an extensible SQL framework.
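To make the DHT idea concrete, the sketch below shows consistent hashing, the placement scheme many DHTs build on: controller instances are hashed onto a ring at several points, each key (for example, a flow entry) belongs to the first instance clockwise from the key's hash, and copies go to the next distinct instances. It is a minimal sketch, not Cassandra's API (although Cassandra's data placement is built on a similar token ring); the class name, controller names, and key format are hypothetical.

```python
import bisect
import hashlib


class ConsistentHashRing:
    """Minimal consistent-hashing ring with virtual nodes and replication."""

    def __init__(self, nodes, vnodes=64, replicas=2):
        self.vnodes = vnodes
        self.replicas = replicas
        self._ring = []  # sorted list of (hash, node) points
        for node in nodes:
            self.add_node(node)

    @staticmethod
    def _hash(key):
        # First 8 bytes of MD5 as an integer; any uniform hash would do.
        return int.from_bytes(hashlib.md5(key.encode("utf-8")).digest()[:8], "big")

    def add_node(self, node):
        """Place `vnodes` points for `node` on the ring."""
        for i in range(self.vnodes):
            bisect.insort(self._ring, (self._hash(f"{node}#{i}"), node))

    def nodes_for(self, key):
        """Return the nodes responsible for `key`, primary owner first."""
        if not self._ring:
            return []
        distinct = len({node for _, node in self._ring})
        wanted = min(self.replicas, distinct)
        # (h,) sorts before every (h, node), so this is the first point with hash >= h
        index = bisect.bisect_left(self._ring, (self._hash(key),))
        owners = []
        while len(owners) < wanted:
            _, node = self._ring[index % len(self._ring)]  # wrap around the ring
            if node not in owners:
                owners.append(node)
            index += 1
        return owners


ring = ConsistentHashRing(["ctrl-raleigh", "ctrl-london", "ctrl-tokyo"])
print(ring.nodes_for("flow:00:1b:21:3a:4f:10->10.0.0.7"))
```

The property that matters for a federated controller is that adding an instance moves only the keys the new instance takes over; every other key keeps its owner, so no global resynchronization of the datastore is needed.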

To investigate how the underlying database technology will impact overall network performance, specific goals are:

- Goal 0: Research the basic concepts of SDN, SQL vs. NoSQL, and related open source software, and study and understand the experimental setup.

- Goal 1: Define a set of potential bottlenecks (or interesting data paths) for comparison.

- Goal 2: Derive baseline performance information by running SQL-based software on a given "workload."

- Goal 3: Run NoSQL-based software and derive comparison data.

Time permitting, the project should also consider and study the implications of multiple, federated zones-of-control such as would occur between two independent service providers. The implementation should ideally be carried out on a Cisco Nexus 1010 and take advantage of features in that platform that link the network availability to the underlying network.

Deliverables:

- Step-by-step documentation of setup and experiments, enabling continuation of future related projects.

- Research report on performance results.

- Open source software modifications.

Mobile Stock Trading & 3-D Virtualization: Game On!

Getting folks to come back for more and more is the name of the game! An NCSU Senior Design team worked hard last semester, successfully developing a stock trading game. Now it’s your objective to take the game to a new level, one that users can’t resist!

Last Semester’s Assignment

Students created a game that simulates stock trading. Players can create and maintain mock stock portfolios that perform according to real-world conditions, buy and sell stocks, and earn achievement points throughout the game. NCSU students successfully completed this project, creating an engaging game with a lot of potential for enhancement.

This Semester’s Assignment

We are looking to YOU to take the game to a new level, a level that makes players want to continue playing, over and over! This goal will be advanced by implementing two features:

- Develop a Mobile Application: Students are asked to develop a mobile component for this application, which would allow a user to manage his or her portfolio on-the-go. From their mobile devices they should be able to review stock information and buy or sell stock on the game application (Android development is suggested).

- Mobile 3D Visualization of Data: In the current application, a player’s portfolio is illustrated through a 2D visualization. Students are required to make the application more visually interesting by developing or integrating a 3D model visualization of the player’s portfolio data.

Usability testing should be built into the development plan.

NCSU Student Experience

Senior Design students will have access to top industry professionals who can assist with design principles, Agile/Scrum practices, overall development and coding, and testing.

Game development experience is a plus.

Outage Reporting Mobile App

With the proliferation of smartphones, Duke Energy has increasingly relied on iPhone and Android devices to communicate with our customers. This communication is not one-way, particularly during power outages. Customers need the ability to quickly report outages, and Duke Energy must provide timely status updates. The student team will create applications for both the iPhone and Android that allow customers to report outages to Duke Energy and receive updates from Duke Energy on outage status. The GUI should be simple and user-friendly. Customers should be able to report outages with one click. Students are encouraged to be creative with technology and functionality. The ultimate goal is to create an application that is both effective and widely downloaded.

This project requires signing an Intellectual Property Agreement.

Metarena

High Level Description

Our Senior Design project for the Spring 2012 semester will develop an application called “Metarena.” During the semester, we will attempt to implement an application and a website for playing a territorial-control game (similar to Risk) based on each player's progress in a popular video game. We are currently considering one of the following games: StarCraft II: Wings of Liberty, Team Fortress 2, or League of Legends. While the full project scope covers multiple video games, we expect to integrate only one video game by the end of the semester, with an emphasis on making the application easily extensible to other popular video games.

Problem

Our project gives players who play multiple games on multiple platforms a consistent metagame objective while playing certain video games. Unlike the popular achievement systems, this project will provide a more nuanced metagame between friends and strangers. Today's ranking systems carry risk and reward only for the participating team or player; our system will make every win and loss significant to a community of players. Previous games, such as MechAssault 2, have integrated a game style similar to Metarena's, and that style greatly enhanced the multiplayer experience. We aim to achieve the same benefits, not by creating our own game, but by extending the multiplayer experience of games people already love to play.

Backup Load Generation & Analysis

Background

Data Domain is the brand name of a line of disk backup products from EMC. Data Domain systems provide fast, reliable and space efficient on-line backup of files, file systems and databases ranging in size up to terabytes of data. These products provide network based access for saving, replicating and restoring data via a variety of network protocols (CIFS, NFS, OST). Using advanced compression and data de-duplication technology, gigabytes of data can be backed up to disk in just a few minutes and reduced in size by a factor of ten to thirty or more.

In our RTP Software Development Center we develop a wide range of software for performing backups to Data Domain systems including application libraries used by application backup software to perform complete, partial, and incremental backups.

With ever-larger amounts of data to back up in ever-shrinking backup windows, performance, and especially the scalability of performance, is critical. Combined with the increasing number of processor cores in modern hardware, this presents a challenging environment for maximizing overall system performance and throughput. This project will develop a backup load generator that simulates a backup application program and will use it to measure and characterize system and library software performance in multiple operational modes.

Project Scope

We want to develop an application level load generating tool that will simulate a backup application by “saving” (writing) data to a Data Domain backup system and/or “restoring” (reading) data previously saved on a backup system. The load generating application will run on a client-side system and use a set of provided interfaces to access the Data Domain system over a standard network connection.

We then want to use this load generating tool to measure and characterize the performance of some of the Data Domain application libraries. Of particular interest to us is how the performance of multiple single-threaded application processes compares to the performance of a single multi-threaded application process. We are also interested in seeing if there are system tuning operations that can improve the performance and scalability in either of these cases.

The project is proposed as two phases: creating the load generating tool and then using it.

Phase One

Produce a backup load generating application and usage scripts that:

- Allows specification of various load generation parameters such as number of threads/processes to run, type of backup operation (read, write, both), number and size of read/write operations.

- Creates and runs one or more independent threads (tasks or other execution entities within a single process) in parallel to carry out the various read and write operations.

- Generates detailed and summary performance statistics including a log of actions taken.

- Is platform independent (runs without modification on Windows and Linux).
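A skeleton of such a tool might look like the sketch below: it takes the number of threads, the operation type (read, write, or both), and the number and size of operations; runs the workers in parallel; logs every action; and returns summary statistics. Because the document does not describe the Data Domain library APIs, local file I/O in a temporary directory stands in for the save and restore calls, and all function names are hypothetical. This is a minimal sketch, not a measurement tool: reads will mostly hit the OS page cache, and CPython threads do not execute Python code in parallel (although file I/O releases the interpreter lock), so a C, C++, or Java implementation would be more representative for the thread-versus-process comparisons in Phase Two.

```python
import logging
import os
import statistics
import tempfile
import time
from concurrent.futures import ThreadPoolExecutor

log = logging.getLogger("loadgen")


def backup_worker(worker_id, operation, count, size, target_dir):
    """One execution entity: performs `count` rounds of write ("save") and/or
    read ("restore") operations of `size` bytes. Returns per-operation latencies."""
    path = os.path.join(target_dir, f"worker{worker_id}.dat")
    payload = os.urandom(size)
    if operation == "read":  # pre-populate so read-only runs have data to restore
        with open(path, "wb") as f:
            f.write(payload)
    latencies = []
    for i in range(count):
        if operation in ("write", "both"):
            start = time.perf_counter()
            with open(path, "wb") as f:
                f.write(payload)
            latencies.append(time.perf_counter() - start)
            log.info("worker=%d op=write seq=%d bytes=%d", worker_id, i, size)
        if operation in ("read", "both"):
            start = time.perf_counter()
            with open(path, "rb") as f:
                f.read()
            latencies.append(time.perf_counter() - start)
            log.info("worker=%d op=read seq=%d bytes=%d", worker_id, i, size)
    return latencies


def run_load(threads, operation, count, size):
    """Run `threads` workers in parallel and return summary statistics."""
    if operation not in ("read", "write", "both"):
        raise ValueError(f"unknown operation: {operation}")
    if threads < 1 or count < 1:
        raise ValueError("threads and count must be at least 1")
    with tempfile.TemporaryDirectory() as target_dir:
        wall_start = time.perf_counter()
        with ThreadPoolExecutor(max_workers=threads) as pool:
            futures = [pool.submit(backup_worker, w, operation, count, size, target_dir)
                       for w in range(threads)]
            latencies = [t for fut in futures for t in fut.result()]
        wall = time.perf_counter() - wall_start
    total_bytes = len(latencies) * size
    return {
        "operations": len(latencies),
        "bytes": total_bytes,
        "wall_seconds": wall,
        "mb_per_second": total_bytes / wall / 1e6 if wall > 0 else float("inf"),
        "mean_latency_s": statistics.mean(latencies),
        "max_latency_s": max(latencies),
    }


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(asctime)s %(threadName)s %(message)s")
    print(run_load(threads=4, operation="both", count=10, size=1 << 20))
```

Python's standard library runs unchanged on Windows and Linux, which covers the platform-independence requirement for the harness itself; the per-operation log lines provide the action log, and the returned dictionary provides the summary statistics.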

Phase Two

Phase Two is an open-ended performance measurement and analysis phase that will use the load generating program produced in Phase One to do one or more of the following. Which of these items are done will depend on the capabilities of the load generating program and on the time and resources available after Phase One is completed. Some of these may also involve other standard system or open source performance tools in addition to the load generating program.

- Determine if client system hardware resources are being used appropriately and as expected. For example, when running the load generator with four threads on client hardware with four processor cores, are all four threads running on the four cores simultaneously?

- Measure/characterize the client system performance when running a single load generating process with varying numbers of threads under constant load to determine client-side performance and scalability using multiple threads.

- Measure/characterize the client system when running a varying number of load generating processes each with a single thread under constant load to determine client-side performance and scalability using multiple processes.

- Measure/characterize the client system when running a varying number of load generating processes each with a varying number of threads under constant load to determine client-side performance and scalability using multiple multi-threaded processes.

- Do one or more of the above performance experiments running the application load generating software on different client platforms (such as Windows and Linux).

- Use the load generating application to determine if any system bottlenecks exist and whether any system tuning or parameter adjustment (such as priorities, etc.) can address any such problems.

Materials Provided

- A Data Domain hardware loaner system including all necessary system software for saving and restoring files via a backup application for the duration of the project.

- Documentation for administering the Data Domain system.

- A set of binary libraries with documented Application Programming Interfaces (APIs) that can be linked with application software that acts as a backup application by calling the provided APIs.

Benefits to NC State Students

This project provides an opportunity to attack a real-life problem covering the full engineering spectrum, from requirements gathering through research, design, and implementation, to usage and analysis. This project will provide opportunities for creativity and innovation. EMC will work closely with the team to provide guidance and give customer feedback as necessary to maintain project scope and size. The project will give team members exposure to commercial software development on state-of-the-art industry backup systems.

Benefits to EMC

As storage usage worldwide continues to grow exponentially, providing our customers with the features and performance they need to better protect and manage their data is critical. Ever-growing amounts of data and rising reliability demands complicate the work of development engineers in designing and implementing new features and in maintaining scalable performance in existing software. The proposed load generating tool, and the performance measurements based on it, will provide a basis for architectural and design decisions in current and future versions of Data Domain backup application software and system software.

Company Background

EMC Corporation is the world's leading developer and provider of information infrastructure technology and solutions. We help organizations of every size around the world keep their most essential digital information protected, secure, and continuously available.

We are among the 10 most valuable IT product companies in the world. We are driven to perform, to partner, to execute. We go about our jobs with a passion for delivering results that exceed our customers' expectations for quality, service, innovation, and interaction. We pride ourselves on doing what's right and on putting our customers' best interests first. We lead change and change to lead. We are devoted to advancing our people, customers, industry, and community. We say what we mean and do what we say. We are EMC, where information lives.

We help enterprises of all sizes manage their growing volumes of information—from creation to disposal—according to its changing value to the business through information lifecycle management (ILM) strategies. We combine our best-of-breed platforms, software, and services into high-value, low-risk information infrastructure solutions that help organizations maximize the value of their information assets, improve service levels, lower costs, react quickly to change, achieve compliance with regulations, protect information from loss and unauthorized access, and manage and automate more of their overall infrastructure. These solutions integrate networked storage technologies, storage systems, software, and services.

EMC's mission is to help organizations of all sizes get the most value from their information and their relationships with our company.

The Research Triangle Park Software Design Center is an EMC software design center. We develop world-class software that is used in our NAS, SAN, storage and backup management products.

EMC, where information lives.

Project Manager’s Dashboard

Fidelity Investments spends a significant amount of money each year on technology projects. With so many projects in flight, it is often difficult for executives and managers of the organization to know where they should put their effort. To help resolve this, Fidelity would like to create a web-based "Project Manager's Dashboard" which allows Fidelity to visualize the scope and health of projects under its portfolio. The solution to this issue must:

- Incorporate data from many disparate sources, including Rational Team Concert (Task/timeline data), Quality Center 10 (Code quality data), HP's Portfolio and Project Management (financial data), and Microsoft Project (task/timeline data).

- Allow users to configure the project they want tracked, such as selecting development methodology (Agile, Traditional), data sources to use, etc.

- Provide clickable/drillable representations of financial and project health data (such as horizon graphs, heatmaps, etc.) that help managers pinpoint areas needing attention.

Google Apps as an Enhanced Classroom Response System

NC State now provides Google Apps for Education to all students, faculty, and staff. This allows Google forms to be used as a classroom response system. Faculty can pose questions to students during class using Google forms. Students answer, and the instructor can review the answers to find out how well the students understand the material. Instructors can then adjust their lectures based on students’ understanding of recently covered topics.

As a classroom response system, Google forms are quite usable today. But further innovation would make them much more attractive to instructors and students. We are interested in the following enhancements to Google spreadsheets and forms:

- Determine correctness of students’ responses. This would include determining correctness of free-form responses that may include pieces of code.

- Update graphs generated by student responses in real time (add data points to graphs as students submit them; for example, see the exercise on creating a graph of sorting-algorithm run time).

- Support for automatic copying of forms between semesters. We want to retain previous semesters’ data without requiring a manual creation of forms for the following semester.

- Provide summary data of students’ responses to questions, so that instructors can tell “at a glance” the level of student understanding.

- Automatically create a summary of student usage information into a single spreadsheet. We need to know …

- which students filled out each form;

- which students gave correct answer(s) on each form;

- which students partnered with someone who filled out the form, and/or gave correct answers;

- a summary, for each student, of the number of forms filled out (and/or filled out correctly) on each class day.

- Allow students to view only their response(s) to a question (maybe in conjunction with the exercise solution) without showing any other responses. This should also be allowed if the student was not the person submitting the form.

- Allow students to view their current exercise grade (without showing any other student’s exercise grades).

- Package the apps into an easily distributed package that others can use.
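The per-student usage summary requested above is essentially a group-by over response rows. The project itself calls for Google Apps Script (JavaScript); the Python sketch below shows only the aggregation logic, assuming the response sheet has already been read into rows with a hypothetical layout (class day, form ID, submitter, optional partner, answer). Correctness here is a deliberately naive normalized string comparison; judging free-form answers that contain code would need something considerably stronger.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Response:
    """One row of a form's response sheet (hypothetical column layout)."""
    day: str                # class day, e.g. "2012-01-17"
    form_id: str
    submitter: str
    partner: Optional[str]  # student who worked with the submitter, if any
    answer: str


def normalize(text):
    """Ignore case and collapse whitespace; a deliberately simple stand-in for grading."""
    return " ".join(text.split()).lower()


def summarize(responses, answer_key):
    """Map (student, day) -> (forms filled out, forms answered correctly).

    Both the submitter and the partner get credit, and a form counts at most once
    per student per day even if it was submitted more than once.
    """
    filled = defaultdict(set)
    correct = defaultdict(set)
    for r in responses:
        expected = answer_key.get(r.form_id)
        is_correct = expected is not None and normalize(r.answer) == normalize(expected)
        for student in filter(None, (r.submitter, r.partner)):
            filled[(student, r.day)].add(r.form_id)
            if is_correct:
                correct[(student, r.day)].add(r.form_id)
    return {key: (len(forms), len(correct.get(key, ()))) for key, forms in sorted(filled.items())}


responses = [
    Response("2012-01-17", "f1", "alice", "bob", "O(n log n)"),
    Response("2012-01-17", "f2", "carol", None, "o(n)"),
    Response("2012-01-17", "f1", "carol", None, "O(n^2)"),
]
print(summarize(responses, {"f1": "O(n log n)", "f2": "O(n)"}))
```

Keying the result by (student, day) maps directly onto one spreadsheet row per student per class day, and crediting the partner covers the requirement to track students who worked with someone who submitted the form.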

The enhancements to Google should be implemented using Google Apps Script, a JavaScript-based language for automating tasks within Google products. Information about Google Apps Script may be found at http://code.google.com/googleapps/appsscript/. There are several tutorials and an API. The starting points in the API are Spreadsheet Services and Charts Services.

The project sponsors will be available to meet weekly with the project team and will be available for questions throughout the semester. Additionally, the project sponsors would be willing to evaluate prototypes early and often, even in their classes. If time allows, an empirical evaluation of the effectiveness of the system would be of interest.

Big Data Analytics

The growth of public domain data represents a unique opportunity and challenge to Business Analysts. The College of Management and College of Engineering have been working with several Business Units within IBM to develop new software and hardware platforms to perform analytics on large (100s of TB) data sets. Big Data Analytics is enabled by recent hardware and software innovations that are extremely important to many industries. Currently, “Watson-like” software installed on an x86 platform solves Business Analytics problems. Limitations on system performance, understanding data stores, and scalability of analytics present challenges that must be overcome. New Linux-optimized hardware platforms, Hadoop-based analytics, and natural language processing represent solutions to the challenges of Big Data; however, these solutions must be designed and tested in this business environment.

IBM has provided a powerful new cluster, a P system, for Big Data Analytics research. IBM is sponsoring this project in order to gain a better understanding of the operating environment required to meet the needs of the business community. Students will work closely with faculty members from the Colleges of Management and Engineering as well as with a senior researcher from IBM Research. The project will require independent thinking and problem solving while working with IBM’s most advanced business analytics platform.

The project will address the following:

- Comparison of operating performance of P system vs x86 platform

- Tuning of Hadoop based file and analytics software while removing processing roadblocks associated with middleware.

- Deploying business solution in a “cloud” environment; developing an understanding of user system requirements

- Working with business analysts to understand the system requirements of such a platform

Skill Sets Required:

- Experience with Linux, including scripting and system management.

- Proficiency in Java, preferably knowledge of Hadoop software.

- Ability to work independently and think creatively.

- Desire to understand cutting-edge technologies.

Project Management Portal

Background for Project

Maintaining customer relationships is critical to driving repeat and continued revenue streams in a service business. Not all projects go well, but such problems can be mitigated if the customer is kept apprised of project status. This student project will focus on developing a portal and web application that allow authenticated customers to track and monitor their I-Cubed projects, including key milestones and payment terms. This will improve the ability of both the customer and our internal management to monitor and track projects.

Project Description

Using data available from our existing systems (Salesforce, Peachtree, and Microsoft Project), the team will create project-specific portals that will allow the tracking of our projects for both internal and external users. We will provide the students access to Convertigo technology and access to our key project systems to develop an integrated dashboard application with a mobile extension that can be provided to our customers and internal management.

The student team will get experience in application integration, mobile application development, and user experience creation. I-Cubed will provide students with guidance from both I-Cubed internal experts and our existing customers. This is a great opportunity for students to learn a cutting-edge platform and gain experience delivering against customer requirements.

In successfully completing the project, the student team will:

- Develop customer specific portals

- Expose data from our CRM, PMP, and other critical systems on specific projects

- Create secure login and registration portals integrated with the CRM

- Develop engaging user experience

- Test and validate the solution on desktop and mobile platforms (iPhone, Android, BlackBerry)

The user interface will permit the customer to:

- Sign up for I-Cubed PMP Portal

- Download Application or Login to Webpage

- View

- Project Milestones

- Financial Terms – Payment status and Due Date

- Access to SOW (Download PDF)

- Project team – Link to contact information and support connect from application

- Push project status notes

- Select desired project if multiple projects are held by the same customer.

The user interface will permit an I-Cubed user to:

- Capture contact information from customer participants

- Push updates to customers

- Capture snapshot project details for executive review

Design Constraints

This project should be created with a reusable framework that can be quickly instantiated when a new project undergoes delivery. Significant attention should be focused on user experience to improve the adoption and usability of the technology.

Sponsor Background

Since 1984, I-Cubed has provided the people, products and processes to extend the value of customers’ enterprise systems. The company's subject matter expertise in Enterprise Applications, Product Lifecycle Management Consulting, Business Process Consulting and Rights Management provides a unique insight that translates customer needs into commercial products. I-Cubed's product and services portfolio helps customers accelerate the integration of enterprise systems and collaborate securely throughout the supply chain. I-Cubed has been sponsoring senior design projects for more than 10 years. I-Cubed’s office is conveniently located on NC State’s Centennial campus in Venture II.

Trust No One: Secure Communications in the Presence of an Untrusted OS

Background

Modern computing systems are built with a large Trusted Computing Base (TCB) including complex operating systems, applications, and hardware. The TCB provides security and protection services for both data and processes within a computer system. Because the TCB lies at the lowest layer of the software stack, compromising any portion of it compromises the security of the entire system, and sensitive information entered into the machine is no longer protected. Given the size and complexity of the TCB in modern computers, it is very difficult to protect the TCB adequately from attack and, ultimately, to protect the entire computer system.

Much of the world we live in today is enabled by computer systems, and those systems rely upon the TCB for protection of their critical processes and data. Any vulnerability found within the TCB might affect the entire system and anything that depends upon it. The impact of a vulnerability can be as simple as disclosure of financial information or as complex as a power outage or physical destruction due to failure of a control system.

Project Purpose

The purpose of this project is to protect critical system data, specifically user credentials, and the associated functions necessary for establishing a secure connection with a remote server, from a compromised operating system. These protections will leverage advances in hardware-provided security to control access to the protected data and functions.

Project Statement

This project will seek to improve the security of critical data by leveraging the features of Intel’s Trusted Execution Technology (TXT) and Trusted Platform Modules (TPMs). This project will use Flicker, a dynamic software environment with a minimal TCB developed by Carnegie Mellon University (CMU), to store and protect critical data from the OS. Flicker provides an execution environment and functions for accessing the protected data. This software environment is not intended to replace conventional systems; rather, it is intended to operate alongside them while giving sensitive data a level of protection that was previously unavailable. Because the trusted module must operate alongside a conventional OS, an Application Programming Interface (API) must also be developed to provide a controlled interface between the trusted software module and the running OS.

The focus of this project will be integrating previously developed code and developing the additional functionality needed to complete the establishment of an SSL/TLS connection. A single Flicker module will be created with the capabilities needed to establish the SSL/TLS connection, and a web browser will use it when communicating with sensitive websites.

This project will specifically involve:

- Suspending all activity on the computer and turning control over to a trusted software module

- Resuming a running OS and restoring all associated system data

- Developing a trusted software module capable of validating a server certificate and computing the cryptographic operations of an SSL/TLS handshake without any services from the host OS

- Integrating the browser with the trusted software module to establish a secure remote connection
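The handshake item above can be made concrete with a short sketch. It computes one of the cryptographic operations a trusted module would own: the `verify_data` of a TLS 1.2 client Finished message, derived with the SHA-256 PRF defined in RFC 5246. The `TrustedModule` class is a hypothetical stand-in for the Flicker module, not Flicker's actual interface. The host OS supplies only the handshake transcript and receives only the 12-byte result, while the master secret stays inside the object. Python provides no real isolation, so the class only models which data crosses the OS/module boundary.

```python
import hashlib
import hmac


def p_sha256(secret: bytes, seed: bytes, length: int) -> bytes:
    """TLS 1.2 P_SHA256 data-expansion function (RFC 5246, section 5)."""
    out = b""
    a = seed  # A(0) = seed
    while len(out) < length:
        a = hmac.new(secret, a, hashlib.sha256).digest()  # A(i) = HMAC(secret, A(i-1))
        out += hmac.new(secret, a + seed, hashlib.sha256).digest()
    return out[:length]


def prf(secret: bytes, label: bytes, seed: bytes, length: int) -> bytes:
    """TLS 1.2 PRF(secret, label, seed) = P_SHA256(secret, label + seed)."""
    return p_sha256(secret, label + seed, length)


class TrustedModule:
    """Hypothetical stand-in for the trusted module (not Flicker's real API).

    The master secret is held inside the module; callers on the untrusted
    side only ever receive derived values such as verify_data.
    """

    def __init__(self, master_secret: bytes) -> None:
        if len(master_secret) != 48:
            raise ValueError("TLS master secret must be 48 bytes")
        self._master_secret = master_secret

    def client_finished(self, handshake_messages: bytes) -> bytes:
        """Return the 12-byte verify_data for the client Finished message."""
        transcript_hash = hashlib.sha256(handshake_messages).digest()
        return prf(self._master_secret, b"client finished", transcript_hash, 12)
```

In a real design, the master secret would itself be derived inside the module from the premaster secret. It would therefore never exist in OS memory, and only the transcript and the Finished value would pass through the API.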

Project Deliverables

This project will include the following deliverables:

- All developed source code

- Documentation covering the design, implementation, and lessons learned from the project

- Unit test suite for the SSL/TLS API

- A demonstration showcasing a web browser utilizing the trusted module to provide protected access to sensitive data

- A technical presentation covering the design and implementation of the trusted software module

- A written paper (optional academic paper for submission to a workshop)

Benefit to Students

Students will learn a great deal about the details of modern x86 architectures, developing low-level code, computer software security concepts, and current research challenges in the field of security. Of particular benefit, students will learn how to use hardware-enabled security and leverage the TPM. Both of these technologies are anticipated to provide the basis for many trusted computing environments and other security applications in the coming years.

Suggested Team Qualifications

- Interest in computer security (basic knowledge is beneficial)

- Basic background in operating systems

- Experience with x86 assembly and C programming

- Basic understanding of computer architectures

- Experience with Linux

Suggested Elective Courses

- CSC 405 Introduction to Computer Security or CSC 474 Information Systems Security

About APL

The Applied Physics Laboratory (APL) is a not-for-profit center for engineering, research, and development. Located north of Washington, DC, APL is a division of one of the world's premier research universities, The Johns Hopkins University (JHU). APL solves complex research, engineering, and analytical problems that present critical challenges to our nation. Our sponsors include most of the nation’s pivotal government agencies including the Department of Defense (DoD), the Department of Homeland Security (DHS), and the National Security Agency (NSA).

Project requires a Non-Disclosure Agreement to be signed

QoS Counter Visualization

NetApp released ONTAP version 8.0 in 2010, providing a multi-controller clustered storage solution. Cluster QoS (Quality of Service) is adding significant new instrumentation that will help us drill down into and understand performance bottlenecks in NetApp's Data ONTAP operating system. Our instrumentation covers every part of the datapath, from the client-facing protocols through the cluster interconnect, file system, RAID, and storage layer.

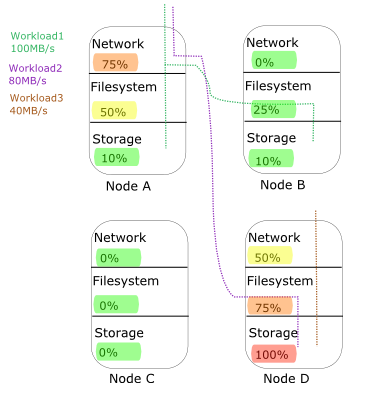

One potential application for the instrumentation is a "traffic congestion map" of ONTAP. This is a visualization of the components of a cluster. The visualization shows the utilization of various cluster resources, color-coded to highlight bottlenecks. Dataflow paths are overlaid to identify which workloads are responsible for the utilization (see figure below).

The proposed student design project is a prototype of this tool. Input to the tool is a text file containing counter manager (CM) data in XML format. Output from the tool is a bitmap file with a picture similar to the mockup.

Any technology the students like and are familiar with is fine. NetApp would be interested in seeing a browser-based JavaScript application, which could eventually be extended to collect data in real time. A simpler and more practical approach would be a Python script that outputs PNG images. NetApp also envisions this as an iPhone/iPad app, which would be a valuable stretch goal for the students.

Prerequisites

- Basic understanding of operating systems and workload performance

- Analysis: not much technical depth is needed, but enough background to understand what a network or file system layer is, what we mean by CPU and disk utilization, and what we mean by workloads.

- Opening and parsing XML files

- Filtering and aggregating statistics

- Using a graphics library to draw the picture
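The parsing and aggregation steps above can be sketched with Python's standard `xml.etree.ElementTree`. NetApp's actual counter manager XML format is not described here. The element and attribute names below (`sample`, `node`, `resource`, `utilization`, `workload`, `visits`) are therefore a hypothetical schema chosen for illustration, and the parser would need to be adapted to the real files.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict

# Hypothetical input format; the real CM XML schema will differ.
SAMPLE_XML = """
<counters>
  <sample node="node1" resource="filesystem" utilization="60">
    <workload name="db" visits="120"/>
    <workload name="mail" visits="4"/>
  </sample>
  <sample node="node1" resource="filesystem" utilization="80">
    <workload name="db" visits="100"/>
  </sample>
</counters>
"""


def aggregate(xml_text: str):
    """Average utilization per (node, resource) and visit rate per workload.

    Returns (utilization, visits):
      utilization[(node, resource)] -> mean utilization across samples
      visits[(workload, node, resource)] -> mean visits/second across that
      resource's samples (samples where the workload is absent count as 0)
    """
    root = ET.fromstring(xml_text.strip())
    util_sum = defaultdict(float)
    sample_count = defaultdict(int)
    visit_sum = defaultdict(float)
    for sample in root.iter("sample"):
        key = (sample.get("node"), sample.get("resource"))
        util_sum[key] += float(sample.get("utilization"))
        sample_count[key] += 1
        for wl in sample.iter("workload"):
            visit_sum[(wl.get("name"),) + key] += float(wl.get("visits"))
    utilization = {k: util_sum[k] / sample_count[k] for k in util_sum}
    # k[1:] drops the workload name to recover the (node, resource) key.
    visits = {k: v / sample_count[k[1:]] for k, v in visit_sum.items()}
    return utilization, visits
```

Averaging over samples is only one choice. The real tool might instead use the latest sample or compute rates from counter deltas, depending on how the CM data is recorded.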

Benefits to students

- Requires relatively little knowledge of NetApp specific technologies

- Can be broken into discrete milestones (data parsing, analysis, visualization)

- Visualizations are fun!

Benefits to NetApp

- We can use this tool when doing demos of QoS

Details

- Input is an XML file of CM data provided by NetApp

- Output is a picture showing up to four nodes, each broken up into Network/Filesystem/Storage

- The resources are annotated with the utilization percentage and colored <=25% green, 25-75% yellow, 75-90% orange, 90%+ red

- A workload is said to be using a resource if it makes more than 10 visits/second to that resource. Draw a spanning tree that connects all resources used by a workload and give it a unique color.

- Draw the name of the workload and its total throughput on the side

- Support for up to 5 workloads
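The drawing rules in the list above can be expressed as small functions before any graphics library is involved. The spec leaves boundary handling open, so this sketch assumes that exactly 25% and 75% fall in the lower band and that exactly 90% is red. It also takes hypothetical (x, y) drawing positions for each resource. It then uses Prim's algorithm to build a minimum spanning tree over a workload's used resources, with the Euclidean distance between positions as the edge weight. The spec only asks for a spanning tree; minimizing the total drawn line length is one reasonable choice.

```python
import math

VISIT_THRESHOLD = 10.0  # visits/second; a workload "uses" a resource above this


def color_for(utilization: float) -> str:
    """Map a utilization percentage to a band color (boundary handling assumed)."""
    if utilization <= 25:
        return "green"
    if utilization <= 75:
        return "yellow"
    if utilization < 90:
        return "orange"
    return "red"


def resources_used(visits_per_sec: dict) -> list:
    """Resources a workload visits more than VISIT_THRESHOLD times per second."""
    return sorted(r for r, rate in visits_per_sec.items() if rate > VISIT_THRESHOLD)


def spanning_tree(resources: list, positions: dict) -> list:
    """Prim's algorithm on the complete graph of the used resources.

    Edge weight is the Euclidean distance between drawing positions, so the
    result is the set of connecting lines with minimum total length.
    Returns a list of (resource_a, resource_b) edges.
    """
    if not resources:
        return []
    in_tree = {resources[0]}
    edges = []
    while len(in_tree) < len(resources):
        best = None
        for a in in_tree:
            for b in resources:
                if b in in_tree:
                    continue
                d = math.dist(positions[a], positions[b])
                if best is None or d < best[0]:
                    best = (d, a, b)
        _, a, b = best
        in_tree.add(b)
        edges.append((a, b))
    return edges
```

For each workload, `spanning_tree(resources_used(visits), positions)` yields the line segments to draw in that workload's color. `color_for` gives the fill for each resource box.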

Possible future extensions

- Mobile app

- Real-time visualization

Logging Wildlife Visits

Background

Wildlife study experts depend on several technologies to gather information about wildlife movements. This information is then used to address basic and applied questions about wildlife ecology, habitat use, conservation, production, harvest, and interactions with people.

Technologies used in these studies include GPS, cell and satellite data transmission, and radio-based direction finding. Unfortunately, these technologies have drawbacks that can limit their usefulness in many situations. GPS data can be imprecise because of signal attenuation caused by the thick foliage under which many animals live, among other factors. Further, once data are obtained (they are typically stored in collars on the animals), recovering them is not simple, for several reasons. Cell and satellite data streaming is possible, but expensive, and radio direction finding to locate and recover data-bearing collars is labor intensive and time consuming.

Once the data are in hand, the overall goal of a wildlife study is analysis. For example, given a series of GPS coordinates and corresponding time stamps, an animal's track can be registered as an overlay on a map. One can then draw conclusions about its movements, habits, and so on.

However, many situations could produce useful information if we invert the study process and ask: for a given known location, what is the wildlife visitation profile? GPS data are sufficient for such a study, but not necessary. Consider instead a wireless sensor network that could easily be set up around an area of interest. If animal collars were equipped with radio transmitters, the network could record the animal's ID and the time of each visit whenever an animal came within range. For some types of wildlife studies, this approach could have cost and time advantages over GPS-based studies.

This brings us to the goal of this project:

Design, implement, and test a visitation-oriented wireless sensor system, as described above. A suggested high-level design would include two types of nodes. The first is a stationary network node that broadcasts “Are you there?” messages on a regular basis and records any answers it receives. The second is a battery-powered mobile node, encased in an animal collar, that listens for “Are you there?” messages on a regular schedule and responds when it receives one. The energy cost of computation is an important design parameter of this project. The team should therefore construct experiments to measure the current draw (battery drain) for various software-controlled wake, sleep, transmit, and receive intervals.

Engineering trade-offs must be worked out jointly, at the system design level, with an ECE senior design team that will be assigned to collaborate on the project. The stretch goal of the project is a prototype mobile node with a one-year life. Harvested energy sources, such as movement or solar, may be necessary to achieve this goal. Alternative energy management designs for the fixed nodes are also of interest.
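The current-draw experiments described above feed directly into a battery-life estimate. Average current is the time-weighted mean of the current drawn in each state (sleep, listen, transmit), and life is capacity divided by that average. The sketch below does this arithmetic. The state names, currents, durations, and the 2400 mAh capacity are hypothetical placeholders to be replaced with the team's measurements. The sketch also ignores battery self-discharge, temperature effects, and the occasional transmit when a collar responds.

```python
def average_current_ma(schedule: dict) -> float:
    """Time-weighted average current for one repeating wake/sleep cycle.

    schedule maps a state name to (current_mA, seconds_spent_per_cycle).
    """
    total_charge = sum(current * secs for current, secs in schedule.values())
    total_time = sum(secs for _, secs in schedule.values())
    return total_charge / total_time


def battery_life_days(capacity_mah: float, avg_current_ma: float) -> float:
    """Idealized life: capacity / average draw (no self-discharge, no derating)."""
    return capacity_mah / avg_current_ma / 24.0


# Hypothetical collar schedule: listen 10 ms every 10 s, sleep the rest.
collar = {
    "listen": (20.0, 0.010),
    "sleep": (0.005, 9.990),
}
avg = average_current_ma(collar)
print(f"average draw {avg:.4f} mA, about {battery_life_days(2400, avg):.0f} days")
```

For the one-year stretch goal on a hypothetical 2400 mAh cell, the average draw would need to stay below 2400 / 8760 ≈ 0.27 mA. The listen window length and interval are therefore the main knobs to measure.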

Programming will be done in C or other suitable language, as determined by the team.

Web KPIs

Metrics are an important part of running an organization. Prometheus Group offers a solution for viewing Key Performance Indicators (KPIs) from within the SAP environment (SAP is the world’s leading enterprise software package). The issue is that many higher-level individuals in an organization do not even log into the SAP GUI; they want a quick and easy way to view their metrics from outside it. Our goal is to take our existing KPI engine inside SAP and recreate its user experience in a web browser, on desktops and mobile devices alike. This will give our customers quick access to any of their company’s metrics.

You will learn

- SAP/ABAP

- SOAP/XML

- HTML/CSS/JS/JSP

Prometheus will provide access to an SAP system and instruction on its use.

HTML5 Application Development

Project Description

The goal of this project is to evaluate, compare, and contrast the following HTML-based libraries (in priority order):

- PrimeFaces (showcase)

- GWT (showcase)

- Dojo (showcase)

- Sencha Ext-JS (showcase) (using a trial copy)

Using something like the Flex Store application (a sample provided by Adobe to showcase Flash technology) as a model, we’d like a similar application built with each of the above-mentioned technologies. More details regarding the functional requirements for the resulting application will be provided. The end result should illustrate how HTML5 technology can compete against other RIA (Rich Internet Application) tools and frameworks.

While building the application with each of the libraries, please use the following evaluation criteria:

- Learning Curve

- Speed of Development

- Tooling (e.g., IDE availability, Eclipse plugins)

- Documentation

- Richness of API:

  - Model

  - View

  - Controller

  - Layouts

- Debugging

- Quality of cross-browser support (Desktop and mobile)

- Quality of generated code: is it standards-compliant?

- Maintainability of code

- Theme Support

- Component development:

  - Ease of extending an existing component

  - Ease of creating a new/composite component

- Release Strategy:

  - Can a community make changes?

  - Are update schedules available?

  - Are updates on any kind of regular schedule?

- Testability: unit and automated

- Widget library

- Degree of Section 508 accessibility support (ARIA support)

- Degree of I18N support (including Bidirectional text support)

- Scalability

A Personalized Special Offer Targeting System

Project Overview

The goal of the project is to develop an application that leverages an individual’s social activity, influence, and location to provide relevant offers and promotions. The application will integrate with foursquare for location-based information, with Klout for social influence ratings, and with Twitter/Facebook for social activity. For example, a user who checks into a venue on foursquare will receive an offer based on the user’s location, relationship with the venue, social connectedness, and influence.

Project Organization

The project will be run within the Agile Scrum framework. Agile development provides the benefit of early and consistent customer engagement. The Teradata representative will serve as the Product Owner for the Scrum team, providing application requirements and assisting with backlog grooming and acceptance criteria. Development will occur in two-week Sprints. Task planning will occur at the beginning of each Sprint, and checkpoints and backlog grooming will be scheduled mid-Sprint. Progress will be demonstrated at the end of each Sprint in a Sprint review.

Company Background

Teradata is the world's largest company focused on integrated data warehousing, big data analytics and business applications. Our powerful solutions portfolio and database are the foundation on which we’ve built our leadership position in business intelligence and are designed to address any business or technology need for companies of all sizes.

Only Teradata gives you the ability to integrate your organization’s data, optimize your business processes, and accelerate new insights like never before. The power unleashed from your data brings confidence to your organization and inspires leaders to think boldly and act decisively for competitive advantage. Learn more at teradata.com.

Transit Crowd II

TransLoc was formed by NCSU students with the goal of revolutionizing campus transit. Today, universities all over the country use the technology first used here on the Wolfline.

Continuing our innovation in campus and public transit, we are looking to create an easy way for riders to communicate with each other about delays, accidents, and other out-of-the-ordinary situations. During this semester, your team will add essential features to TransitCrowd, our mobile (iPhone and Android) app, and its corresponding RESTful API. The app was developed by a prior NCSU Senior Design team, and its features include:

- Locate stops and routes near the rider using the phone's built-in GPS

- Quickly view messages from other users about the route/stop

- Add new messages, attach them to the stop/route, and quickly indicate what is going on from a predefined list of events (bus is late, bus just passed me, etc.).

The features your team will be adding to the mobile app and RESTful API include:

- Tracking how many messages, and of what types, each user has added, and assigning karma points based on them to reward participation

- Adding community moderation tools via upvote/downvote/flag features

- Integrating with Twitter/Facebook/Google+ to allow users to recommend the app to their friends

- Adding features that allow users to comment on messages from other users

- Creating backend scripts that will import transit system data, specified via GTFS feeds, into the TransitCrowd database
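For the GTFS import item, the core of such a script is reading a feed's `stops.txt`. This is a CSV file whose fields include `stop_id`, `stop_name`, `stop_lat`, and `stop_lon` in the GTFS reference. The sketch below turns those rows into plain records ready to be inserted into a database. It is written in Python rather than the project's Node.js to keep one language across these examples. The output record shape is an assumption, and writing to MongoDB is left out.

```python
import csv
from typing import Iterable, List


def parse_stops(lines: Iterable[str]) -> List[dict]:
    """Parse GTFS stops.txt rows into records with numeric coordinates.

    Rows without coordinates (GTFS allows this for some location types,
    such as generic nodes and boarding areas) are skipped, since they
    cannot be shown on a map.
    """
    records = []
    for row in csv.DictReader(lines):
        # `or ""` also covers short rows, where DictReader fills in None.
        lat = (row.get("stop_lat") or "").strip()
        lon = (row.get("stop_lon") or "").strip()
        if not lat or not lon:
            continue
        records.append({
            "stop_id": row["stop_id"],
            "name": row.get("stop_name") or "",
            "location": (float(lat), float(lon)),
        })
    return records
```

A caller would typically open the file with `open("stops.txt", newline="", encoding="utf-8-sig")`, which also strips the byte-order mark that some feeds include, and pass the file object to `parse_stops`.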

This project has the potential to revolutionize how riders use transit systems and communicate with each other. It will help them avoid routes that are running late, or leave earlier to catch a bus that is running early.

This project uses PhoneGap (JavaScript/CSS/HTML5 via jQuery Mobile) for the cross-platform mobile development, Node.js for server-side code and MongoDB for data storage running on Linux servers.

2D iPhone Game

The purpose of designing and creating a 2D iPhone game is to get the NCSU student team acclimated to the iOS platform and to work toward shipping the game to the App Store by the end of the semester.

The student team will create a 2D side-scrolling (or vertically scrolling) iPhone game in which the goal is to dodge obstacles. The game is viewed from a side (or top-down) perspective. Game mechanics will include tilting the device (or pressing a button) to move the character, avoid obstacles, and obtain power-ups.

As the player progresses through the infinite level, their speed will slowly increase until a maximum speed is reached. The game ends when the player collides with an obstacle. The score will be calculated from the distance the player travels before hitting an obstacle. The player should also be able to earn achievements for reaching milestones in the game.
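The speed and scoring rules above fit in a small per-frame update. This sketch assumes hypothetical constants (starting speed, acceleration, and maximum speed, all in points per second) and a score equal to the distance travelled, truncated to whole points. It is written in Python only to match the other examples here; the actual game would implement the same update in its iOS game loop.

```python
from dataclasses import dataclass

START_SPEED = 100.0   # hypothetical, points/second
ACCELERATION = 5.0    # hypothetical, points/second per second
MAX_SPEED = 400.0     # hypothetical cap


@dataclass
class Run:
    speed: float = START_SPEED
    distance: float = 0.0
    alive: bool = True

    def update(self, dt: float, collided: bool = False) -> None:
        """Advance one frame of dt seconds; a collision ends the run."""
        if not self.alive:
            return
        if collided:
            self.alive = False
            return
        self.distance += self.speed * dt
        # Speed ramps up linearly until it reaches the cap.
        self.speed = min(MAX_SPEED, self.speed + ACCELERATION * dt)

    @property
    def score(self) -> int:
        """Score is the distance travelled, truncated to whole points."""
        return int(self.distance)
```

Keeping the rules in one update method makes it easy to tune the ramp and cap during play-testing without touching rendering code.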

The students will have some creative freedom in theming the game and will receive guidance from the Two Toasters team.

About Two Toasters

Two Toasters is a premier mobile product design firm focused on mobile strategy and on developing applications for the iOS and Android platforms. We are the mobile powerhouse behind some of the leading venture-backed firms and automotive companies in the US. Our focus on a developer-centric culture allows for a creative and technical environment that cultivates expert mobile developers.

Project requires an Intellectual Property Agreement to be signed

Refactoring Tools for Visual Studio Using Roslyn

Background

Software developers commonly practice refactoring, the process of restructuring software without changing the way it behaves, on a day-to-day basis. However, because manual refactoring is slow and error prone, researchers have proposed tools that semi-automatically refactor code. Since these tools were first proposed 20 years ago, refactoring tools have been built for a variety of integrated development environments. Researchers have built on top of these tools to explore new types of program transformations and new ways of helping software developers do their work. While some refactoring tools exist for Visual Studio, none are open source, making it difficult for researchers to do refactoring research in Visual Studio and .NET languages.
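The sketch below illustrates what "restructuring without changing behavior" means for a tool. It performs a rename-variable refactoring on Python source using the standard `ast` module (Python 3.9+ for `ast.unparse`). It is neither Roslyn nor C#; it only shows the general shape of a refactoring tool: check preconditions, transform the syntax tree, and regenerate code. Its precondition is deliberately naive, refusing the rename if the new name appears anywhere. It also ignores scoping, and `ast.unparse` drops comments and original formatting. Handling these details is what makes production refactoring tools hard to build.

```python
import ast
import keyword


class _Renamer(ast.NodeTransformer):
    """Rewrite every variable reference or parameter named `old` to `new`."""

    def __init__(self, old: str, new: str) -> None:
        self.old, self.new = old, new

    def visit_Name(self, node: ast.Name) -> ast.Name:
        if node.id == self.old:
            node.id = self.new
        return node

    def visit_arg(self, node: ast.arg) -> ast.arg:
        if node.arg == self.old:
            node.arg = self.new
        return self.generic_visit(node)  # also visit any annotation


def rename_variable(source: str, old: str, new: str) -> str:
    """Naive whole-module rename; ignores scopes, attributes, defs, and strings."""
    if not new.isidentifier() or keyword.iskeyword(new):
        raise ValueError(f"{new!r} is not a valid identifier")
    tree = ast.parse(source)
    in_use = {n.id for n in ast.walk(tree) if isinstance(n, ast.Name)}
    in_use |= {n.arg for n in ast.walk(tree) if isinstance(n, ast.arg)}
    # Precondition: refuse if the new name already exists, since renaming
    # could then merge two distinct variables and change behavior.
    if new in in_use:
        raise ValueError(f"name {new!r} already in use")
    return ast.unparse(_Renamer(old, new).visit(tree))
```

For example, `rename_variable("def f(x):\n    total = x + 1\n    return total\n", "total", "result")` yields a function that behaves identically but uses `result`. Renaming `total` to `x` is rejected by the precondition.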

Outcome

In this project, a team of 3-4 students will build several open-source refactoring tools using Roslyn, a new technology developed at Microsoft.[5] Roslyn already includes appropriate infrastructure for building refactoring tools, as well as an actively moderated forum for asking Roslyn-related questions.[6] Such tools will provide a solid foundation for other researchers to build upon. Depending on the team's interest and progress, the students may also create their own experimental refactoring tools.

Challenge

This project will be a challenge for the team because refactoring tools are highly sophisticated; building the first one was the subject of an entire Ph.D. dissertation! However, better refactoring infrastructure, such as that provided by Roslyn, has recently made this process easier. The project has a highly flexible scope, but students are expected to implement several refactoring tools.

Student Benefits

Completion of the project will have three benefits for students, beyond the standard benefits of any capstone project. First, students will work on an open-source project that potential employers can easily view and inspect. Students may also choose to continue contributing to the open-source project after the semester is complete, allowing them to engage more thoroughly with the open-source community. Second, students will get a small taste of research experience as undergraduates.

Research Benefits

This project fits into a larger research agenda of creating more developer-friendly programming tools. More specifically, Dr. Murphy-Hill’s research lab at NCSU would like to build new refactoring tools for Visual Studio (the group currently works exclusively in Eclipse), but there is no solid set of existing open-source refactoring tools for Visual Studio to build on. This project will provide that base.

PAS-X User Rights Management Configurator

Problem

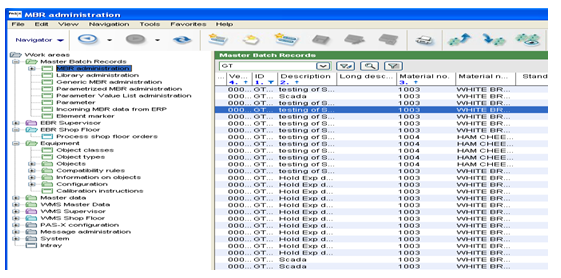

Werum develops a Manufacturing Execution Software for the Pharmaceutical industry, called PAS-X that is used by the largest drug manufacturers in the world. Its new J2EE based solution uses an Outlook type interface, where user functionality (in the form of dialogs is selected from the tree structure (see images below). PAS-X provides a mechanism to assign rights to users so that they can only access the dialogs for which they are trained. Unfortunately, the assignment of users’ rights is decoupled from the dialog selection structure and creates a lot of confusion with users.

The goal of this project is to develop a GUI framework that mimics the dialog structure of the application (or other specific rights) but provides a spreadsheet-like way to assign dialog rights to various user groups (users are then assigned to user groups). The project team should build on top of what the previous NCSU team did, particularly when it comes to interfacing with PAS-X.

Activities

- Learn the user rights management concepts behind the PAS-X V3 tool

- Develop a user rights management GUI framework that is logical and very easy to use (a spreadsheet-style approach to assignments); the framework should be web-based so that various people can remotely define and review an assignment before PAS-X is configured

- Develop an interface to PAS-X to read the dialog structure configuration

- Develop an interface to compile the selected user rights assignments into a PAS-X configuration

- Document the project life cycle approach (User Requirements, Functional Specification, Design, System & User Manuals)
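The read, assign, and compile activities above can be sketched as a small data pipeline. First, the dialog tree is flattened into spreadsheet rows. Next, the checked cells are held as a set of (user group, dialog) pairs. Finally, those pairs are compiled into a list of grant records. The tree shape, group names, and output record format are all hypothetical. The real PAS-X configuration format and service interface would come from Werum and from the previous NCSU team's work.

```python
from typing import List, Set, Tuple


def flatten(tree: dict, prefix: str = "") -> List[str]:
    """Turn a nested dialog tree into '/'-separated leaf paths (spreadsheet rows).

    Folders map to sub-dicts; dialogs (leaves) map to None.
    """
    rows = []
    for name, children in tree.items():
        path = f"{prefix}/{name}" if prefix else name
        if isinstance(children, dict):
            rows.extend(flatten(children, path))
        else:
            rows.append(path)
    return rows


def compile_grants(rows: List[str], checked: Set[Tuple[str, str]]) -> List[dict]:
    """Compile checked (group, dialog) cells into sorted grant records.

    Raises ValueError for cells that reference a dialog not in the tree,
    e.g. because the PAS-X configuration changed after the matrix was edited.
    """
    known = set(rows)
    unknown = sorted(d for _, d in checked if d not in known)
    if unknown:
        raise ValueError(f"unknown dialogs: {unknown}")
    return [{"group": g, "dialog": d} for g, d in sorted(checked)]
```

Validating against the flattened rows before compiling means a stale assignment matrix fails loudly, instead of silently producing a partial PAS-X configuration.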

The figure below shows our existing user interface (dialog structure - subset) in PAS-X:

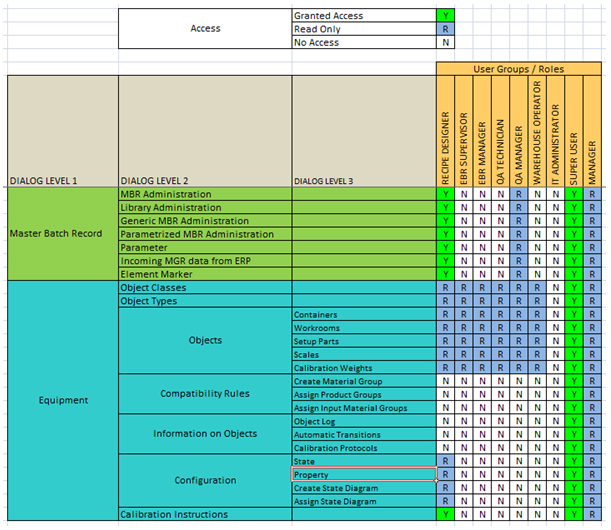

Here is a visualization of the user tool, to be developed as a web application or Java app, that people would use to define and assign user rights (associating dialogs with user groups):

Once this is done, the application needs to configure PAS-X using a service-based approach.

Company Profile

Werum Software & Systems is the world's leading supplier of Manufacturing Execution Systems (MES) for the pharmaceutical and biopharmaceutical industries.

US Regional Offices, Cary, NC
